A PyTorch Implementation Of SimCLR

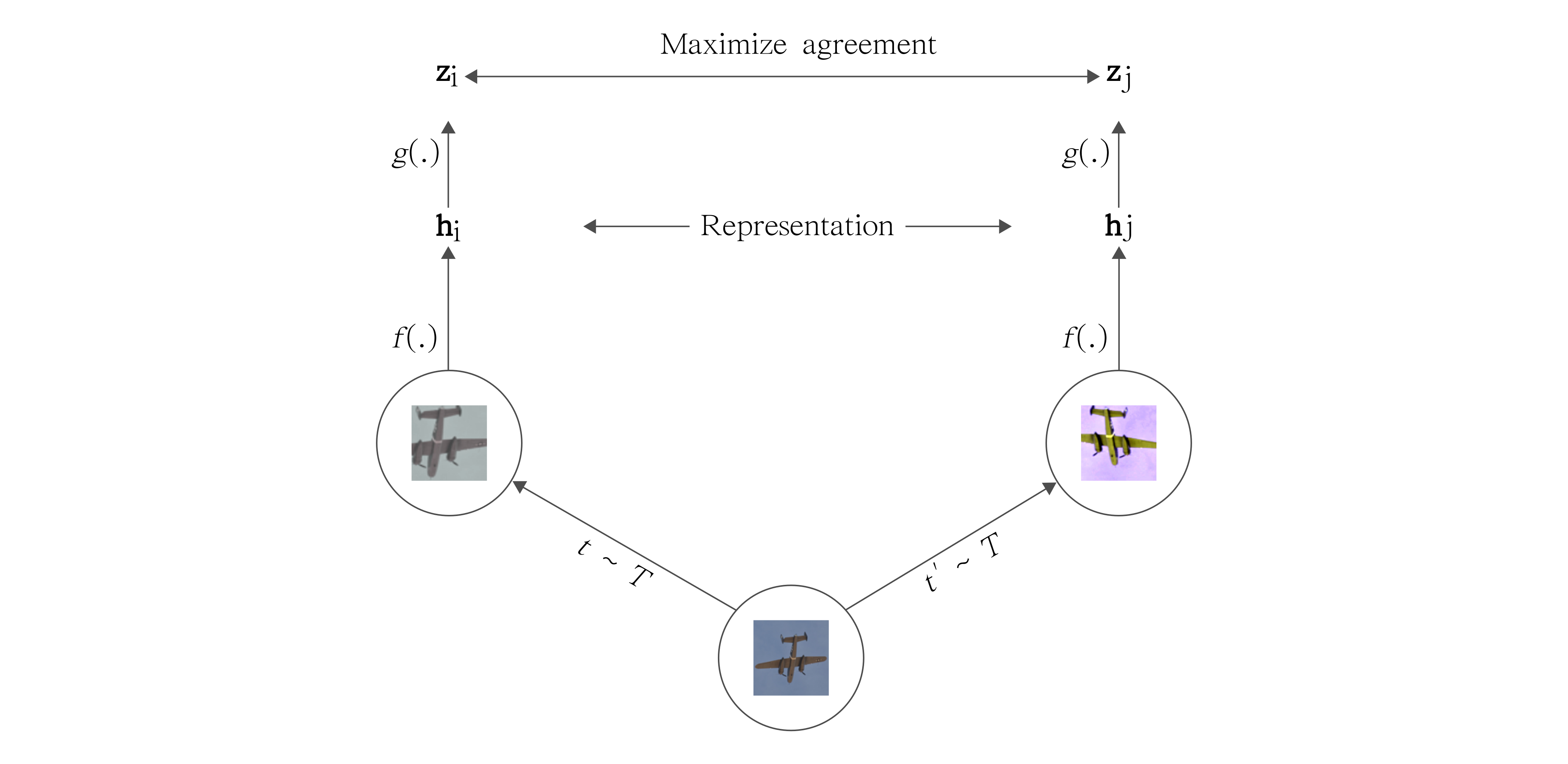

SimCLR, short for "A Simple Framework for Contrastive Learning of Visual Representations," is a self-supervised learning framework that learns high-quality image representations without requiring manual labels. It relies on contrastive learning: the model is trained to pull together augmented views of the same image and push apart views of different images in a learned feature space.

    /
    ├── Sim_CLR/
    │   ├── Adam/
    │   │   ├── Contrastive Training Loss per Epoch.png
    │   │   ├── SIMclr_confusion_matrix.png
    │   │   ├── training_validation_metrics_finetuning_simclr.png
    │   │   ├── tsne_finetuning_dataset.png
    │   │   ├── tsne_test_dataset.png
    │   │   └── Adam.ipynb
    │   ├── SGD/
    │   │   ├── Contrastive Training Loss per Epoch.png
    │   │   ├── SIMclr_confusion_matrix.png
    │   │   ├── training_validation_metrics_finetuning_simclr.png
    │   │   ├── tsne_finetuning_dataset.png
    │   │   ├── tsne_test_dataset.png
    │   │   └── SGD.ipynb
    │   └── Augmentation.png
    ├── Supervised_Resnet18_as_backbone/
    │   ├── supervised_ Resnet18 as Feature Extractor_confusion_matrix.png
    │   ├── training_validation_metrics_supervised.png
    │   ├── tsne_finetuning_dataset_pretrained_resnet18.png
    │   ├── tsne_test_dataset_pretrained_resnet18.png
    │   └── Supervised.ipynb
    ├── .gitignore
    ├── LICENSE
    ├── README.md
    └── requirements.txt

This implementation uses the CIFAR-10 dataset (https://www.cs.toronto.edu/~kriz/cifar.html). The backbone for contrastive pretraining is ResNet-18 (pretrained=False). Before pretraining, two types of augmentation were applied to the dataset: random cropping and color jittering.

Dataset Augmentation

The backbone is then pretrained for 100 epochs with the NT-Xent loss (normalized temperature-scaled cross-entropy loss), once using Adam (Adam.ipynb) and once using Nesterov accelerated SGD (SGD.ipynb).

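For reference, the NT-Xent loss can be written in a few lines of PyTorch. This is a sketch under assumptions: the function name `nt_xent_loss` and the temperature of 0.5 are not taken from the notebooks.

```python
import torch
import torch.nn.functional as F


def nt_xent_loss(z1, z2, temperature=0.5):
    """NT-Xent loss for two batches of projections, each of shape (N, D).

    Row i of z1 and row i of z2 are two views of the same image.
    """
    n = z1.size(0)
    # L2-normalise so that dot products are cosine similarities.
    z = F.normalize(torch.cat([z1, z2], dim=0), dim=1)  # (2N, D)
    sim = z @ z.t() / temperature                       # (2N, 2N)
    # A sample must not be compared with itself.
    self_mask = torch.eye(2 * n, dtype=torch.bool, device=z.device)
    sim = sim.masked_fill(self_mask, float("-inf"))
    # The positive for row i is row i + N, and for row i + N it is row i.
    targets = torch.cat([torch.arange(n, 2 * n), torch.arange(0, n)]).to(z.device)
    # Cross-entropy over each row: the positive against all other samples.
    return F.cross_entropy(sim, targets)


# Usage with random 128-dimensional projections for a batch of 8 images.
torch.manual_seed(0)
z1, z2 = torch.randn(8, 128), torch.randn(8, 128)
loss = nt_xent_loss(z1, z2)
```

Treating the problem as a 2N-way classification lets `F.cross_entropy` handle the log-softmax over negatives, which is numerically more stable than computing the exponentials by hand.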

After pretraining, I discarded the projection head, added a finetuning head, and finetuned it on 6500 labeled datapoints for 20 epochs using the Adam optimizer.

I also implemented a supervised baseline (Supervised.ipynb). I first downloaded an ImageNet-pretrained ResNet-18 and used it as a feature extractor for the 6500 labeled datapoints, then built a finetuning head and finetuned it on those extracted features.

- First, make sure your project environment contains all the necessary dependencies by running `pip install -r requirements.txt`.

- Then, to run a notebook, download it and run its cells sequentially.

- To use the trained models, download them from the links given below and change the paths in the notebooks accordingly.

- SimCLR_Adam:
  - simclr_backbone.pth: The ResNet-18 (pretrained=False) backbone, pretrained with contrastive learning using the Adam optimizer.
  - simclr_projection_head.pth: The projection head used during contrastive pretraining to project the images into 128 dimensions and compute the NT-Xent loss. I discarded it after pretraining.
  - finetuned_model.pth: The finetuning head, finetuned on 6500 labeled datapoints after their features were extracted by the contrastively pretrained ResNet-18.
- SimCLR_SGD:
  - simclr_backbone.pth: The ResNet-18 (pretrained=False) backbone, pretrained with contrastive learning using the Nesterov accelerated SGD optimizer.
  - simclr_projection_head.pth: The projection head used during contrastive pretraining to project the images into 128 dimensions and compute the NT-Xent loss. I discarded it after pretraining.
  - finetuned_model.pth: The finetuning head, finetuned on 6500 labeled datapoints after their features were extracted by the contrastively pretrained ResNet-18.
- Supervised:
  - supervised_model.pth: The finetuning head of the supervised approach, applied to features extracted by the ImageNet-pretrained ResNet-18.
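Loading one of these files could look like the sketch below. It assumes each `.pth` file stores a `state_dict` rather than a whole pickled model, which this README does not confirm. The `load_weights` helper is hypothetical, and a small `nn.Linear` stands in for the real architecture so the example is self-contained.

```python
import os
import tempfile

import torch
import torch.nn as nn


def load_weights(model, checkpoint_path):
    """Load a saved state_dict into `model` and switch it to eval mode."""
    state_dict = torch.load(checkpoint_path, map_location="cpu")
    model.load_state_dict(state_dict)
    model.eval()
    return model


# Demonstration with a stand-in module: save a state_dict, then restore it.
reference = nn.Linear(512, 10)
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "finetuned_model.pth")
    torch.save(reference.state_dict(), path)
    restored = load_weights(nn.Linear(512, 10), path)
```

The module passed to `load_weights` must have the same layer names and shapes as the one that was saved, so the architecture defined in the corresponding notebook should be used when loading the real files.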

All the experiments were performed on the CIFAR-10 dataset.

| Method | Batch Size | Backbone | Projection Dimension | Contrastive Pretraining Epochs | Finetuning Epochs | Optimizer | Test Accuracy | Test F1 Score |
|---|---|---|---|---|---|---|---|---|
| SimCLR | 256 | ResNet-18 (pretrained=False) | 128 | 100 | 20 | Adam (pretraining), Adam (finetuning) | 0.6137 | 0.6122 |
| SimCLR | 256 | ResNet-18 (pretrained=False) | 128 | 100 | 20 | NAG (pretraining), Adam (finetuning) | 0.6884 | 0.6854 |
| Supervised | 256 | ResNet-18 (pretrained=True) | 1000 | - | 20 | Adam (finetuning) | 0.7558 | 0.7543 |
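The two reported metrics can be computed from predicted and true labels as sketched below. The averaging mode of the F1 score is not stated here, so macro averaging is an assumption, and the function names and toy labels are made up for illustration.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def macro_f1(y_true, y_pred, num_classes):
    """Unweighted mean of the per-class F1 scores (macro averaging, assumed)."""
    f1_scores = []
    for c in range(num_classes):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denominator = 2 * tp + fp + fn
        # A class that never appears in either list contributes an F1 of 0.
        f1_scores.append(2 * tp / denominator if denominator else 0.0)
    return sum(f1_scores) / num_classes


# Toy example with three classes.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
test_accuracy = accuracy(y_true, y_pred)
test_f1 = macro_f1(y_true, y_pred, num_classes=3)
```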

[Figures: Contrastive Training Loss per Epoch (Adam); Contrastive Training Loss per Epoch (NAG)]

[Figures: Train and Validation Metrics in Finetuning of SimCLR Method; Train and Validation Metrics in Finetuning of Supervised Method]

[Figures: NAG Optimizer Based SimCLR; Supervised Approach]