Street address data from Land Information New Zealand (LINZ) was first imported into OpenStreetMap in 2017. Since then, the imported data has become out of date.

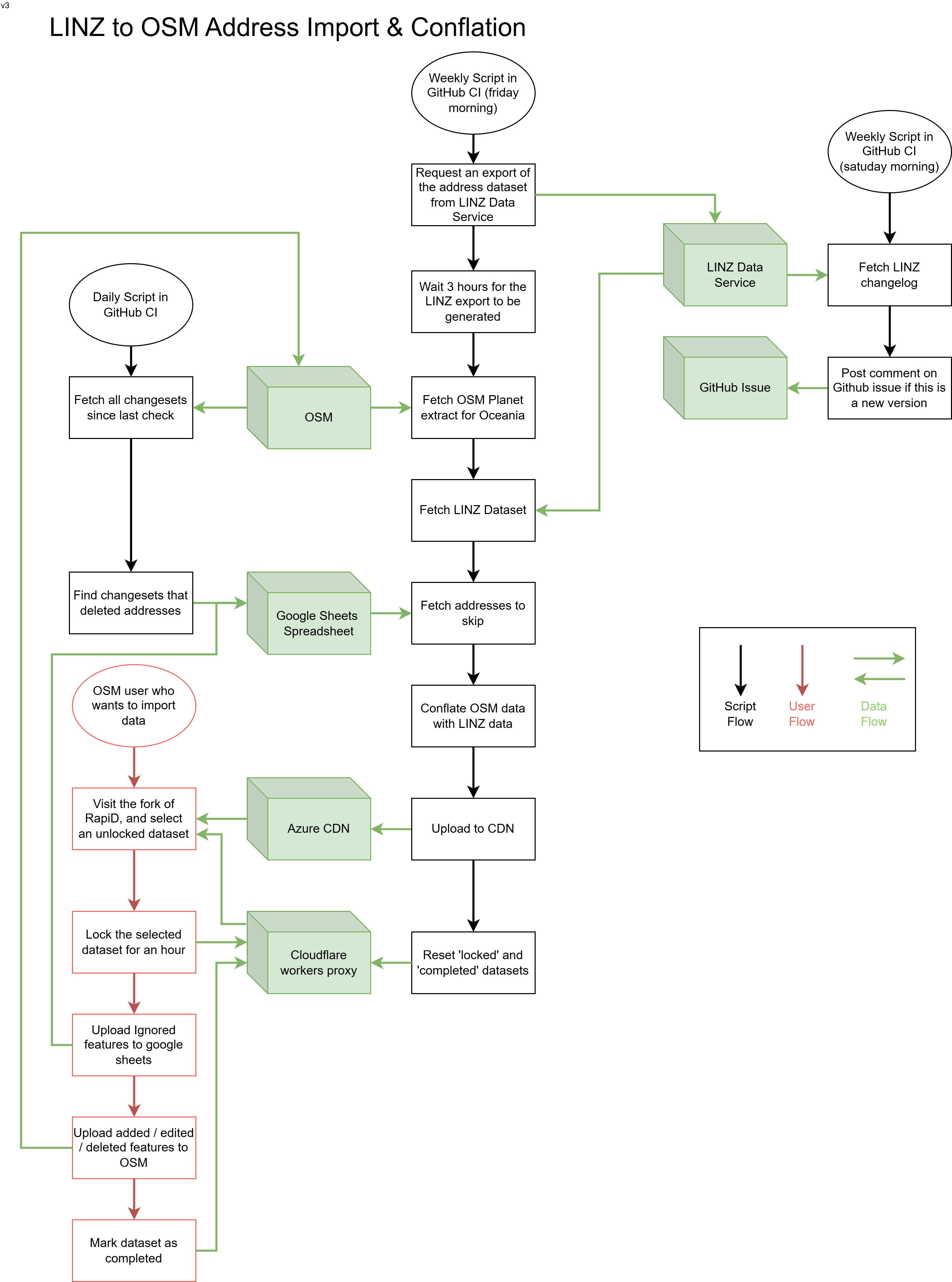

This project aims to update the address data, and to set up a system that regularly updates addresses in OSM by conflating them with the data from LINZ.
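As a rough illustration of what "conflating" means here, the sketch below compares two address lists and sorts the differences into three buckets. It is not the code used in this repository: the `Address` shape, the shared `id` field, and the bucket names are all assumptions made for the example.

```typescript
// Hypothetical, simplified address record. The real datasets have more fields.
interface Address {
  id: string; // ASSUMPTION: a stable identifier shared by both datasets
  housenumber: string;
  street: string;
}

interface ConflationResult {
  missingFromOsm: Address[]; // in LINZ but not in OSM: candidates to add
  changed: { linz: Address; osm: Address }[]; // in both, but details differ
  removedFromLinz: Address[]; // in OSM but no longer in LINZ: candidates to review
}

function conflate(linz: Address[], osm: Address[]): ConflationResult {
  // Index the OSM addresses by id so each LINZ address is a single lookup.
  const osmById = new Map<string, Address>();
  for (const o of osm) osmById.set(o.id, o);

  const linzIds = new Set<string>();
  const result: ConflationResult = {
    missingFromOsm: [],
    changed: [],
    removedFromLinz: [],
  };

  for (const l of linz) {
    linzIds.add(l.id);
    const o = osmById.get(l.id);
    if (o === undefined) {
      result.missingFromOsm.push(l);
    } else if (o.housenumber !== l.housenumber || o.street !== l.street) {
      result.changed.push({ linz: l, osm: o });
    }
  }

  // Anything left in OSM that LINZ no longer lists.
  for (const o of osm) {
    if (!linzIds.has(o.id)) result.removedFromLinz.push(o);
  }

  return result;
}
```

Matching on a shared identifier keeps the comparison linear in the number of addresses. A real conflation would also have to handle addresses that carry no identifier at all.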

🚩 This repository also contains the code used to import topographic and hydrographic data from LINZ. For more info, see the wiki page.

🌏 For more information, please see the wiki page for this project. The rest of this document contains only technical detail. Continue reading if you wish to contribute to the code.

This is a modified version of the RapiD editor, which is itself a modified version of the iD editor.

The source code is in a separate repository (see here). It is available at osm-nz.github.io

If all five status badges at the top of this document are green, then the script is running automatically once a week (on Friday morning NZ time). The results can be viewed and actioned from osm-nz.github.io.

If you want to use the code to manually run the process, follow these steps:

- Clone this repository

- Download nodejs v20.11 or later

- Generate an API key from https://data.linz.govt.nz/my/api with "Full access to Exports Access"

  - Then create a file called `.env` in this folder, and add `LINZ_API_KEY=XXXXX`, where `XXXXX` is the token you just generated.

  - Then repeat this step for https://datafinder.stats.govt.nz/my/api and save it as `STATS_NZ_API_KEY`. This is required for the rural urban boundaries

- Run `npm install`

- Run `npm run request-linz-export` to request an export from the LDS, and wait for it to be generated

- Run `npm run download-linz` to download the requested export

- Download the planet file (for just NZ) by running `npm run download-planet`. This will create `./data/osm.pbf`

- Start the preprocess script by running `npm run preprocess`. This will take ca. 2.5 minutes and create `./data/osm.json` and `./data/linz.json`

- Start the conflate script by running `npm run conflate`. This will take 30 seconds and create `./data/status.json`. Some computationally expensive diagnostics are only generated if you run `npm run conflate -- --full`, which takes 20 times longer.

- Start the action script by running `npm run action`. This will take 20 seconds and generate a large number of files in the `./out` folder

- Upload the contents of the `./out` folder to the CDN by running `npm run upload`. This will take ca. 4 minutes
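Before starting a manual run, it can help to confirm that `.env` holds both keys and to have the commands listed in order. The sketch below is one way to do that. It is not part of this repository: `missingEnvKeys` and `pipelineCommands` are hypothetical helper names, and the parser only understands plain `KEY=value` lines.

```typescript
// The two keys that the setup steps above ask you to put in `.env`.
const REQUIRED_KEYS = ["LINZ_API_KEY", "STATS_NZ_API_KEY"];

// Hypothetical helper: returns the required keys that are absent or empty
// in the given `.env` text. Only simple KEY=value lines are understood.
function missingEnvKeys(envText: string): string[] {
  const present = new Set<string>();
  for (const rawLine of envText.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue; // blank line or comment
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // not a KEY=value line
    const key = line.slice(0, eq).trim();
    const value = line.slice(eq + 1).trim();
    if (value !== "") present.add(key);
  }
  return REQUIRED_KEYS.filter((k) => !present.has(k));
}

// Hypothetical helper: the npm commands from the steps above, in the order
// they are listed. Pass `true` to use the slower `--full` conflate run.
function pipelineCommands(full = false): string[] {
  return [
    "npm install",
    "npm run request-linz-export", // wait for the export to be generated
    "npm run download-linz",
    "npm run download-planet",
    "npm run preprocess",
    full ? "npm run conflate -- --full" : "npm run conflate",
    "npm run action",
    "npm run upload",
  ];
}
```

For example, `missingEnvKeys("LINZ_API_KEY=abc")` returns `["STATS_NZ_API_KEY"]`, which signals that the Stats NZ key still needs to be added.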

There are end-to-end tests that run based on a mock planet file and a mock LINZ CSV file.

To start the tests, run `npm test`. If this changes the contents of the snapshot folder, commit those changes.