
Add initial Grad-CAM implementation and other initial files

Add requirements.txt for faster environment setup.

Fixes on top of https://github.com/jacobgil/keras-grad-cam (as of today, July 12th 2021):

- jacobgil/keras-grad-cam#17 (comment)
- jacobgil/keras-grad-cam#21 (comment)


1 parent 826447b, commit 2a7a7cb

Showing 14 changed files with 258 additions and 0 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,9 @@ | ||

| *.DS_Store | ||

| *.egg-info | ||

| __pycache__ | ||

| docs/build | ||

| .coverage | ||

| htmlcov | ||

| .docker | ||

| .idea/ | ||

| covidnet/models |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,3 @@ | ||

| { | ||

| "python.formatting.provider": "yapf" | ||

| } |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,8 @@ | ||

| pl-grad-cam | ||

| ================================ | ||

|

|

||

| .. contents:: Table of Contents | ||

|

|

||

| Acknowledgement | ||

| --------------- | ||

| Jacob Gildenblat (jacobgil) for initial Grad-CAM implementation using keras: https://github.com/jacobgil/keras-grad-cam |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,21 @@ | ||

| MIT License | ||

|

|

||

| Copyright (c) 2016 Jacob Gildenblat | ||

|

|

||

| Permission is hereby granted, free of charge, to any person obtaining a copy | ||

| of this software and associated documentation files (the "Software"), to deal | ||

| in the Software without restriction, including without limitation the rights | ||

| to use, copy, modify, merge, publish, distribute, sublicense, and/or sell | ||

| copies of the Software, and to permit persons to whom the Software is | ||

| furnished to do so, subject to the following conditions: | ||

|

|

||

| The above copyright notice and this permission notice shall be included in all | ||

| copies or substantial portions of the Software. | ||

|

|

||

| THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR | ||

| IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, | ||

| FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE | ||

| AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER | ||

| LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, | ||

| OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE | ||

| SOFTWARE. |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,35 @@ | ||

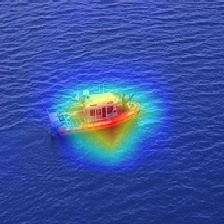

| ## Grad-CAM implementation in Keras ## | ||

|

|

||

| Gradient-weighted class activation maps (Grad-CAM) are a visualization technique for deep learning networks. | ||

|

|

||

| See the paper: https://arxiv.org/pdf/1610.02391v1.pdf | ||

|

|

||

| The paper authors' Torch implementation: https://github.com/ramprs/grad-cam | ||

|

|

||

|

|

||

| This code assumes TensorFlow dimension ordering (channels last) and uses the VGG16 network from keras.applications by default (the network weights are downloaded on first use). | ||

|

|

||

|

|

||

| Usage: `python grad-cam.py <path_to_image>` | ||

|

|

||

|

|

||

| ##### Examples | ||

|

|

||

|   | ||

|

|

||

| Example image from the [original implementation](https://github.com/ramprs/grad-cam): | ||

|

|

||

| 'boxer' (243 or 242 in keras) | ||

|

|

||

|  | ||

|  | ||

|  | ||

|

|

||

| 'tiger cat' (283 or 282 in keras) | ||

|

|

||

|  | ||

|  | ||

|  | ||

|

|

||

|

|

||

|

|
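
The README describes Grad-CAM only in one sentence, so a small NumPy sketch of the core computation may help when reading the rest of this diff. It follows the paper's formulation (average the gradients over the spatial axes to get one weight per channel, take the weighted sum of the feature maps, apply ReLU, then scale). The function name `grad_cam_map` and the toy shapes are assumptions made for illustration; they do not appear in the commit. Note that the `grad_cam` function later in this diff starts its accumulator from `np.ones`, whereas this sketch starts from zero.

```python
import numpy as np


def grad_cam_map(activations, gradients):
    """Combine (H, W, K) activations and gradients into an (H, W) heatmap.

    Hypothetical helper for illustration; not part of the commit.
    """
    # One weight per channel: average the gradients over the spatial axes.
    weights = gradients.mean(axis=(0, 1))  # shape (K,)
    # Weighted sum of the feature maps along the channel axis.
    cam = np.tensordot(activations, weights, axes=([2], [0]))  # shape (H, W)
    # ReLU keeps only locations that push the class score up.
    cam = np.maximum(cam, 0.0)
    # Scale to [0, 1]; an all-zero map is returned unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy stand-ins for the block5_conv3 output of VGG16 (14 x 14 x 512).
rng = np.random.default_rng(0)
acts = rng.random((14, 14, 512)).astype(np.float32)
grads = rng.standard_normal((14, 14, 512)).astype(np.float32)
heatmap = grad_cam_map(acts, grads)
print(heatmap.shape, float(heatmap.min()), float(heatmap.max()))
```

In the real script the activations and gradients come from a Keras backend function rather than random arrays, and the map is resized to the input resolution before being overlaid on the image.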



| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,148 @@ | ||

| from keras.applications.vgg16 import ( | ||

| VGG16, preprocess_input, decode_predictions) | ||

| from keras.preprocessing import image | ||

| from keras.layers.core import Lambda | ||

| from keras.models import Model | ||

| from tensorflow.python.framework import ops | ||

| import keras.backend as K | ||

| import tensorflow as tf | ||

| import numpy as np | ||

| import keras | ||

| import sys | ||

| import cv2 | ||

|

|

||

| def target_category_loss(x, category_index, nb_classes): | ||

| return tf.multiply(x, K.one_hot([category_index], nb_classes)) | ||

|

|

||

| def target_category_loss_output_shape(input_shape): | ||

| return input_shape | ||

|

|

||

| def normalize(x): | ||

| # utility function to normalize a tensor by its root mean square (RMS) | ||

| return x / (K.sqrt(K.mean(K.square(x))) + 1e-5) | ||

|

|

||

| def load_image(path): | ||

| img_path = path  # use the argument instead of re-reading sys.argv | ||

| img = image.load_img(img_path, target_size=(224, 224)) | ||

| x = image.img_to_array(img) | ||

| x = np.expand_dims(x, axis=0) | ||

| x = preprocess_input(x) | ||

| return x | ||

|

|

||

| def register_gradient(): | ||

| if "GuidedBackProp" not in ops._gradient_registry._registry: | ||

| @ops.RegisterGradient("GuidedBackProp") | ||

| def _GuidedBackProp(op, grad): | ||

| dtype = op.inputs[0].dtype | ||

| return grad * tf.cast(grad > 0., dtype) * \ | ||

| tf.cast(op.inputs[0] > 0., dtype) | ||

|

|

||

| def compile_saliency_function(model, activation_layer='block5_conv3'): | ||

| input_img = model.input | ||

| layer_dict = dict([(layer.name, layer) for layer in model.layers[1:]]) | ||

| layer_output = layer_dict[activation_layer].output | ||

| max_output = K.max(layer_output, axis=3) | ||

| saliency = K.gradients(K.sum(max_output), input_img)[0] | ||

| return K.function([input_img, K.learning_phase()], [saliency]) | ||

|

|

||

| def modify_backprop(model, name): | ||

| g = tf.get_default_graph() | ||

| with g.gradient_override_map({'Relu': name}): | ||

|

|

||

| # get layers that have an activation | ||

| layer_dict = [layer for layer in model.layers[1:] | ||

| if hasattr(layer, 'activation')] | ||

|

|

||

| # replace relu activation | ||

| for layer in layer_dict: | ||

| if layer.activation == keras.activations.relu: | ||

| layer.activation = tf.nn.relu | ||

|

|

||

| # re-instantiate a new model | ||

| new_model = VGG16(weights='imagenet') | ||

| return new_model | ||

|

|

||

| def _compute_gradients(tensor, var_list): | ||

| grads = tf.gradients(tensor, var_list) | ||

| return [grad if grad is not None else tf.zeros_like(var) | ||

| for var, grad in zip(var_list, grads)] | ||

|

|

||

| def deprocess_image(x): | ||

| ''' | ||

| Same normalization as in: | ||

| https://github.com/fchollet/keras/blob/master/examples/conv_filter_visualization.py | ||

| ''' | ||

| if np.ndim(x) > 3: | ||

| x = np.squeeze(x) | ||

| # normalize tensor: center on 0., ensure std is 0.1 | ||

| x -= x.mean() | ||

| x /= (x.std() + 1e-5) | ||

| x *= 0.1 | ||

|

|

||

| # clip to [0, 1] | ||

| x += 0.5 | ||

| x = np.clip(x, 0, 1) | ||

|

|

||

| # convert to RGB array | ||

| x *= 255 | ||

| if K.image_dim_ordering() == 'th': | ||

| x = x.transpose((1, 2, 0)) | ||

| x = np.clip(x, 0, 255).astype('uint8') | ||

| return x | ||

|

|

||

| def grad_cam(input_model, image, category_index, layer_name): | ||

| nb_classes = 1000 | ||

| target_layer = lambda x: target_category_loss(x, category_index, nb_classes) | ||

| x = Lambda(target_layer, output_shape = target_category_loss_output_shape)(input_model.output) | ||

| model = Model(inputs=input_model.input, outputs=x) | ||

| model.summary() | ||

| loss = K.sum(model.output) | ||

| conv_output = [l for l in model.layers if l.name == layer_name][0].output | ||

| grads = normalize(_compute_gradients(loss, [conv_output])[0]) | ||

| gradient_function = K.function([model.input], [conv_output, grads]) | ||

|

|

||

| output, grads_val = gradient_function([image]) | ||

| output, grads_val = output[0, :], grads_val[0, :, :, :] | ||

|

|

||

| weights = np.mean(grads_val, axis = (0, 1)) | ||

| cam = np.ones(output.shape[0 : 2], dtype = np.float32) | ||

|

|

||

| for i, w in enumerate(weights): | ||

| cam += w * output[:, :, i] | ||

|

|

||

| cam = cv2.resize(cam, (224, 224)) | ||

| cam = np.maximum(cam, 0) | ||

| heatmap = cam / np.max(cam) | ||

|

|

||

| #Return to BGR [0..255] from the preprocessed image | ||

| image = image[0, :] | ||

| image -= np.min(image) | ||

| image = np.minimum(image, 255) | ||

|

|

||

| cam = cv2.applyColorMap(np.uint8(255*heatmap), cv2.COLORMAP_JET) | ||

| cam = np.float32(cam) + np.float32(image) | ||

| cam = 255 * cam / np.max(cam) | ||

| return np.uint8(cam), heatmap | ||

|

|

||

| preprocessed_input = load_image(sys.argv[1]) | ||

|

|

||

| model = VGG16(weights='imagenet') | ||

|

|

||

| predictions = model.predict(preprocessed_input) | ||

| print(predictions) | ||

| top_1 = decode_predictions(predictions)[0][0] | ||

| print(decode_predictions(predictions)) | ||

| print('Predicted class:') | ||

| print('%s (%s) with probability %.2f' % (top_1[1], top_1[0], top_1[2])) | ||

|

|

||

| predicted_class = np.argmax(predictions) | ||

| print(f"predicted_class: {predicted_class}") | ||

| cam, heatmap = grad_cam(model, preprocessed_input, predicted_class, "block5_conv3") | ||

| cv2.imwrite("gradcam.jpg", cam) | ||

|

|

||

| register_gradient() | ||

| guided_model = modify_backprop(model, 'GuidedBackProp') | ||

| saliency_fn = compile_saliency_function(guided_model) | ||

| saliency = saliency_fn([preprocessed_input, 0]) | ||

| gradcam = saliency[0] * heatmap[..., np.newaxis] | ||

| cv2.imwrite("guided_gradcam.jpg", deprocess_image(gradcam)) |
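
The last lines of the script register a "GuidedBackProp" gradient and multiply the resulting saliency by the Grad-CAM heatmap. As a rough illustration of those two steps outside of a TensorFlow graph, the NumPy sketch below applies the same masking rule as `_GuidedBackProp` and the same broadcasting as `saliency[0] * heatmap[..., np.newaxis]`. The function names `guided_relu_grad` and `guided_grad_cam` are hypothetical and exist only for this example.

```python
import numpy as np


def guided_relu_grad(upstream_grad, relu_input):
    """Guided backprop rule for a ReLU (hypothetical helper).

    A gradient passes only where both the incoming gradient and the
    ReLU's forward input are positive.
    """
    return upstream_grad * (upstream_grad > 0) * (relu_input > 0)


def guided_grad_cam(saliency, heatmap):
    """Weight an (H, W, C) saliency map by an (H, W) heatmap (hypothetical helper)."""
    # The new trailing axis lets the heatmap broadcast over the channels.
    return saliency * heatmap[..., np.newaxis]


grad = np.array([1.0, -2.0, 3.0, 4.0])
x = np.array([0.5, 1.0, -1.0, 2.0])
# Only positions 0 and 3 have a positive gradient and a positive input.
print(guided_relu_grad(grad, x))

saliency = np.ones((224, 224, 3), dtype=np.float32)
heat = np.full((224, 224), 0.5, dtype=np.float32)
print(guided_grad_cam(saliency, heat).shape)
```

The result of the combination still needs a step like `deprocess_image` before it can be written out as an 8-bit image.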


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,34 @@ | ||

| absl-py==0.12.0 | ||

| astor==0.8.1 | ||

| cached-property==1.5.2 | ||

| chrisapp==2.5.3 | ||

| dataclasses==0.8 | ||

| gast==0.4.0 | ||

| grpcio==1.38.0 | ||

| h5py==3.1.0 | ||

| importlib-metadata==4.5.0 | ||

| jedi==0.18.0 | ||

| Keras==2.2.4 | ||

| Keras-Applications==1.0.8 | ||

| Keras-Preprocessing==1.1.2 | ||

| Markdown==3.3.4 | ||

| mock==4.0.3 | ||

| numpy==1.18.4 | ||

| opencv-python==4.2.0.32 | ||

| parso==0.8.2 | ||

| Pillow==8.2.0 | ||

| protobuf==3.17.3 | ||

| pudb==2021.1 | ||

| Pygments==2.9.0 | ||

| PyYAML==5.4.1 | ||

| scipy==1.5.4 | ||

| six==1.16.0 | ||

| tensorboard==1.13.1 | ||

| tensorflow==1.13.2 | ||

| tensorflow-estimator==1.13.0 | ||

| termcolor==1.1.0 | ||

| tk==0.1.0 | ||

| typing-extensions==3.10.0.0 | ||

| urwid==2.1.2 | ||

| Werkzeug==2.0.1 | ||

| zipp==3.4.1 |
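
Because the script uses TensorFlow 1.x graph-mode calls such as `tf.get_default_graph`, the exact pins above matter. The following is a minimal sketch, using only the standard library, of how the pinned versions could be read and sanity-checked before running the script. The helper name `parse_pins` and the inline sample are assumptions for illustration; the sample repeats four pins from the file above.

```python
def parse_pins(text):
    """Return {package_name: version} for every `name==version` line.

    Hypothetical helper; it accepts exact pins only, which is all the
    requirements file in this commit contains.
    """
    pins = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        name, sep, version = line.partition("==")
        if not sep:
            raise ValueError(f"not an exact pin: {line!r}")
        pins[name.strip().lower()] = version.strip()
    return pins


sample = """\
Keras==2.2.4
tensorflow==1.13.2
numpy==1.18.4
opencv-python==4.2.0.32
"""

pins = parse_pins(sample)
# The script depends on TF 1.x graph-mode APIs, so check the major version.
if not pins["tensorflow"].startswith("1."):
    raise RuntimeError("expected a TensorFlow 1.x pin")
print(pins)
```

In practice the text would be read from requirements.txt rather than from an inline string.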