SHAMSUL*: Systematic Holistic Analysis to investigate Medical Significance Utilizing Local interpretability methods in deep learning for chest radiography pathology prediction, Mahbub Ul Alam, Jaakko Hollmén, Jón Rúnar Baldvinsson, Rahim Rahmani, https://doi.org/10.5617/nmi.10471

* "The acronym SHAMSUL, derived from a Semitic word meaning 'the Sun,' serves as a symbolic representation of our heatmap score-based interpretability analysis approach aimed at unveiling the medical significance inherent in the predictions of black box deep learning models."

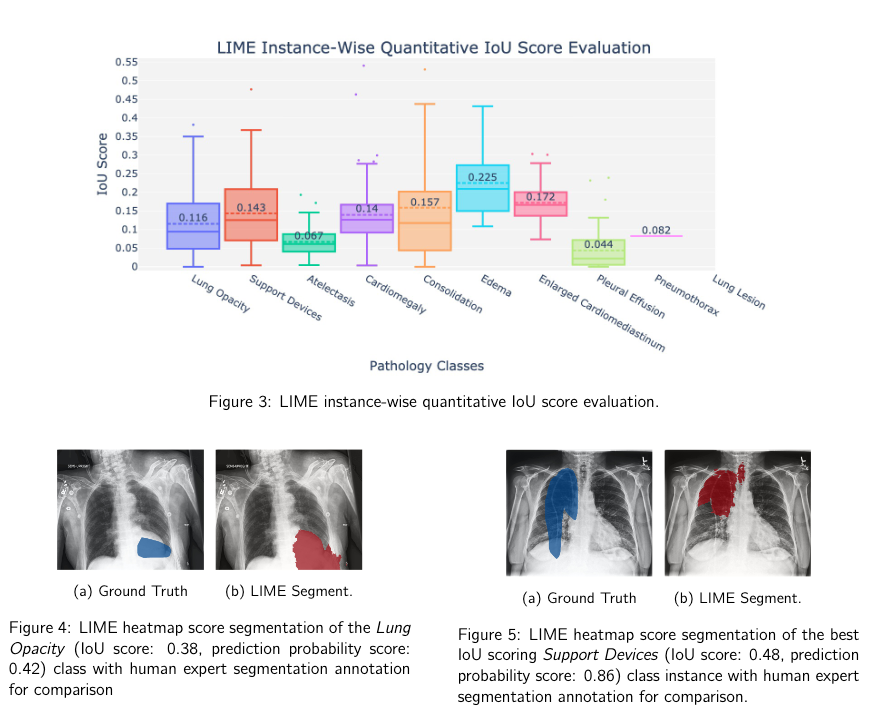

The interpretability of deep neural networks has become a subject of great interest within the medical and healthcare domain. This attention stems from concerns regarding transparency, legal and ethical considerations, and the medical significance of predictions generated by these deep neural networks in clinical decision support systems. To address this matter, our study delves into the application of four well-established interpretability methods: Local Interpretable Model-agnostic Explanations (LIME), SHapley Additive exPlanations (SHAP), Gradient-weighted Class Activation Mapping (Grad-CAM), and Layer-wise Relevance Propagation (LRP). Leveraging the approach of transfer learning with a multi-label-multi-class chest radiography dataset, we aim to interpret predictions pertaining to specific pathology classes. Our analysis encompasses both single-label and multi-label predictions, providing a comprehensive and unbiased assessment through quantitative and qualitative investigations, which are compared against human expert annotation. Notably, Grad-CAM demonstrates the most favorable performance in quantitative evaluation, while the LIME heatmap score segmentation visualization exhibits the highest level of medical significance. Our research underscores both the outcomes and the challenges faced in the holistic approach adopted for assessing these interpretability methods and suggests that a multimodal-based approach, incorporating diverse sources of information beyond chest radiography images, could offer additional insights for enhancing interpretability in the medical domain.
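As a rough illustration of what comparing a heatmap against human expert annotation can look like, the sketch below thresholds a toy heatmap and scores its overlap with an annotated bounding box using intersection over union (IoU). The abstract does not say which metric the paper uses, so IoU, the threshold value, the toy data, and the helper names `threshold_heatmap`, `box_to_mask`, and `iou` are all assumptions made for this example and not the authors' method.

```python
def threshold_heatmap(heatmap, threshold):
    """Binarize a 2D heatmap: 1 where the score is >= threshold, else 0."""
    return [[1 if score >= threshold else 0 for score in row] for row in heatmap]


def box_to_mask(height, width, box):
    """Rasterize a (row_min, col_min, row_max, col_max) box (end-exclusive)."""
    r0, c0, r1, c1 = box
    return [
        [1 if r0 <= r < r1 and c0 <= c < c1 else 0 for c in range(width)]
        for r in range(height)
    ]


def iou(mask_a, mask_b):
    """Intersection over union of two binary masks of equal shape."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            intersection += a & b
            union += a | b
    # Two empty masks have no overlap to measure; report 0.0 by convention.
    return intersection / union if union else 0.0


# Toy 4x4 heatmap standing in for the output of an interpretability method
# (hypothetical values, not taken from the paper).
heatmap = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.9, 0.8, 0.1],
    [0.1, 0.7, 0.6, 0.0],
    [0.0, 0.1, 0.6, 0.0],
]
expert_box = (1, 1, 3, 3)  # hypothetical expert-annotated region

predicted_mask = threshold_heatmap(heatmap, threshold=0.5)
expert_mask = box_to_mask(4, 4, expert_box)
score = iou(predicted_mask, expert_mask)
print(f"IoU between heatmap and annotation: {score:.2f}")
```

A higher score means the highlighted region agrees more closely with the annotated pathology location. The choice of threshold strongly affects the result, so any real comparison would need to fix or sweep it consistently across methods.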

@article{alam2023shamsul,
  title={SHAMSUL: Systematic Holistic Analysis to investigate Medical Significance Utilizing Local interpretability methods in deep learning for chest radiography pathology prediction},
  author={Ul Alam, Mahbub and Hollmén, Jaakko and Baldvinsson, Jón Rúnar and Rahmani, Rahim},
  journal={Nordic Machine Intelligence},
  volume={3},
  number={1},
  pages={27--47},
  year={2023},
  doi={10.5617/nmi.10471}
}