#Dependency

#Reading Material

- Sutton's book

- Karpathy's blog

- David Silver's lecture on Policy Gradient

#Current Version

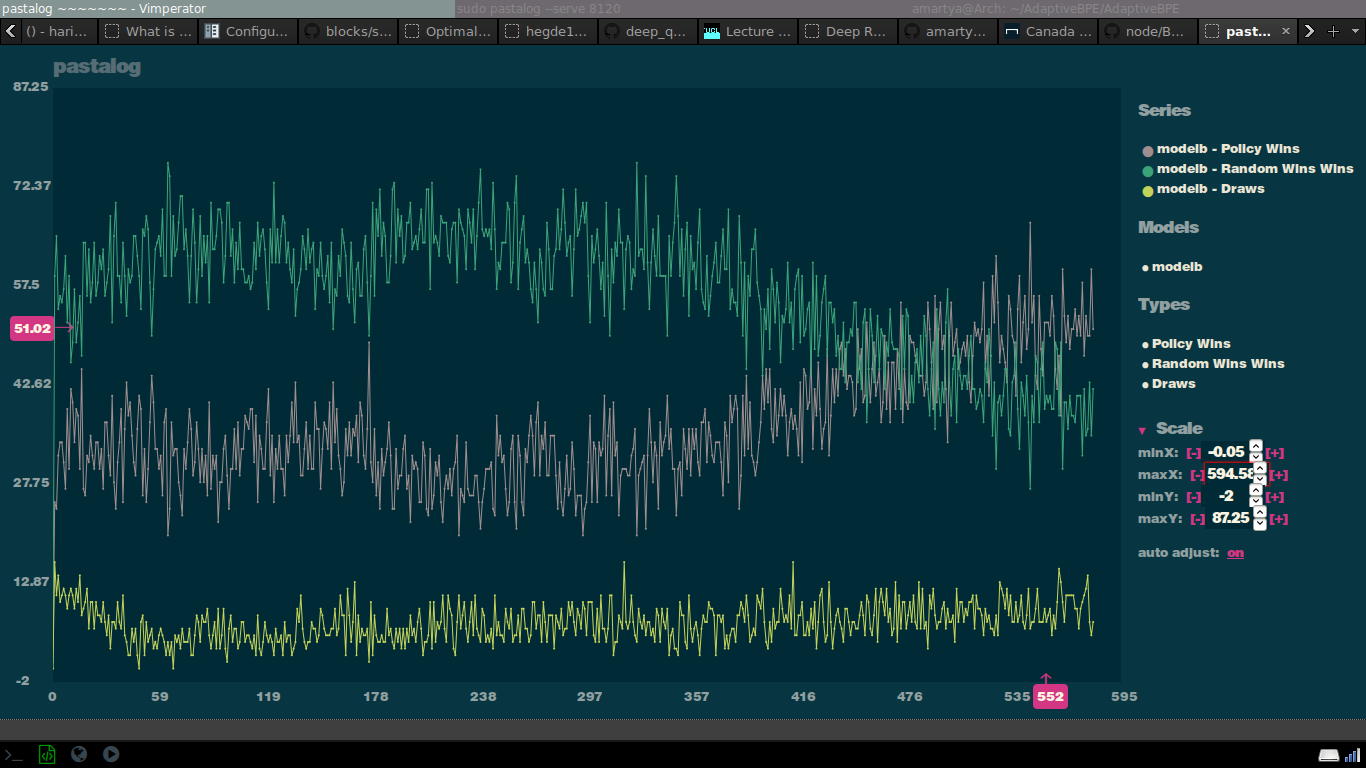

Right now it supports training a Policy Agent against a Random Agent until it can beat it. As long as draws were not penalized, the Policy Agent didn't learn to beat the Random Agent, so I introduced a term that gives a negative reward (a penalty) when the game is drawn.
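
To make the draw penalty concrete, here is a minimal sketch of how a terminal reward with a draw term could look. The names (`terminal_reward`, `discounted_returns`, `draw_penalty`) and the numeric values (+1, -1, -0.5, the discount `gamma`) are assumptions for illustration only, not the values used in this repo.

```python
from typing import List

# Hypothetical outcome labels, seen from the Policy Agent's side.
WIN, LOSS, DRAW = "win", "loss", "draw"


def terminal_reward(outcome: str, draw_penalty: float = -0.5) -> float:
    """Reward handed to the Policy Agent when a game ends.

    draw_penalty is an assumed value; setting it to 0.0 means
    draws are not penalized at all.
    """
    if outcome == WIN:
        return 1.0
    if outcome == LOSS:
        return -1.0
    if outcome == DRAW:
        return draw_penalty
    raise ValueError(f"unknown outcome: {outcome!r}")


def discounted_returns(num_moves: int, final_reward: float,
                       gamma: float = 0.99) -> List[float]:
    """Return for each of the agent's moves when only the last move is rewarded.

    Earlier moves receive the final reward discounted by gamma per step,
    which is the quantity a policy gradient update weights each move by.
    """
    return [final_reward * gamma ** (num_moves - 1 - t) for t in range(num_moves)]


if __name__ == "__main__":
    # A drawn game of 3 moves: every move gets a negative return.
    print(discounted_returns(3, terminal_reward(DRAW)))
    # The same game with the penalty removed: every return is zero.
    print(discounted_returns(3, terminal_reward(DRAW, draw_penalty=0.0)))
```

With a penalty of 0.0, a drawn game contributes no learning signal, so drawing is never discouraged; a negative value pushes the policy away from moves that lead to draws.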

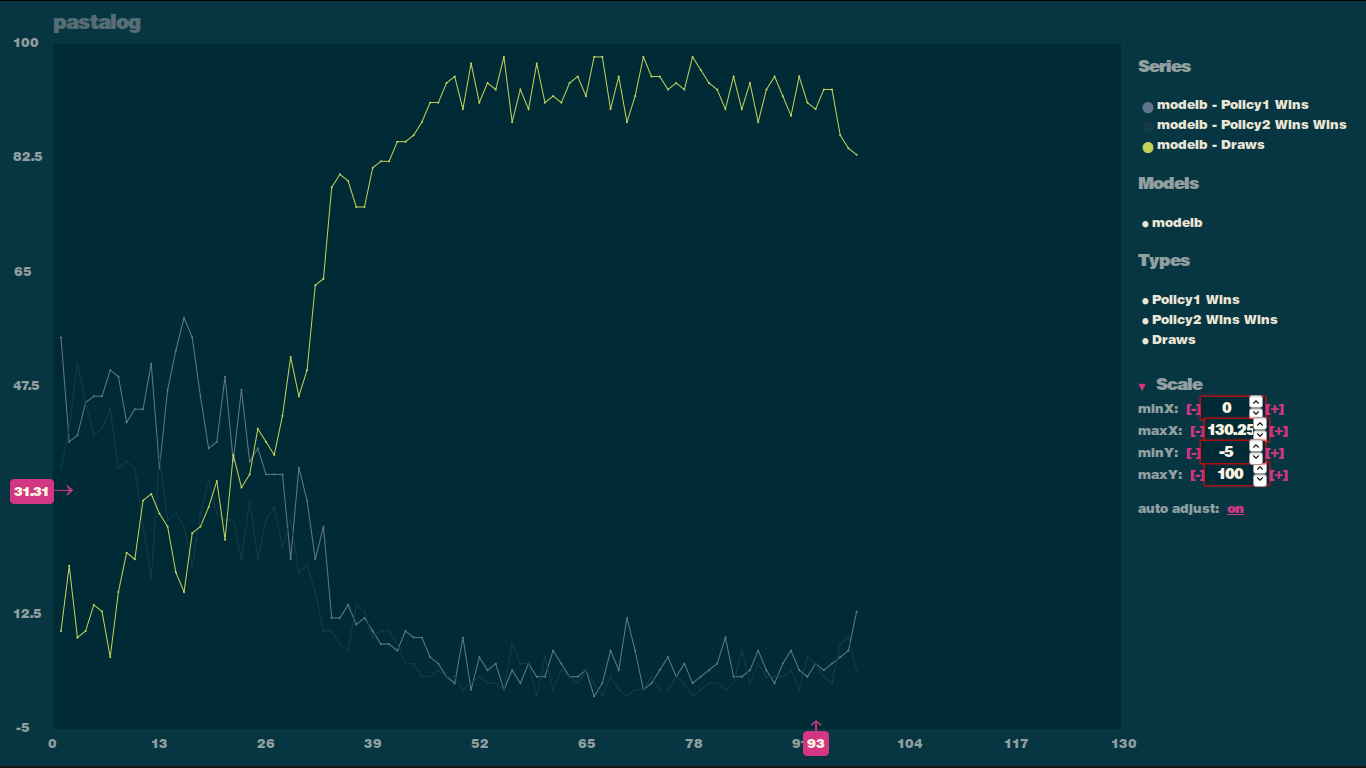

I then set the agents up to play against each other. With the draw penalty retained, the number of draws eventually went to zero and both players won an equal number of games. So I decided to see what happens if I remove the draw penalty, and they then drew all the games. Interesting, eh?