This project details the development of an autonomous robot that identifies and manipulates specific objects within a given space using computer vision and path planning. Designed as part of the CS5335/4610 course, the robot integrates mechanical design, software, sensing, and real-time control.

The primary goal of this project is to build a robotic system that autonomously identifies objects from a predefined list and manipulates them by moving them to a designated area. This required both hardware setup and software development so that the robot can operate and interact with objects in dynamic environments.

- Base Vehicle: 2-wheel drive robot controlled by an Arduino Uno.

- Sensing: Xbox Kinect camera for RGB and depth image capture.

- Manipulation Mechanism: Barriers that stabilize and manipulate objects while the robot moves.

- Main Control Script: Python script for high-level operations including object detection, path planning, and robot control.
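Because the base is a two-wheel (differential) drive, high-level motion commands from the Python script eventually have to become per-wheel speeds on the Arduino. The sketch below shows the standard differential-drive conversion. The names `DiffDriveParams`, `twist_to_wheel_speeds` and `to_pwm`, the wheel radius and track width, and the signed-PWM convention are all hypothetical, chosen for illustration; they are not taken from this repository.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DiffDriveParams:
    wheel_radius_m: float  # hypothetical; measure on the real robot
    track_width_m: float   # distance between the two drive wheels
    max_pwm: int = 255     # Arduino Uno analogWrite() accepts 0-255


def twist_to_wheel_speeds(v: float, omega: float, p: DiffDriveParams) -> tuple[float, float]:
    """Convert body velocity (v in m/s, omega in rad/s) to (left, right) wheel speeds in rad/s."""
    v_left = v - omega * p.track_width_m / 2.0   # linear speed of the left wheel rim
    v_right = v + omega * p.track_width_m / 2.0  # linear speed of the right wheel rim
    return v_left / p.wheel_radius_m, v_right / p.wheel_radius_m


def to_pwm(wheel_speed: float, max_wheel_speed: float, p: DiffDriveParams) -> int:
    """Scale a wheel speed to a signed PWM value in [-max_pwm, max_pwm].

    Assumed convention: the sign selects motor direction, the magnitude is the duty cycle.
    """
    ratio = max(-1.0, min(1.0, wheel_speed / max_wheel_speed))
    return int(round(ratio * p.max_pwm))
```

A pure rotation (`v = 0`) yields equal and opposite wheel speeds, which is how a two-wheel base turns in place.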

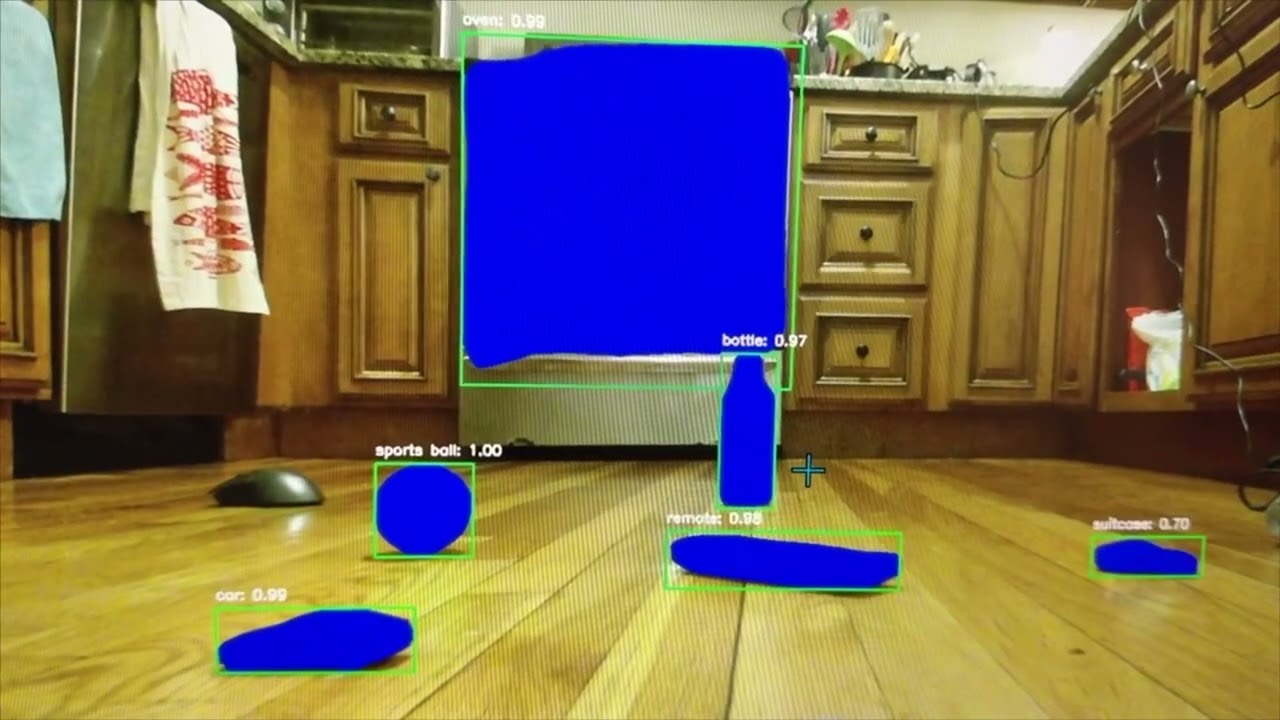

- Object Detection and Vision Processing:

  - Vision System: Uses RGB and depth images from the Kinect.

  - Model: Mask R-CNN with a ResNet-50-FPN backbone, fine-tuned on an RGB-D dataset.

  - Libraries: PyKinect2 for interfacing with the Kinect, PyTorch and TorchVision for model operations, and OpenCV for image processing.
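Mask R-CNN returns per-object masks in image coordinates, while path planning needs a location in space. One common way to combine the two is to take the mask's centroid and the depth inside the mask and back-project with the pinhole camera model. This is a minimal sketch under stated assumptions: the depth frame is already registered to the image the mask came from, depth is in millimetres with 0 meaning "no reading", and the intrinsics are placeholders. `Intrinsics` and `mask_to_point` are hypothetical names, not the project's code.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Intrinsics:
    fx: float  # focal lengths in pixels (placeholder values; calibrate the sensor)
    fy: float
    cx: float  # principal point in pixels
    cy: float


def mask_to_point(mask: list[list[bool]], depth_mm: list[list[int]], k: Intrinsics):
    """Return the (X, Y, Z) camera-frame point in metres for one object mask, or None.

    Uses the mask's pixel centroid and the median of valid (non-zero) depth readings
    inside the mask, then applies X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy.
    Assumes depth_mm is aligned pixel-for-pixel with mask.
    """
    us, vs, zs = [], [], []
    for v, row in enumerate(mask):
        for u, inside in enumerate(row):
            if inside:
                us.append(u)
                vs.append(v)
                if depth_mm[v][u] > 0:  # 0 marks a missing depth reading
                    zs.append(depth_mm[v][u])
    if not us or not zs:
        return None
    u_c = sum(us) / len(us)
    v_c = sum(vs) / len(vs)
    z = median(zs) / 1000.0  # median is robust to edge pixels that hit the background
    return ((u_c - k.cx) * z / k.fx, (v_c - k.cy) * z / k.fy, z)
```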

- Path Planning:

  - Algorithm: Rapidly-exploring Random Tree (RRT).

  - Execution: Paths are computed in real time to maneuver towards designated objects and manipulate them.
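RRT grows a tree from the start by repeatedly sampling a random point, finding the nearest tree node, and stepping a fixed distance towards the sample if that segment is collision-free; it stops once a node lands close enough to the goal. The sketch below is a minimal 2D version treating the robot as a point. The workspace bounds, step size, goal tolerance, goal-bias probability, and the single circular obstacle are assumptions for illustration; the project's own implementation and parameters may differ (in practice, obstacles would be inflated by the robot's radius).

```python
import math
import random


def segment_clear_of_circle(center, radius):
    """Build an is_free(p, q) checker for one circular obstacle (a stand-in obstacle model)."""
    def is_free(p, q):
        (px, py), (qx, qy), (cx, cy) = p, q, center
        dx, dy = qx - px, qy - py
        seg_len_sq = dx * dx + dy * dy
        # Parameter of the point on segment pq closest to the circle centre, clamped to [0, 1].
        t = 0.0 if seg_len_sq == 0 else max(0.0, min(1.0, ((cx - px) * dx + (cy - py) * dy) / seg_len_sq))
        return math.dist((px + t * dx, py + t * dy), (cx, cy)) > radius
    return is_free


def rrt(start, goal, is_free, bounds, step=0.2, goal_tol=0.3,
        max_iters=5000, goal_bias=0.05, rng=None):
    """Basic RRT in 2D. Returns a list of points from start to goal, or None on failure.

    bounds = (xmin, xmax, ymin, ymax); is_free(p, q) is True if segment p->q is collision-free.
    """
    rng = rng or random.Random()
    nodes = [start]
    parent = {0: None}
    xmin, xmax, ymin, ymax = bounds
    for _ in range(max_iters):
        # Sample uniformly, but occasionally sample the goal itself to speed convergence.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
        # Nearest existing node (linear scan; adequate for small trees).
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0:
            continue
        # Steer at most `step` towards the sample.
        t = min(1.0, step / d)
        new = (near[0] + t * (sample[0] - near[0]), near[1] + t * (sample[1] - near[1]))
        if not is_free(near, new):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if math.dist(new, goal) <= goal_tol and is_free(new, goal):
            # Walk parent links back to the root, then reverse to get start -> goal.
            path = [goal]
            i = len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None
```

For example, `rrt((0.0, 0.0), (4.0, 4.0), segment_clear_of_circle((2.0, 2.0), 0.5), (0.0, 5.0, 0.0, 5.0), rng=random.Random(0))` plans around an obstacle placed on the straight line to the goal. RRT paths are feasible but jagged, so a smoothing or shortcutting pass is often applied before driving them.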

- Python 3.x

- Arduino IDE

- Libraries: PyKinect2, PyTorch, TorchVision, OpenCV, PyQtGraph
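Several of these libraries are imported under a different name than the one they are listed under (OpenCV, for instance, is imported as `cv2`). A quick way to confirm an environment is complete is to look up each top-level module with `importlib.util.find_spec`, which locates a module without importing it. This is a hypothetical helper, not a script shipped with the repository.

```python
from __future__ import annotations

import importlib.util

# Display name -> top-level import name.
REQUIRED = {
    "PyKinect2": "pykinect2",
    "PyTorch": "torch",
    "TorchVision": "torchvision",
    "OpenCV": "cv2",
    "PyQtGraph": "pyqtgraph",
}


def missing_packages(required: dict[str, str]) -> list[str]:
    """Return the display names of packages whose top-level module cannot be found."""
    return [name for name, module in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    print("All dependencies found." if not missing else f"Missing: {', '.join(missing)}")
```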

- Clone the repository:

`git clone https://github.com/VaradhKaushik/CS5335-4610-Project.git`

- Install the required Python libraries:

`pip install -r requirements.txt`

- Set up the Arduino environment according to the schematics provided in the `Arduino_Omega` folder.

- Connect the Kinect and Arduino to your system.

- Run the main script to start the robot:

`python main.py`

In demonstrations, the robot identified objects placed at random positions in the environment and manipulated them. The demonstration covers the object detection, navigation, and object manipulation sequences.
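The demonstrated behaviour runs as a sequence of phases: detect a target, navigate to it, then manipulate it into the designated area. A small state machine is one way to organise that sequence in a control loop. This is a hypothetical sketch: `Phase`, `next_phase`, and the three boolean inputs are illustrative names, and the rule of falling back to detection when the target is lost is an assumed design choice, not documented behaviour of `main.py`.

```python
from __future__ import annotations

from enum import Enum, auto


class Phase(Enum):
    DETECT = auto()      # look for a target object from the predefined list
    NAVIGATE = auto()    # follow the planned path to the target
    MANIPULATE = auto()  # move the object into the designated area
    DONE = auto()


def next_phase(phase: Phase, target_found: bool, at_target: bool, in_goal_area: bool) -> Phase:
    """Advance the task sequence based on the latest perception results."""
    if phase is Phase.DETECT:
        return Phase.NAVIGATE if target_found else Phase.DETECT
    if phase is Phase.NAVIGATE:
        if not target_found:
            return Phase.DETECT  # target lost: re-detect (and re-plan) before moving on
        return Phase.MANIPULATE if at_target else Phase.NAVIGATE
    if phase is Phase.MANIPULATE:
        return Phase.DONE if in_goal_area else Phase.MANIPULATE
    return Phase.DONE
```

Keeping the transition logic in a pure function like this makes it easy to test without the Kinect or the Arduino attached.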

Click the image to watch the project demonstration!

- Hardware Weight Management: Supplementary wheels were added to support the robot's weight.

- Software Compatibility: Switched from Conda to Python virtual environments to resolve package conflicts.

Future iterations may add real-time video processing, more consistent locomotion, and more sophisticated object-manipulation mechanisms.

- Varadh Kaushik

- Shrey Patel

- Michael Ambrozie

This project is licensed under the MIT License - see the LICENSE.md file for details.