Status update for all. Please populate your README with these:

-

What are you working on?

-

What’s your implementation language?

-

What have you done so far? Did you meet your expectations for this week yet?

-

What do you hope to accomplish by next week? How? (Who’s doing what, if this is a team?)

-

What do you need to move forward?

Also: Looking for a target language? How about JVM?

Also: Get motivated

Don’t forget Hofstadter’s law!

If you’re parsing an arbitrary grammar, may I suggest using a parsing tool:

How to optimize

What to optimize

When to optimize

Later, because: Premature optimization is the root of all evil. — Donald Knuth

Also: Needs more jpeg

Did you know Grace Hopper gave compilers their name? What she was referring to was what is now known as linkers and loaders.

Develop for Android? Use Proguard is like gc for linking. It removes un-referenced objects from classfiles.

For the remaining labs, we’ll update each other on our progress.

During each lab period, each team should have answers to these questions.

-

What did you do? (Nothing isn’t a good answer to that question)

-

Did you achieve what you set out to achieve from the prior week?

-

What are you struggling with or what obstacles do you face?

-

What do you plan to achieve by next week?

-

9am: Starbucks 283 Longwood Ave, Boston, MA 02115

-

11am: J.P. Licks Mission Hill 1618 Tremont St Boston, MA 02120

For best results, please don’t go it alone. Groups of 2-5 work best. Don’t be shy, but we’ll skip the icebreaker. :-)

See June 19 for some lame ideas.

Remember the goal: grammars, pointers and recursion, oh my! :-)

Discuss project ideas, and write them up in README or in your issue tracker.

Yes, everybody does this, not just the team lead. If the project has one central repo, then every push forces a merge, which is a bad thing. Either have separate repos or separate branches to push to.

-

Be sure to add

lawrancejto your collaborators (Go to: Settings → Collaborators), so I can see your repo. -

Clone your repo (

git clonethe SSH URL) -

Go into your repo (

cdyour-repo) -

Add your friend’s remote (

git remote addyour-friends-name their-url) -

Fetch their stuff (

git fetch --all)

For our project, we’ll begin after we’re done with our common labs (they’re done!). You’re welcome to work with as few or as many people as you wish either in this section or others. Start thinking about which of these you’d like to do, or suggest new ideas.

You’re welcome to pursue these traditional project ideas of implementing a small language:

-

Implement some moderately simple language, like say, Cool, LISP, Kaleidoscope or PL/0.

-

Something compiler-related that dovetails nicely with Senior project (e.g., a textual command interface to some game, web or mobile app).

These ideas are also welcome:

-

A parser combinator library to target multiple parsing strategies (derivative, shift-reduce, or recursive descent parsing).

These projects build upon tools like clang (for C/C++), JDT (for Java), Python’s ast module to do work:

-

Automated refactoring tool for existing languages to serve education and large projects

-

Search engine for identifiers and literals in code that makes good recommendations, (e.g., PageRank)

-

Something like Quickcheck, but can generate characterization tests automatically and efficiently.

Here’s some starter code:

Also, esoteric languages are good for laughs:

-

ArnoldC (it’s not a tumor!)

Perform static analysis of Java code.

-

Fork and clone jdt-project.

-

Next, add JAVA_HOME to your environment variables:

Windows: Search for "environment variables" and click 'Edit the system environment variables'. Click 'Environment Variables…' → 'New…'

Variable name: JAVA_HOME

Variable value: C:\Program Files\Java\jdk1.8.0_05 (or whatever version you’re using)

-

Click OK, OK, OK.

Close and reopen Git Bash. If you get the same error, try turning it off and on again

-

Import the project into eclipse.

git clone [email protected]:lawrancej/jdt-project.git cd jdt-project ./gradlew eclipse

'File' → 'Import' → 'General' → 'Existing projects into workspace'

-

Read through the code. Open Main and run it. Nothing will happen. You’ll need to supply the root folder of a Java project to main.

Go to 'Run Configurations' → 'Main' → 'Arguments' → 'Program arguments'. Enter the path to a Java project. Click 'Run'. If you have no other Java projects, you can supply the source of jdt-project to itself. Huzzah!

-

Modify AstVisitor to do one of the following (pick one):

Modify ASTVisitor subclass to do something interesting.

For example:

-

Generate UML class diagram for source code (Show members of classes) See this for insipration

-

Generate a graph of class dependencies (Type uses Types) See this for inspiration

-

Generate a graph of package dependencies (Package uses Packages)

-

Generate a graph of method dependencies (Method uses Methods)

-

Generate a graph of class inheritance / interface implementation

-

Suggest some other graph-related static analysis

Pull from me: git pull upstream master

Take a look at an old midterm for practice purposes: start exams/Midterm1.pdf

Had any difficulty? Let’s discuss.

- Bootstrapping

-

Making a compiler "self-hosting" so that the compiler can be written in the language it compiles. The first C compiler was written in a different language.

- Nondeterminism

-

Having more than one option about which state to visit next.

- Ambiguity

-

Having more than one parse tree for a given input.

SSA is a transformation on code that is a prerequisite for many low-level optimizations, such as dead/duplicate code elimination. Think of it like version control for variables. Each variable gets a new version number when an assignment is made, hence single assignment. If we have multiple branches (i.e., loops or conditionals), we need to merge different variable versions together (denoted by the phi function).

Pseudocode |

SSA form |

Basic block: a = 5 a = a + 10 print a |

SSA Basic block: a_0 = 5 a_1 = a_0 + 10 print a_1 |

Conditional a = 5

if (a < 10) {

a++

} else {

a--

}

a = a * 2

print a

|

SSA conditional a_0 = 5

if (a_0 < 10) {

a_1 = a_0 + 1

} else {

a_2 = a_0 - 1

}

a_3 = phi(a_1,a_2) * 2

print a_3

|

Ah memory management. Regardless of how it happens, it must happen, unless you like leaking memory.

It helps to remember modern computer systems give us three kinds of memory:

-

Static memory

-

Stack memory

-

Heap memory

Static memory is pretty straighforward: it’s a chunk of memory that comes and goes with the program itself, and thus does not grow or shrink over the lifetime of the program. Stack memory is managed using, ahem, a stack. (Who’da thunk it?)

When we think of memory management, we’re almost certainly thinking about the heap: dynamically-allocated memory from the operating system with no pre-set lifespan. Therefore, either the programmer has to specify when the memory is no longer needed, or we can rely on garbage collector to clean up after our mess.

Garbage collection algorithms must know the difference between pointer and an integer. This is why C doesn’t have it. Just kidding, you can do garbage collection in C, but it must be conservative: it can’t make guarantees that it collected all the garbage.

- Strategy

-

Just count how many things point to this object, and when that count drops to 0, free the object.

- Pros

-

-

Simple to implement

-

Reasonably fast

-

Reasonably good (if Python uses it, it must be somewhat good)

-

- Cons

-

-

Now, every object has to have an extra integer just for the reference count.

-

What happens when you got two objects pointing to each other (like in a circular linked list)? Crap! The reference count never drops to zero, that’s what!

-

There’s many variations of tracing (mark-sweep) garbage collection.

- Strategy

-

-

Maintain a root set (a set of objects reachable throughout the program and in the current scope of the program).

-

Traverse (trace) the object graph starting from the root set, looking for garbage (objects unreachable from the root set)

-

- Pros

-

-

This can deal properly with all garbage, including circular linked lists that nobody else references

-

No space overhead of reference counts

-

- Cons

-

-

Naive implementations are slow, and briefly hang programs

-

Not what you’d use when precise timing is important (e.g., launching a rocket, autonomous cars)

-

Essentially, this algorithm is what gave garbage collection its bad reputation

-

- Naive mark sweep

-

Tracing garbage collection that runs when we’re out of memory, and stops the program during garbage collection.

- Concurrent/incremental mark sweep

-

The program still runs during GC (which happens in a separate thread), but marked objects are locked as necessary.

- Generational

-

Most objects on the heap are short-lived: they’re dynamically allocated and freed almost right away. Other objects, fewer in number, live long, productive and happy lives. This form of GC moves reachable objects between two or more memory pools called generations, without touching garbage.

|

Note

|

Good compilers will optimize away as much heap allocation as possible using escape analysis, checking at compile time to see if an object could be referenced outside a function. If not, allocate on the stack. |

Before we begin… Will it optimize? Programming language inventor or serial killer? Also, The programming language network

A map among identifiers, scopes and other information (e.g., its type, where it’s defined).

-

In an interpreter, these can be used for data storage.

-

In a compiler, these are used to generate code.

Type checking ensures that no types are mismatched.

- Strong vs. weak typing

-

How rigidly types are enforced? Strongly-typed languages enforce types rigidly (e.g., Haskell, Rust, Python). Weakly-typed languages allow some implicit mismatched type coercion (e.g., PHP, C).

- Dynamic vs. Static typing

-

When does type-checking happen? Dynamically-typed languages check type mismatches at run-time (e.g., Python, Ruby, Javascript). Statically-typed languages check type mismatches at compile-time (e.g., Java, C++, Haskell), by traversing (and decorating) the AST.

How to get this wrong: Useing you’re types good

- Globals

-

Memory that comes preallocated with the program (i.e., global constants or variables, the

dataarea in assembly). - Stack

-

Memory allocated on the stack frame (i.e., local variables in a function). Deallocation happens on function exit.

- Heap

-

Dynamically-allocated memory (i.e., memory allocated with

newormalloc). Deallocation happens either manually withdeleteorfree, or with a garbage collector. Rust tracks ownership in the type system, allowing the type checker to determine where to place deallocation code at compile time.

Optimize your compiler and interpreter developed in Lab 3.

-

Modify CommandNode so that it includes a counter (presumably an int or the like).

-

Modify the parser a bit so that it only emits a command node after it has encountered a full run of the same command. (e.g., ----- becomes CommandNode(\'-', 5))

-

Modify the interpreter and compiler accordingly.

In short: do an optimization that performs run-length encoding on Brainfuck code.

Then, optimize away certain loops (e.g., [-] or [+]) with a CommandNode to assign zero to the current memory location.

-

Modify

Commandto include another command type:ZERO -

Modify the

CommandNodeconstructor -

Either traverse through the tree with an Optimizer visitor to do replacements, preprocess the input to replace

[-]or[+]with new node types, or in the recursive call toparse, check theLoopthat we get and emit the properCommandNode

Use peek to check when to add a command node to the current container.

You can tell the optimizer is working if the code your compiler generates includes numeric literals, and the printer and interpreter still work.

Test out your old brainfuck interpreter on src/99bottles.bf and compare it with your optimized brainfuck interpreter. Is it faster?

Lab 3 has a new test program, echo.bf that just prints out what you type when run.

rot13.bf may not actually work as advertised, derp.

Let’s talk about quines. And quine relays.

I added quine.bf to test lab 3 using the is-lab-2-done.sh script because I’m that lazy.

git commit -am "WIP" # Commit your stuff if you need to git fetch upstream git merge upstream/lab3 git mergetool # if you see a CONFLICT

-

Copypasta the

Printervisitor insrc/brainfuck.cpp. -

Rename it to

Compiler. -

Instead of printing out Brainfuck code, print out equivalent code for a different language. For languages that need it (e.g., Java), pick a name for your program class (e.g.,

Default).

For example, in Java:

./brainfuck.exe echo.bf > Default.java # Translate brainfuck to java javac Default.java # Compile translated Java code java Default # Run translated Java bytecode (it should do what echo.bf does)

Done!

For example, to C:

git fetch upstream git merge upstream/brainfuck2c git mergetool cd src g++ brainfuck.cpp -o brainfuck.exe ./brainfuck.exe echo.bf > echo.c # translate brainfuck to C gcc echo.c -o myecho.exe myecho.exe # The compiled executable form of echo.bf

|

Note

|

Read Read through chapter 5. |

|

Note

|

In Lab 3, use cin.get(mumble) to read in a char, cin >> mumble ignores spaces.

|

A parser generator is a tool that takes a grammar specification in a file, and produces parse code.

There are many of them. Each has severe limitations. Since these require a grammar spec, you need to understand the grammar’s grammar.

Here’s one for Java. ANTLR

There are many of them.

The parser code isn’t a separate tool, it’s a library you embed in your program. So, you specify a grammar in your code, and let the library do the parse for you.

Examples: Spirit, Parsec

Go ahead and fetch and merge from me (don’t forget to commit your work first):

cd ~/COMP603-2015 git fetch upstream # Unable to merge? Stage and commit your changes git merge upstream/master git merge upstream/lab3 # Have a CONFLICT? git mergetool

Do you have Visual Studio or Code Blocks or XCode installed?

The starter code, src/brainfuck.cpp, is in C++.

Pull from upstream and study in-class/AST.java. Play code golf.

See updated lab description and hints below.

Also, your favorite language sucks, and here’s why.

Go ahead and pull from me:

cd ~/COMP603-2015 git pull upstream master

Do you have Visual Studio or Code Blocks or XCode installed?

The starter code, src/brainfuck.cpp, is in C++.

Modify src/brainfuck.cpp to parse Brainfuck using recursive descent.

Brainfuck’s LL(1) grammar is:

Program -> Sequence Sequence -> Command Sequence Sequence -> Loop Sequence Sequence -> any other character, ignore (treat as a comment) Sequence -> "" (empty string) Command -> '+' | '-' | '<' | '>' | ',' | '.' Loop -> '[' Sequence ']'

Brainfuck in EBNF is:

Program -> Sequence Sequence -> ( Command | Loop | Comment ) * Command -> '+' | '-' | '<' | '>' | ',' | '.' Loop -> '[' Sequence ']' Comment -> any character other than '+' | '-' | '<' | '>' | ',' | '.' | '[' | ']'

The parser will probably be no longer than 20-30 lines; the solution is shorter than the problem statement.

To read characters in a loop, while(file >> c) { … }

If your `C` is rusty, see the http://www.cplusplus.com/reference/[C Reference].

Write the recursive descent parser using any of these strategies:

-

Write

parserecursively. -

Use mutually recursive functions as done in

in-class/RecursiveDescent.java. For each nonterminal in the grammar, write a function with the name of the nonterminal. Peek at the next character and figure out which production (rule) to apply based on the first and/or follow sets. -

Maintain an explicit stack of nodes inside the existing

parsefunction. -

Use an implicit stack by modifying

Nodeto include a pointer to aparentNode.

|

Note

|

Your parser cannot avoid using recursion or a stack (implicit or explicit). Don’t even. |

You are done if your program builds a tree structure correctly.

You need to place child nodes into the appropriate Container.

This means Program at the top-level, and inside a new Loop in the appropriate spots.

To check your implementation, use the is-lab2-done.sh script, or compare program output with input.

The program traverses the tree your parser built and prints it out with the Printer visitor.

If the program shows any discrepancy between the program output and input, it means your parser formed the tree improperly.

Of course, printing out the input file without forming a tree fools the script, but nobody else.

cd ~/COMP603-2015 cd src g++ -o brainfuck.exe brainfuck.cpp brainfuck.exe helloworld.bf chmod +x is-lab2-done.sh ./is-lab2-done.sh

LR(k) means Left to right, Rightmost derivation, with k tokens of lookahead.

LR(k) grammars are a subset of the context-free grammars, and a proper superset of the LL(k) grammars (the LL(k) grammars are a proper subset of the LR(k) grammars). For a grammar to be LR(k):

-

It must be unambiguous

LR(k) grammars can be parsed using 'shift-reduce'.

Shift-reduce parsing is also known as bottom up parsing, because the parser works from the terminals up to the starting nonterminal. A shift-reduce parser shifts terminals onto a stack, and reduces the stack to a nonterminal when the stack matches the right hand side of a production (rule). Programmers rarely write shift-reduce parsers by hand, and use parser generators or parser combinators instead.

Pull from me.

cd ~/COMP603-2015 git pull upstream master # Windows start responses/may-18.txt # Mac open -e responses/may-18.txt

Open responses/may-18.txt in your local repository.

Modify the file to answer the questions.

git commit -am "I got this." git push origin master

- First set

-

the set of terminals (excluding empty string) that can appear first in any derivation of a nonterminal.

- Follow set

-

the set of terminals (ecluding empty string) that can appear first after derivation of a nonterminal.

LL(k) means parse from Left to right, Leftmost derivation, with at most k tokens of lookahead.

LL(k) grammars are a subset of the context-free grammars. For a grammar to be LL(k):

-

The first and follow sets for each nonterminal must be disjoint

-

It must be unambiguous

-

No left-recursion is allowed

-

No common prefixes on the right hand side are allowed

LL(k) grammars can be parsed using 'recursive descent'.

Recursive descent parsing is also known as top-down parsing, because the parse starts from the starting nonterminal.

Each nonterminal is a function, and the first and follow sets determine which production (rule) to choose.

See in-class/RecursiveDescent.java for an example recursive descent parser.

Challenge: What’s the parse tree for int a = 5; using the C Grammar?

Hint: It’s a declaration.

Do this individually, or in pairs.

|

Note

|

If working in a pair, run ./main.sh from your repo. Log in and click on the added collaborator link.

Then, go to the next page and copy the command line instructions.

|

-

Choose a single compiler implementation to review (suggestions welcome!)

-

Identify which files/functions are responsible for each phase in the compiler source (scan/lex/tokenize, parse, AST, optimization, code generation).

-

What was the most ridiculous thing you found? (funny comments? awful code?)

-

Take notes along the way (if you find something that’s unrelated to a compiler phase, try to infer what it’s doing).

-

Write up your findings in a short document and post to your repository (no more than two pages, please). For example:

git add findings.txt git commit -m "Lab 1 findings." git push origin master

Try to get this done today.

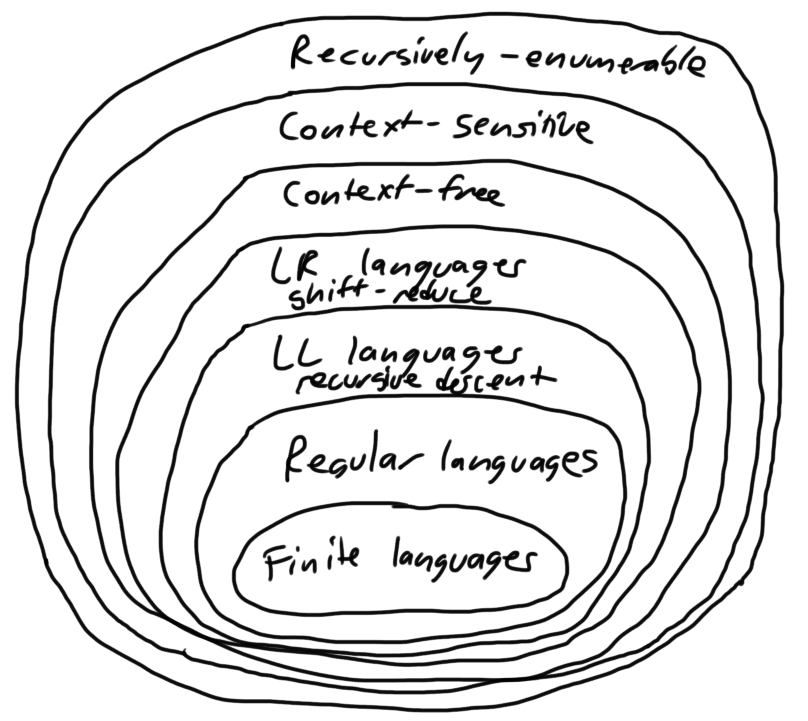

The Chomsky hierarchy is a containment hierarchy of languages. Restrictions placed on grammar production rules (or the underlying automaton) distinguish among language categories.

| Language category | Restrictions on grammar productions | Equivalent automaton |

|---|---|---|

Recursively-enumerable |

None. Sequences of terminals and non-terminals may derive sequences of terminals and nonterminals. |

Finite automaton with infinite tape (Turing machine) |

Context-sensitive |

The same context (terminals or nonterminals) surrounds both sides of the nonterminal on the left, and the derivation on the right. |

Finite automaton with finite tape (Linearly-bounded Turing machine) |

Context-free |

A nonterminal derives sequences of terminals and nonterminals. |

Finite automaton with a stack (Pushdown automaton) |

LR |

Context-free but forbids ambiguity. |

Shift-reduce (bottom up) parser |

LL |

Context-free, the first and follow sets are disjoint, and forbids: ambiguity, left-recursion, and common prefixes. |

Recursive descent (top down) parser |

Regular |

A nonterminal may derive either terminals followed by a single nonterminal, or the empty string. |

Finite automaton |

Finite |

A nonterminal may derive terminals or the empty string. |

Finite automaton without cycles. |

Compilers consist of these 'phases':

| Phase | Description | Input | Output |

|---|---|---|---|

Scan / Tokenize / Lexical analysis |

Split source code into small chunks (tokens) such as identifiers, reserved words, literals, operators, etc. |

Source code |

Token stream |

Parse |

Check the syntax of the source code |

Token stream |

Parse tree |

Translate |

Translate low level syntax into high-level abstract syntax tree |

Parse tree |

Abstract syntax tree, symbol table |

Optimize |

Improve performance or structure |

Abstract syntax tree, symbol table |

Abstract synatx tree, symbol table |

Generate code |

Traverse the AST to generate code. |

Abstract syntax tree, symbol table |

Target code |

The front-end of a compiler consists of scanning and parsing; the back-end consists of translation, optimization and code generation.

Visitors visit (traverse) nodes in a tree to do some computation, without mixing computation into the nodes themselves.

Cheat at today’s crossword puzzle, the easy way with regexes!

cd ~/COMP603-2015 git pull upstream master grep -E "^regex-goes-here$" american-english.txt

A regular expression (regex) defines a language with these primitives and operators.

| Name | Notation | Meaning |

|---|---|---|

Primitives |

Regular expression building block. |

|

Empty Set |

{} |

Reject everything. |

Empty String |

"" |

Match the empty string. |

Symbol |

|

Match a single character. |

Operator |

Make a new regex from existing regexes. |

|

Sequence |

|

Match regex |

Alternation |

|

Match regex |

Kleene Star |

|

Match regex |

The primitives and operators above are complete:

we can define other regular expression operators in terms of them.

For example, a? optionally matches a; a? = a|"".

Another example: a+ matches a 1 or more times; a+ = a*a.

Trivially, finite languages are regular:

finite language: {"hello","cruel","world"}

equivalent regex: hello|cruel|world

Since regular languages can be infinite, they encompass the finite languages.

.* (Matches everything)

Regular languages can’t express everything; for example, they cannot check matching brackets in the general case. Hence, the other classes of languages.

The Chomsky hierarchy is a containment hierarchy of languages. What distinguishes one language category from another is restrictions placed on grammars or the underlying automaton.

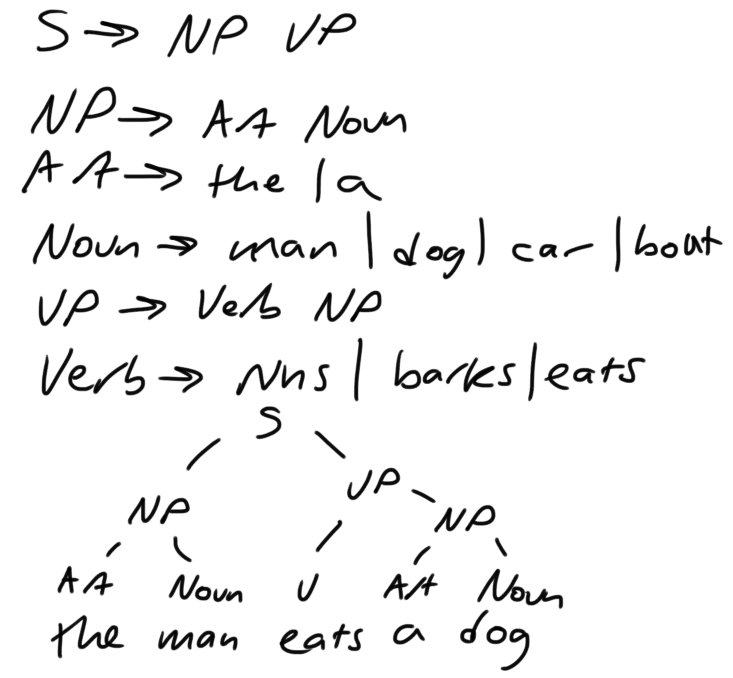

A grammar consists of a finite set of nonterminals (variables), a starting nonterminal, terminals (literals, words or symbols), and productions (rules) that map among terminals and nonterminals. Grammars define languages: they generate the set of strings in the language and test membership of a string in the language.

The example grammar below defines a small subset of English, with an example sentence. The example grammar is context-free because the left side of each arrow is a nonterminal.

|

Note

|

Please read Chapters 1, 2 and 3 (Pages 6-16) or Chapters 1, 2 and 3 of the Crafting a Compiler textbook by next week. If this is overwhelming, read the first sentence of each paragraph, then skip subsequent sentences if it made sense, otherwise read on. See this list for other free books. |

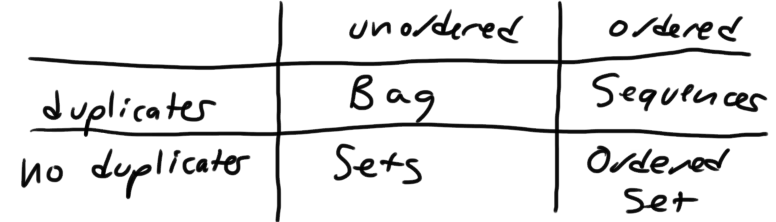

These are all collections.

A set is unordered and has no duplicates (no repeated values).

{ "hello", "world" } == { "world", "hello" }

A bag is unordered and allows duplicates (repeated values).

{ "buffalo", "my", "buffalo" } == { "my", "buffalo", "buffalo" }

A sequence is ordered and allows duplicates.

[ "hello", "cruel", "world" ] != [ "cruel", "world", "hello" ]

An ordered set is ordered and has no duplicates.

To summarize:

English subset

{

"This is a sentence in English.",

"This is another sentence in English."

}

Espanol subseto?

{

"Yo quiero Taco Bell",

"Donde esta el bano?"

}

An alphabet is a set of symbols (e.g., char).

A string is a sequence of symbols chosen from some alphabet.

Languages are (possibly infinite) sets of strings. A grammar constructs a language; regular expressions construct regular languages.

A compiler transforms source language into a target language.

javac, gcc, clang, etc.

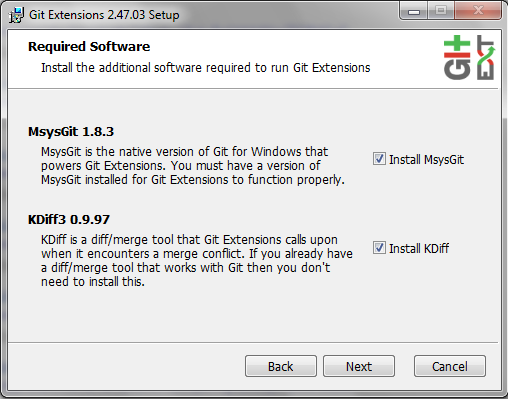

- Windows

-

Note

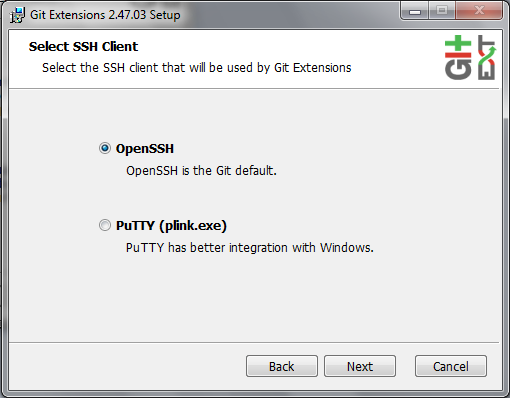

Install MsysGit, Install KDiff, and choose OpenSSH (not PuTTY); otherwise, stick to the default settings. - Mac OS X

-

Install GitX-dev, then Install XCode developer tools which ships with git (recommended) or install git from here.

- Linux

-

Install git using your package manager. QGit, a git frontend may also be available for your distribution.

NoteDon’t forget to use sudo with your package manager.

Starterupper sets up git and project hosting for this class; it is safe to run even if you already have git and SSH keys set up on your machine.

Open Git Bash (Windows) or Terminal (Linux, Mac OS X) and paste in the command below.

Press Insert to paste in Git Bash.

curl https://raw.githubusercontent.com/lawrancej/COMP603-2015/master/main.sh | bash

Open up prequiz.adoc in your favorite text editor (it is in your local git repository).

|

Warning

|

Do not use Notepad or Word. Use a real text editor. Suggestions: Notepad++ (Windows), Atom, or Sublime. |

Then, save your changes and submit your work to your repository.

cd ~/COMP603-2015 # The easy way git gui & # The leet way git add . git commit -m "Finished prequiz" git push -u --all origin