
Optical ranging is interesting for a number of reasons, and not only for this project. That's why I propose using the Pi to perform optical ranging, and developing two similar sensing systems. Both would be based on the Raspberry Pi's camera and the ability to access it through a C library.

Current sources at raspberrypi/userland would probably have to be modified so that the camera code can be called from inside our driver, rather than run as a separate system process that writes its output to stdout or a file (my cursory search of the repository has found only full, stand-alone command-line utilities, e.g. here).

Apparently the Raspberry Pi camera has a focal length of 3.6mm, with a horizontal field of view of 53° and a vertical field of view of 40°. Diagonal is 66°. More information can be found here.

Proposed rangers:

Single point ranging using 1 laser pointer and 1 camera

Parts:

1 Raspberry Pi camera

1 laser pointer

1 rig to hold them pointed in exactly the same direction at a known distance from each other

1 Raspberry Pi to control the individual parts, later perhaps a microcontroller

This device works by taking one still image with the Raspberry Pi camera, then activating the laser, taking a second image, and deactivating the laser. It assumes that the only difference between both pictures is the presence of the laser point.

Once both pictures have been taken, they are cut down into the pixels in which the laser pointer could have been detected. These arrays are subtracted from each other. The point with the largest positive difference between the picture with laser and without is assumed to be where the laser was detected.

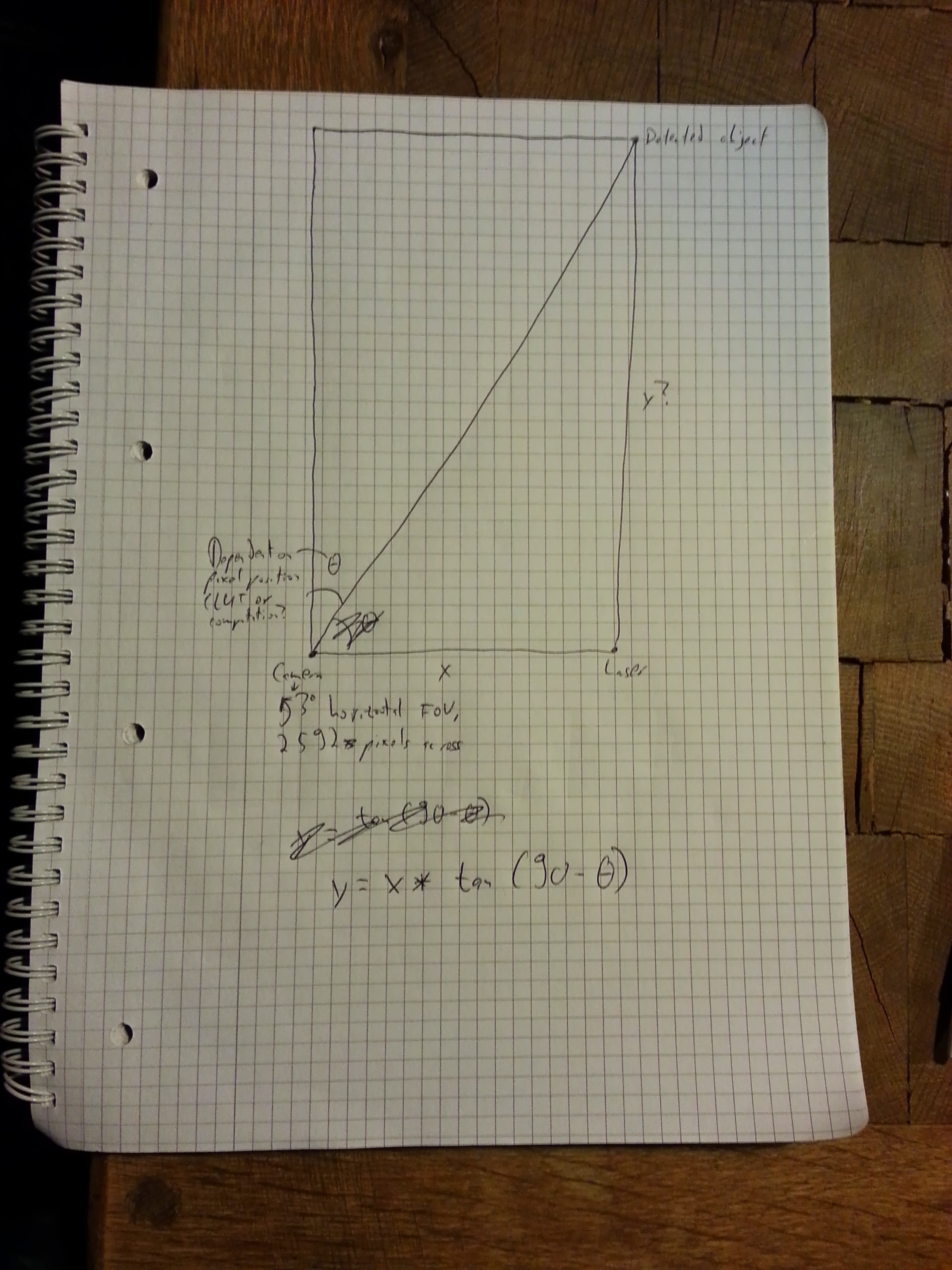
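The differencing step described above can be sketched as follows. This is a minimal illustration, not the project's code: it assumes both frames are already available as equally sized grayscale NumPy arrays, and the function name `find_laser_pixel` and the `roi` parameter are hypothetical names introduced here.

```python
import numpy as np

def find_laser_pixel(img_off, img_on, roi=None):
    """Return (row, col) of the largest positive brightness increase.

    img_off, img_on: 2-D uint8 grayscale arrays of equal shape
                     (laser off and laser on).
    roi: optional (row_start, row_stop, col_start, col_stop) window that
         limits the search to pixels where the laser dot could appear.
    """
    if img_off.shape != img_on.shape:
        raise ValueError("images must have the same shape")
    if roi is None:
        roi = (0, img_on.shape[0], 0, img_on.shape[1])
    r0, r1, c0, c1 = roi
    # Cast to a signed type so the subtraction cannot wrap around in uint8.
    diff = (img_on[r0:r1, c0:c1].astype(np.int16)
            - img_off[r0:r1, c0:c1].astype(np.int16))
    # argmax works on the flattened array; convert back to 2-D indices.
    row, col = np.unravel_index(np.argmax(diff), diff.shape)
    # Report coordinates relative to the full image, not the cropped window.
    return int(row) + r0, int(col) + c0
```

The signed cast matters: with plain uint8 arithmetic, a pixel that got darker between the two frames would wrap around to a large positive value and could be mistaken for the laser dot.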

When this pixel has been identified, the distance between the camera rig and the pinged object is computed using parallax equations.
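One way the parallax computation could look, under stated assumptions: the laser beam runs parallel to the camera's optical axis at a known sideways offset (the baseline), the camera behaves like an ideal pinhole, and the 53° horizontal field of view quoted above holds. Then the dot's pixel offset from the image centre is `focal_px * baseline / distance`, which can be solved for the distance. The function names and the image width are illustrative assumptions, not values from this project.

```python
import math

def focal_length_px(image_width_px, hfov_deg=53.0):
    """Pinhole focal length in pixels, derived from the horizontal field of view."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)

def laser_range(pixel_offset, baseline_m, image_width_px, hfov_deg=53.0):
    """Distance to the laser dot in metres.

    pixel_offset:   horizontal distance in pixels between the detected dot
                    and the image centre (must be positive).
    baseline_m:     distance between laser and camera axis in metres.
    image_width_px: width of the captured image in pixels (assumed known).
    """
    if pixel_offset <= 0:
        raise ValueError("pixel_offset must be positive; the dot is at infinity or misdetected")
    # Similar triangles: pixel_offset / focal_px == baseline_m / distance.
    return baseline_m * focal_length_px(image_width_px, hfov_deg) / pixel_offset
```

Because the range is inversely proportional to the pixel offset, resolution degrades quickly with distance: far objects move the dot by only a fraction of a pixel, so a longer baseline or a higher image resolution extends the usable range.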

Parts have been ordered; I'm still working on the theoretical part. More on the wiki. It would probably be a good idea to build a stand-alone device on this principle, with its own microcontroller, that measures the distance when asked and sends it back to the Pi or other calling device.

Simple diagram showing how it would work:

Stereo ranging of an entire scene using two cameras

Parts:

2 Raspberry Pi cameras

1 rig to hold them pointed in exactly the same direction at a known distance from each other

1 T-jack to switch back and forth between the cameras, allowing both to communicate with the Raspberry Pi using its camera port

1 Raspberry Pi to control the individual parts, later perhaps a microcontroller

This works with two Raspberry Pi cameras, calibrated using OpenCV. They are placed in a rig. When pinged by the Pi, both take pictures one after another and return them to the program.

The program saves both images. Simultaneously, it reduces both images' resolution and makes a depth map using stereo matching. The depth map is returned (can be used e.g. in erget/apisElectronica to detect obstacles).
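The pipeline described above (reduce resolution, match, convert to depth) might be sketched like this. The proposal intends to use OpenCV for calibration; the block below deliberately swaps in a naive sum-of-absolute-differences block matcher written in plain NumPy so that it is self-contained. It assumes rectified grayscale images, and all function names and parameters are hypothetical.

```python
import numpy as np

def downsample(img, factor):
    """Reduce resolution by averaging factor x factor pixel blocks."""
    h = img.shape[0] // factor * factor
    w = img.shape[1] // factor * factor
    blocks = img[:h, :w].astype(np.float32).reshape(
        h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

def disparity_map(left, right, max_disp, block=5):
    """Naive SAD block matching on rectified 2-D float arrays.

    For each left-image pixel, search 0..max_disp pixels to the left in the
    right image and keep the shift with the lowest patch difference.
    Border pixels that cannot hold a full search window stay at 0.
    """
    half = block // 2
    h, w = left.shape
    disp = np.zeros((h, w), dtype=np.int32)
    for y in range(half, h - half):
        for x in range(half + max_disp, w - half):
            patch = left[y - half:y + half + 1, x - half:x + half + 1]
            costs = [
                np.abs(patch - right[y - half:y + half + 1,
                                     x - d - half:x - d + half + 1]).sum()
                for d in range(max_disp + 1)
            ]
            disp[y, x] = int(np.argmin(costs))
    return disp

def depth_from_disparity(disp, focal_px, baseline_m):
    """Depth in metres: Z = focal_px * baseline_m / disparity (inf where disparity is 0)."""
    depth = np.full(disp.shape, np.inf, dtype=np.float64)
    valid = disp > 0
    depth[valid] = focal_px * baseline_m / disp[valid]
    return depth
```

The pure-Python loops are far too slow for full-resolution frames, which is one reason to downsample first. Note that `focal_px` has to be scaled by the same factor as the images. Since the two cameras are triggered one after another rather than simultaneously, this only gives a meaningful depth map for a static scene.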


See RaspiStill.c for how the Pi gets its picture data.

Looks like it's not a big thing. Check this out:

    /* Save the next GL framebuffer as the next camera still */
    int rc = raspitex_capture(&state.raspitex_state, output_file);
    if (rc != 0)
        vcos_log_error("Failed to capture GL preview");

So this actually passes a file, but of course we could just pass a memory file to raspitex_capture.

I cannot use OpenCV on the Pi with the Pi camera, so I'm leaving the Pi alone for the moment and working directly on sparse 3D reconstruction. Right now I'm looking at using either Bundler or VisualSFM, and will test both with my test video.

@freaf87 - Plausible?
