-

Notifications

You must be signed in to change notification settings - Fork 109

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

What would one use this for ? #9

Comments

|

Some applications:

|

|

Hi Bruno,

Thank you for your answer.

Is there any example code that shows how to do this?

Alef

On 28 Dec 2016, at 20:10, Bruno Ribeiro <[email protected]<mailto:[email protected]>> wrote:

Some applications:

* Head pose estimation (e.g. what direction the person is looking at)

* Extra features for face verification/identification

* Face morphing

* Face replacement/swap

* Detect activity of mouth, eyes, head, etc. (e.g. detect if someone is talking)

* Detect position of individual parts of the face (e.g. position of the eyes)

* Face frontalization

* Detect parts of the face under occlusion (some techniques provides this info)

—

You are receiving this because you authored the thread.

Reply to this email directly, view it on GitHub<#9 (comment)>, or mute the thread<https://github.com/notifications/unsubscribe-auth/AQIi0wPIKi2LSFkKkgHhVZGUvEY6yvybks5rMsIcgaJpZM4LXIc5>.

|

|

Hi @atv2016, yes there are examples here https://github.com/cheind/dest/blob/master/examples/dest_track_video.cpp It's a very basic sample that regresses facial landmarks in a video stream. We've use DEST for our virtual human bust texturing approach that is to be published soon (ISVC16). We used facial landmarks to determine eye and mouth regions in order to choose texture patches appropriately from them. |

|

Hi Christoph,

Thanks for your kind reply.

So how does one know which landmark is he nose, ears etc.

Would you consider doing some work for me (paid of course). I want to create a 3D mesh on the face as you see in the posit/aan models but not sure how to go about that.

I'd like to apply the landmarks to increased face recognition, but how could this be used. Would you store the landmarks and use the distance between each landmark? Would this distance always stay stable?

Thank you!

…Sent from my iPhone

On 29 Dec 2016, at 08:24, Christoph Heindl <[email protected]<mailto:[email protected]>> wrote:

Hi @atv2016<https://github.com/atv2016>, yes there are examples here

https://github.com/cheind/dest/blob/master/examples/dest_track_video.cpp

It's a very basic sample that regresses facial landmarks in a video stream.

We've use DEST for our virtual human bust texturing approach that is to be published soon (ISVC16). We used facial landmarks to determine eye and mouth regions in order to choose texture patches appropriately from them.

-

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub<#9 (comment)>, or mute the thread<https://github.com/notifications/unsubscribe-auth/AQIi04G7chzNkv8WDu1k-YGgY2yaEX9Oks5rM25PgaJpZM4LXIc5>.

|

|

About "which landmark is associated with each part", it depends on the dataset used for training. To improves face recognition, landmarks can be used in the preprocessing (face normalization) or as an feature vector. Notice that if you use as feature, it's important to normalize the landmarks (e.g. rotate landmarks to ensure the eyes are in the same horizontal line, normalize the values between [0, 1], etc.). In a very simple way, you could check if the probe landmarks have a good fit to the template landmarks (e.g. mean squared error between them). This error value can be used as score (the smaller, the better). |

I would recommend Patrik Huber's EOS module for 2D->3D model fitting. This could be used with a |

|

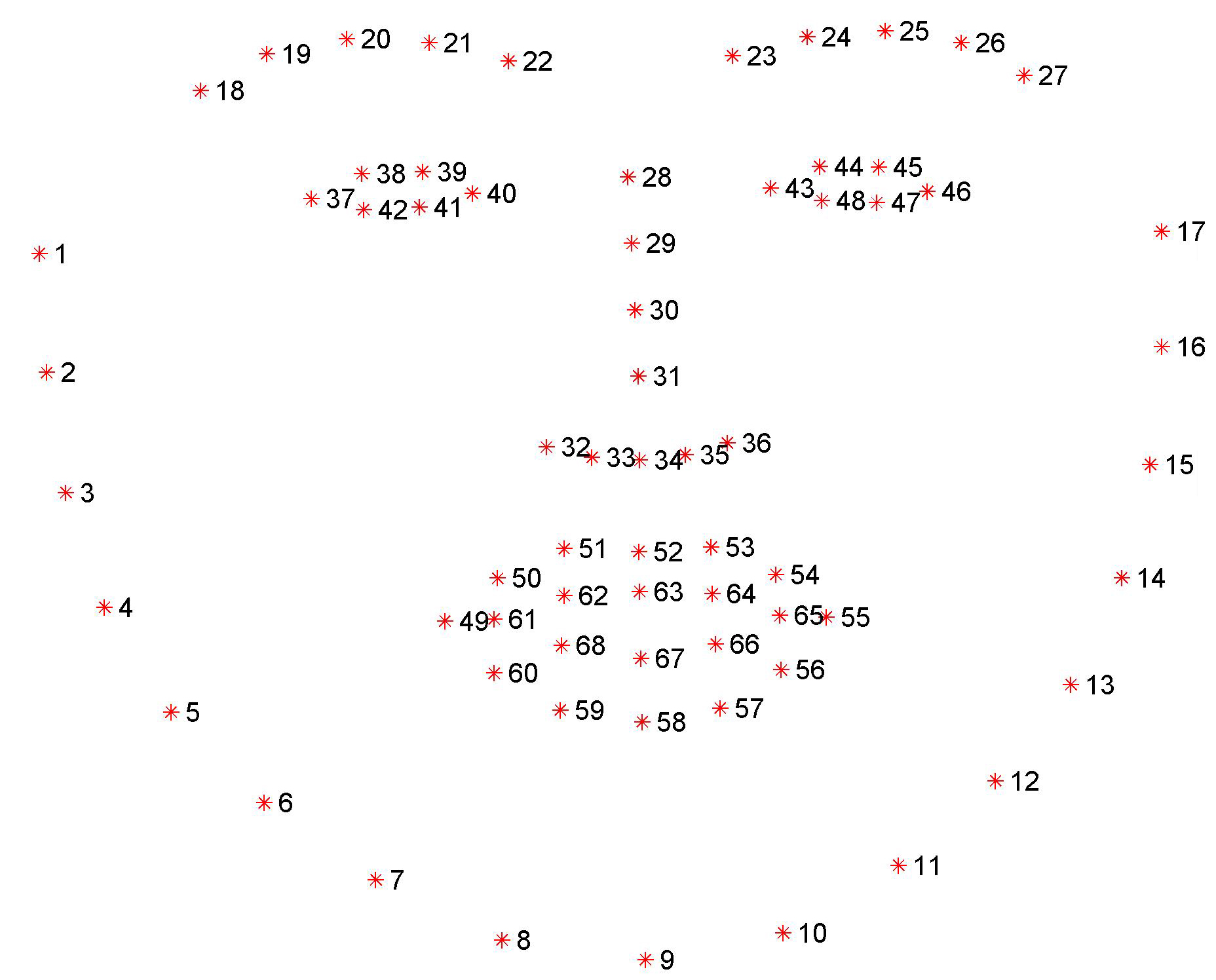

@atv2016 as @brunexgeek mentioned the way in which the landmarks are interpreted depends on the dataset used during training. The one i've been training the releases on is the i-bug 68 point annotation as shown below (mind you the image starts counting datapoints at 1 wheras DEST starts at 0) I wouldn't assume that distances between landmarks stay the same for the simple reason that distances measured in pixels between landmarks change with the distance from object to the camera. You could normalize distance by, say, the divide all landmark edge lengths by the length of the nose. This would give you relative lengths with respect to the nose length and they kinda should stay the same. However, if people turn infront of the camera you would still suffer severe distortions in edge lengths. What you could do (and has been done) is to use an RGBD camera that provides depth and color information. You could then map facial landmarks found in the RGB image to the aligned depth image (i.e 3d points) and measure distances there. That way perspective effects won't hit you. However, I still don't think that this a secure approach to unlock your home door :) If using depth cameras is not an alternative, check check out what @headupinclouds proposed. As far as your paid offer is concerned, I have to refuse. I doubt that I have spare time left to spend on such a project. Maybe you'll find someone else in the field willing to help you out. Good luck, |

What could i use landmarks for ?

The text was updated successfully, but these errors were encountered: