diff --git a/.github/ISSUE_TEMPLATE/----.md b/.github/ISSUE_TEMPLATE/----.md

index bd1427fbc30..3a90404e9de 100644

--- a/.github/ISSUE_TEMPLATE/----.md

+++ b/.github/ISSUE_TEMPLATE/----.md

@@ -4,27 +4,30 @@ about: Ask for help with a problem you have encountered

title: ''

labels: help wanted

assignees: ''

-

---

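We recommend using the English template, General question, so that your question can help more people.

### Confirm the following first

+

- I have searched the related issues but could not find the help I need.

- I have read the related documentation but still do not know how to solve the problem.

### Describe the problem you encountered

-[Fill in here]

+\[Fill in here\]

### Related information

+

1. The output of the `pip list | grep "mmcv\|mmcls\|^torch"` command

-[Fill in here]

+ \[Fill in here\]

2. If you modified the config file or used a new one, please state it here

+

```python

[Fill in here]

```

+

3. If the problem occurred during training, please provide the complete training log and error message

-[Fill in here]

+ \[Fill in here\]

4. If you made other related changes to the code in the `mmcls` folder, please state them here

-[Fill in here]

+ \[Fill in here\]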

diff --git a/.github/ISSUE_TEMPLATE/---.md b/.github/ISSUE_TEMPLATE/---.md

index c8d2880cd85..fe91547056b 100644

--- a/.github/ISSUE_TEMPLATE/---.md

+++ b/.github/ISSUE_TEMPLATE/---.md

@@ -1,32 +1,34 @@

---

name: New feature

about: Suggest an idea for this project

-title: "[Feature]"

+title: '[Feature]'

labels: enhancement

assignees: ''

-

---

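We recommend using the English template, Feature request, so that your question can help more people.

### Describe the feature

-[Fill in here]

+\[Fill in here\]

### Motivation

+

Please briefly explain why this new feature is needed.

Ex1. It is currently inconvenient to do xxx.

Ex2. A recent paper proposed a very helpful xx.

-[Fill in here]

+\[Fill in here\]

### Related resources

+

Is there a related official or third-party implementation? These would be a valuable reference.

-[Fill in here]

+\[Fill in here\]

### Additional information

+

Please put any other information or screenshots related to this feature here.

If you are willing to help implement this feature and submit a PR, please say so here; it would be very welcome.

-[Fill in here]

+\[Fill in here\]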

diff --git a/.github/ISSUE_TEMPLATE/---bug.md b/.github/ISSUE_TEMPLATE/---bug.md

index 21439b1ae16..a3ec4988c65 100644

--- a/.github/ISSUE_TEMPLATE/---bug.md

+++ b/.github/ISSUE_TEMPLATE/---bug.md

@@ -1,20 +1,21 @@

---

name: Report a bug

about: Report a problem to help us improve

-title: "[Bug]"

+title: '[Bug]'

labels: bug

assignees: ''

-

---

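We recommend using the English template, Bug report, so that your question can help more people.

### Describe the bug

+

Briefly describe the bug you encountered.

-[Fill in here]

+\[Fill in here\]

### To reproduce

+

The detailed commands you ran on the command line.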

```shell

@@ -22,18 +23,22 @@ assignees: ''

```

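### Related information

+

1. The output of the `pip list | grep "mmcv\|mmcls\|^torch"` command

-[Fill in here]

+ \[Fill in here\]

2. If you modified the config file or used a new one, please state it here

+

```python

[Fill in here]

```

+

3. If the problem occurred during training, please provide the complete training log and error message

-[Fill in here]

+ \[Fill in here\]

4. If you made other related changes to the code in the `mmcls` folder, please state them here

-[Fill in here]

+ \[Fill in here\]

### Additional context

+

Any other information, screenshots, etc. related to this bug.

-[Fill in here]

+\[Fill in here\]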

diff --git a/.github/ISSUE_TEMPLATE/bug_report.md b/.github/ISSUE_TEMPLATE/bug_report.md

index 3d31f203900..c00c1f59600 100644

--- a/.github/ISSUE_TEMPLATE/bug_report.md

+++ b/.github/ISSUE_TEMPLATE/bug_report.md

@@ -1,18 +1,19 @@

---

name: Bug report

about: Create a report to help us improve

-title: "[Bug]"

+title: '[Bug]'

labels: bug

assignees: ''

-

---

### Describe the bug

+

A clear and concise description of what the bug is.

-[here]

+\[here\]

### To Reproduce

+

The command you executed.

```shell

@@ -20,18 +21,22 @@ The command you executed.

```

### Post related information

+

1. The output of `pip list | grep "mmcv\|mmcls\|^torch"`

-[here]

+ \[here\]

2. Your config file if you modified it or created a new one.

+

```python

[here]

```

+

3. Your train log file if you meet the problem during training.

-[here]

+ \[here\]

4. Other code you modified in the `mmcls` folder.

-[here]

+ \[here\]

### Additional context

+

Add any other context about the problem here.

-[here]

+\[here\]

diff --git a/.github/ISSUE_TEMPLATE/feature_request.md b/.github/ISSUE_TEMPLATE/feature_request.md

index f724b9fb6ce..23b7c097b8c 100644

--- a/.github/ISSUE_TEMPLATE/feature_request.md

+++ b/.github/ISSUE_TEMPLATE/feature_request.md

@@ -1,30 +1,32 @@

---

name: Feature request

about: Suggest an idea for this project

-title: "[Feature]"

+title: '[Feature]'

labels: enhancement

assignees: ''

-

---

### Describe the feature

-[here]

+\[here\]

### Motivation

+

A clear and concise description of the motivation of the feature.

-Ex1. It is inconvenient when [....].

-Ex2. There is a recent paper [....], which is very helpful for [....].

+Ex1. It is inconvenient when \[....\].

+Ex2. There is a recent paper \[....\], which is very helpful for \[....\].

-[here]

+\[here\]

### Related resources

+

If there is an official code release or third-party implementation, please also provide the information here, which would be very helpful.

-[here]

+\[here\]

### Additional context

+

Add any other context or screenshots about the feature request here.

If you would like to implement the feature and create a PR, please leave a comment here and that would be much appreciated.

-[here]

+\[here\]

diff --git a/.github/ISSUE_TEMPLATE/general-questions.md b/.github/ISSUE_TEMPLATE/general-questions.md

index 929da065e45..42d5fb2e4c2 100644

--- a/.github/ISSUE_TEMPLATE/general-questions.md

+++ b/.github/ISSUE_TEMPLATE/general-questions.md

@@ -4,25 +4,28 @@ about: 'Ask general questions to get help '

title: ''

labels: help wanted

assignees: ''

-

---

### Checklist

+

- I have searched related issues but cannot get the expected help.

- I have read related documents and don't know what to do.

### Describe the question you meet

-[here]

+\[here\]

### Post related information

+

1. The output of `pip list | grep "mmcv\|mmcls\|^torch"`

-[here]

+ \[here\]

2. Your config file if you modified it or created a new one.

+

```python

[here]

```

+

3. Your train log file if you meet the problem during training.

-[here]

+ \[here\]

4. Other code you modified in the `mmcls` folder.

-[here]

+ \[here\]

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index 82fa87e6380..0d19d5f67bf 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -25,12 +25,15 @@ repos:

args: ["--remove"]

- id: mixed-line-ending

args: ["--fix=lf"]

- - repo: https://github.com/markdownlint/markdownlint

- rev: v0.11.0

- hooks:

- - id: markdownlint

- args: ["-r", "~MD002,~MD013,~MD029,~MD033,~MD034",

- "-t", "allow_different_nesting"]

+ - repo: https://github.com/executablebooks/mdformat

+ rev: 0.7.9

+ hooks:

+ - id: mdformat

+ args: ["--number", "--table-width", "200"]

+ additional_dependencies:

+ - mdformat-openmmlab

+ - mdformat_frontmatter

+ - linkify-it-py

- repo: https://github.com/codespell-project/codespell

rev: v2.1.0

hooks:

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

new file mode 100644

index 00000000000..8a0c63299f0

--- /dev/null

+++ b/CONTRIBUTING.md

@@ -0,0 +1,61 @@

+# Contributing to OpenMMLab

+

+All kinds of contributions are welcome, including but not limited to the following.

+

+- Fix typos or bugs

+- Add documentation or translate the documentation into other languages

+- Add new features and components

+

+## Workflow

+

+1. Fork and pull the latest OpenMMLab repository (MMClassification).

+2. Check out a new branch (do not use the master branch for PRs).

+3. Commit your changes.

+4. Create a PR.

+

+```{note}

+If you plan to add some new features that involve large changes, it is encouraged to open an issue for discussion first.

+```

+

+## Code style

+

+### Python

+

+We adopt [PEP8](https://www.python.org/dev/peps/pep-0008/) as the preferred code style.

+

+We use the following tools for linting and formatting:

+

+- [flake8](https://github.com/PyCQA/flake8): A wrapper around some linter tools.

+- [isort](https://github.com/timothycrosley/isort): A Python utility to sort imports.

+- [yapf](https://github.com/google/yapf): A formatter for Python files.

+- [codespell](https://github.com/codespell-project/codespell): A Python utility to fix common misspellings in text files.

+- [mdformat](https://github.com/executablebooks/mdformat): Mdformat is an opinionated Markdown formatter that can be used to enforce a consistent style in Markdown files.

+- [docformatter](https://github.com/myint/docformatter): A formatter for docstrings.

+

+Style configurations can be found in [setup.cfg](./setup.cfg).

+

+We use a [pre-commit hook](https://pre-commit.com/) that checks and formats for `flake8`, `yapf`, `isort`, `trailing whitespaces`, and `markdown files`,

+fixes `end-of-files`, `double-quoted-strings`, `python-encoding-pragma`, and `mixed-line-ending`, and sorts `requirements.txt` automatically on every commit.

+The config for the pre-commit hook is stored in [.pre-commit-config](https://github.com/open-mmlab/mmclassification/blob/master/.pre-commit-config.yaml).

+

+After you clone the repository, you will need to install and initialize the pre-commit hook.

+

+```shell

+pip install -U pre-commit

+```

+

+From the repository folder

+

+```shell

+pre-commit install

+```

+

+After this, the code linters and formatters will be enforced on every commit.

+

+```{important}

+Before you create a PR, make sure that your code lints and is formatted by yapf.

+```

+

+### C++ and CUDA

+

+We follow the [Google C++ Style Guide](https://google.github.io/styleguide/cppguide.html).

diff --git a/README.md b/README.md

index f593d2af1a1..f47fc145c31 100644

--- a/README.md

+++ b/README.md

@@ -1,6 +1,6 @@

-

+

OpenMMLab website

@@ -19,20 +19,19 @@

+[](https://pypi.org/project/mmcls)

+[](https://mmclassification.readthedocs.io/en/latest/)

+[](https://github.com/open-mmlab/mmclassification/actions)

+[](https://codecov.io/gh/open-mmlab/mmclassification)

+[](https://github.com/open-mmlab/mmclassification/blob/master/LICENSE)

+[](https://github.com/open-mmlab/mmclassification/issues)

+[](https://github.com/open-mmlab/mmclassification/issues)

- [](https://pypi.org/project/mmcls)

- [](https://mmclassification.readthedocs.io/en/latest/)

- [](https://github.com/open-mmlab/mmclassification/actions)

- [](https://codecov.io/gh/open-mmlab/mmclassification)

- [](https://github.com/open-mmlab/mmclassification/blob/master/LICENSE)

- [](https://github.com/open-mmlab/mmclassification/issues)

- [](https://github.com/open-mmlab/mmclassification/issues)

-

- [📘 Documentation](https://mmclassification.readthedocs.io/en/latest/) |

- [🛠️ Installation](https://mmclassification.readthedocs.io/en/latest/install.html) |

- [👀 Model Zoo](https://mmclassification.readthedocs.io/en/latest/model_zoo.html) |

- [🆕 Update News](https://mmclassification.readthedocs.io/en/latest/changelog.html) |

- [🤔 Reporting Issues](https://github.com/open-mmlab/mmclassification/issues/new/choose)

+[📘 Documentation](https://mmclassification.readthedocs.io/en/latest/) |

+[🛠️ Installation](https://mmclassification.readthedocs.io/en/latest/install.html) |

+[👀 Model Zoo](https://mmclassification.readthedocs.io/en/latest/model_zoo.html) |

+[🆕 Update News](https://mmclassification.readthedocs.io/en/latest/changelog.html) |

+[🤔 Reporting Issues](https://github.com/open-mmlab/mmclassification/issues/new/choose)

-

+

OpenMMLab website

@@ -19,19 +19,19 @@

- [](https://pypi.org/project/mmcls)

- [](https://mmclassification.readthedocs.io/zh_CN/latest/)

- [](https://github.com/open-mmlab/mmclassification/actions)

- [](https://codecov.io/gh/open-mmlab/mmclassification)

- [](https://github.com/open-mmlab/mmclassification/blob/master/LICENSE)

- [](https://github.com/open-mmlab/mmclassification/issues)

- [](https://github.com/open-mmlab/mmclassification/issues)

+[](https://pypi.org/project/mmcls)

+[](https://mmclassification.readthedocs.io/zh_CN/latest/)

+[](https://github.com/open-mmlab/mmclassification/actions)

+[](https://codecov.io/gh/open-mmlab/mmclassification)

+[](https://github.com/open-mmlab/mmclassification/blob/master/LICENSE)

+[](https://github.com/open-mmlab/mmclassification/issues)

+[](https://github.com/open-mmlab/mmclassification/issues)

- [📘 Chinese Documentation](https://mmclassification.readthedocs.io/zh_CN/latest/) |

- [🛠️ Installation](https://mmclassification.readthedocs.io/zh_CN/latest/install.html) |

- [👀 Model Zoo](https://mmclassification.readthedocs.io/zh_CN/latest/model_zoo.html) |

- [🆕 Update News](https://mmclassification.readthedocs.io/en/latest/changelog.html) |

- [🤔 Reporting Issues](https://github.com/open-mmlab/mmclassification/issues/new/choose)

+[📘 Chinese Documentation](https://mmclassification.readthedocs.io/zh_CN/latest/) |

+[🛠️ Installation](https://mmclassification.readthedocs.io/zh_CN/latest/install.html) |

+[👀 Model Zoo](https://mmclassification.readthedocs.io/zh_CN/latest/model_zoo.html) |

+[🆕 Update News](https://mmclassification.readthedocs.io/en/latest/changelog.html) |

+[🤔 Reporting Issues](https://github.com/open-mmlab/mmclassification/issues/new/choose)

@@ -17,15 +20,13 @@ Although convolutional networks have been the dominant architecture for vision t

### ImageNet-1k

-| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

-|:---------------:|:---------:|:--------:|:---------:|:---------:|:------:|:--------:|

-| ConvMixer-768/32\* | 21.11 | 19.62 | 80.16 | 95.08 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convmixer/convmixer-768-32_10xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convmixer/convmixer-768-32_3rdparty_10xb64_in1k_20220323-bca1f7b8.pth) |

-| ConvMixer-1024/20\* | 24.38 | 5.55 | 76.94 | 93.36 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convmixer/convmixer-1024-20_10xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convmixer/convmixer-1024-20_3rdparty_10xb64_in1k_20220323-48f8aeba.pth) |

-| ConvMixer-1536/20\* | 51.63 | 48.71 | 81.37 | 95.61 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convmixer/convmixer-1536-20_10xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convmixer/convmixer-1536_20_3rdparty_10xb64_in1k_20220323-ea5786f3.pth) |

-

-

-*Models with \* are converted from the [official repo](https://github.com/locuslab/convmixer). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

+| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

+| :-----------------: | :-------: | :------: | :-------: | :-------: | :----------------------------------------------------------------------: | :------------------------------------------------------------------------: |

+| ConvMixer-768/32\* | 21.11 | 19.62 | 80.16 | 95.08 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convmixer/convmixer-768-32_10xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convmixer/convmixer-768-32_3rdparty_10xb64_in1k_20220323-bca1f7b8.pth) |

+| ConvMixer-1024/20\* | 24.38 | 5.55 | 76.94 | 93.36 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convmixer/convmixer-1024-20_10xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convmixer/convmixer-1024-20_3rdparty_10xb64_in1k_20220323-48f8aeba.pth) |

+| ConvMixer-1536/20\* | 51.63 | 48.71 | 81.37 | 95.61 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convmixer/convmixer-1536-20_10xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convmixer/convmixer-1536_20_3rdparty_10xb64_in1k_20220323-ea5786f3.pth) |

+*Models with * are converted from the [official repo](https://github.com/locuslab/convmixer). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

## Citation

diff --git a/configs/convnext/README.md b/configs/convnext/README.md

index 357e8de40b0..7db81366aa4 100644

--- a/configs/convnext/README.md

+++ b/configs/convnext/README.md

@@ -1,14 +1,17 @@

# ConvNeXt

> [A ConvNet for the 2020s](https://arxiv.org/abs/2201.03545v1)

+

## Abstract

+

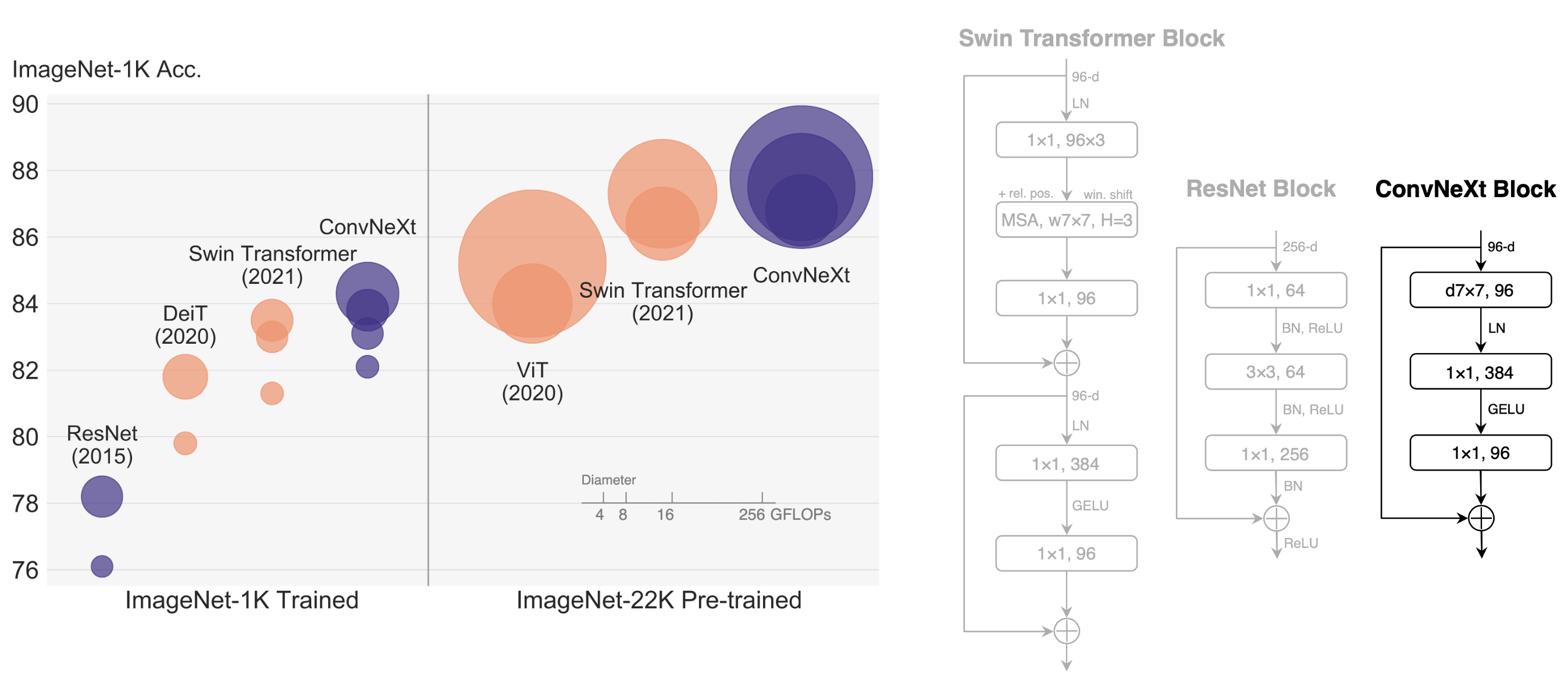

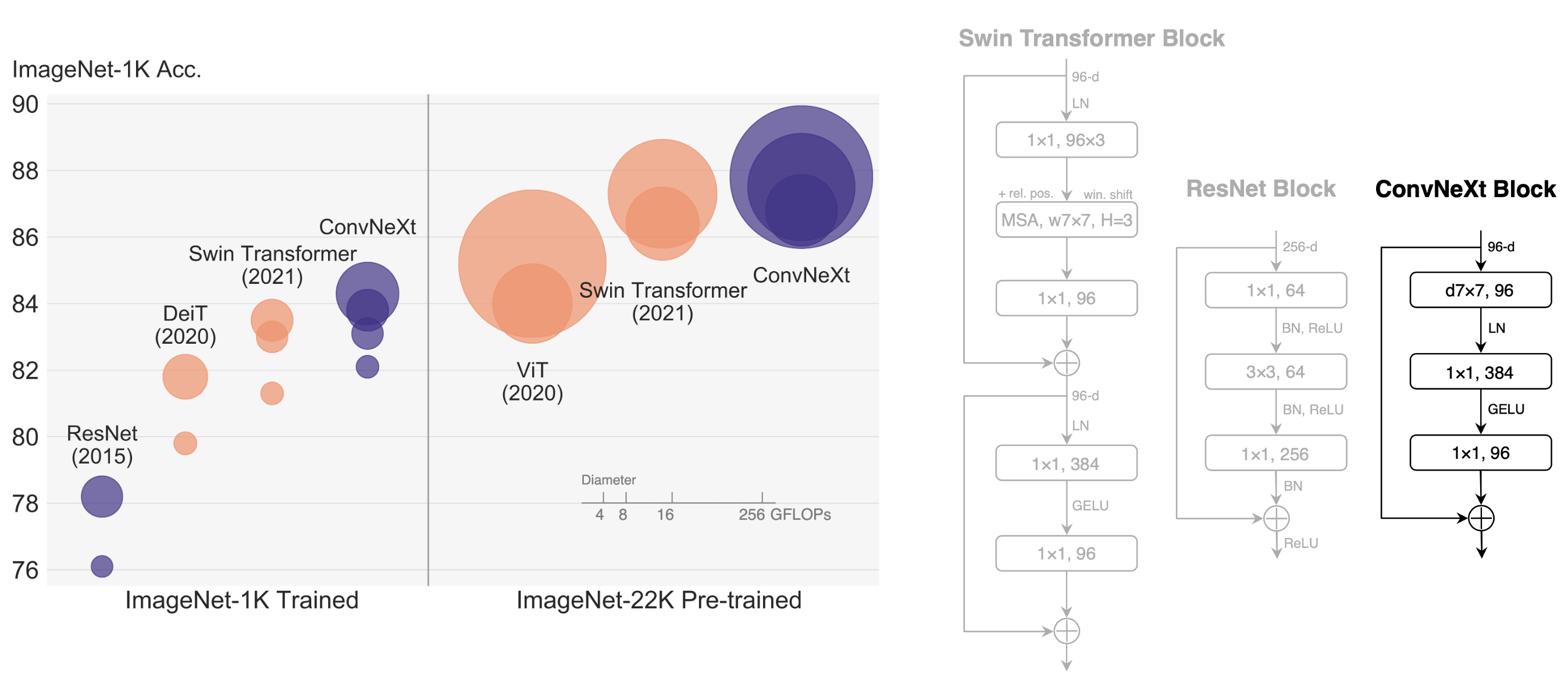

The "Roaring 20s" of visual recognition began with the introduction of Vision Transformers (ViTs), which quickly superseded ConvNets as the state-of-the-art image classification model. A vanilla ViT, on the other hand, faces difficulties when applied to general computer vision tasks such as object detection and semantic segmentation. It is the hierarchical Transformers (e.g., Swin Transformers) that reintroduced several ConvNet priors, making Transformers practically viable as a generic vision backbone and demonstrating remarkable performance on a wide variety of vision tasks. However, the effectiveness of such hybrid approaches is still largely credited to the intrinsic superiority of Transformers, rather than the inherent inductive biases of convolutions. In this work, we reexamine the design spaces and test the limits of what a pure ConvNet can achieve. We gradually "modernize" a standard ResNet toward the design of a vision Transformer, and discover several key components that contribute to the performance difference along the way. The outcome of this exploration is a family of pure ConvNet models dubbed ConvNeXt. Constructed entirely from standard ConvNet modules, ConvNeXts compete favorably with Transformers in terms of accuracy and scalability, achieving 87.8% ImageNet top-1 accuracy and outperforming Swin Transformers on COCO detection and ADE20K segmentation, while maintaining the simplicity and efficiency of standard ConvNets.

+

@@ -17,32 +20,32 @@ The "Roaring 20s" of visual recognition began with the introduction of Vision Tr

### ImageNet-1k

-| Model | Pretrain | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

-|:---------------:|:------------:|:---------:|:--------:|:---------:|:---------:|:------:|:--------:|

-| ConvNeXt-T\* | From scratch | 28.59 | 4.46 | 82.05 | 95.86 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-tiny_32xb128_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-tiny_3rdparty_32xb128_in1k_20220124-18abde00.pth) |

-| ConvNeXt-S\* | From scratch | 50.22 | 8.69 | 83.13 | 96.44 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-small_32xb128_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-small_3rdparty_32xb128_in1k_20220124-d39b5192.pth) |

-| ConvNeXt-B\* | From scratch | 88.59 | 15.36 | 83.85 | 96.74 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-base_32xb128_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-base_3rdparty_32xb128_in1k_20220124-d0915162.pth) |

-| ConvNeXt-B\* | ImageNet-21k | 88.59 | 15.36 | 85.81 | 97.86 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-base_32xb128_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-base_in21k-pre-3rdparty_32xb128_in1k_20220124-eb2d6ada.pth) |

-| ConvNeXt-L\* | From scratch | 197.77 | 34.37 | 84.30 | 96.89 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-large_64xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-large_3rdparty_64xb64_in1k_20220124-f8a0ded0.pth) |

-| ConvNeXt-L\* | ImageNet-21k | 197.77 | 34.37 | 86.61 | 98.04 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-large_64xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-large_in21k-pre-3rdparty_64xb64_in1k_20220124-2412403d.pth) |

-| ConvNeXt-XL\* | ImageNet-21k | 350.20 | 60.93 | 86.97 | 98.20 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-xlarge_64xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-xlarge_in21k-pre-3rdparty_64xb64_in1k_20220124-76b6863d.pth) |

+| Model | Pretrain | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

+| :-----------: | :----------: | :-------: | :------: | :-------: | :-------: | :-------------------------------------------------------------------: | :---------------------------------------------------------------------: |

+| ConvNeXt-T\* | From scratch | 28.59 | 4.46 | 82.05 | 95.86 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-tiny_32xb128_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-tiny_3rdparty_32xb128_in1k_20220124-18abde00.pth) |

+| ConvNeXt-S\* | From scratch | 50.22 | 8.69 | 83.13 | 96.44 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-small_32xb128_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-small_3rdparty_32xb128_in1k_20220124-d39b5192.pth) |

+| ConvNeXt-B\* | From scratch | 88.59 | 15.36 | 83.85 | 96.74 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-base_32xb128_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-base_3rdparty_32xb128_in1k_20220124-d0915162.pth) |

+| ConvNeXt-B\* | ImageNet-21k | 88.59 | 15.36 | 85.81 | 97.86 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-base_32xb128_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-base_in21k-pre-3rdparty_32xb128_in1k_20220124-eb2d6ada.pth) |

+| ConvNeXt-L\* | From scratch | 197.77 | 34.37 | 84.30 | 96.89 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-large_64xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-large_3rdparty_64xb64_in1k_20220124-f8a0ded0.pth) |

+| ConvNeXt-L\* | ImageNet-21k | 197.77 | 34.37 | 86.61 | 98.04 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-large_64xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-large_in21k-pre-3rdparty_64xb64_in1k_20220124-2412403d.pth) |

+| ConvNeXt-XL\* | ImageNet-21k | 350.20 | 60.93 | 86.97 | 98.20 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/convnext/convnext-xlarge_64xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-xlarge_in21k-pre-3rdparty_64xb64_in1k_20220124-76b6863d.pth) |

-*Models with \* are converted from the [official repo](https://github.com/facebookresearch/ConvNeXt). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

+*Models with * are converted from the [official repo](https://github.com/facebookresearch/ConvNeXt). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

### Pre-trained Models

The pre-trained models on ImageNet-1k or ImageNet-21k are used to fine-tune on the downstream tasks.

-| Model | Training Data | Params(M) | Flops(G) | Download |

-|:--------------:|:-------------:|:---------:|:--------:|:--------:|

-| ConvNeXt-T\* | ImageNet-1k | 28.59 | 4.46 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-tiny_3rdparty_32xb128-noema_in1k_20220222-2908964a.pth) |

-| ConvNeXt-S\* | ImageNet-1k | 50.22 | 8.69 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-small_3rdparty_32xb128-noema_in1k_20220222-fa001ca5.pth) |

-| ConvNeXt-B\* | ImageNet-1k | 88.59 | 15.36 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-base_3rdparty_32xb128-noema_in1k_20220222-dba4f95f.pth) |

-| ConvNeXt-B\* | ImageNet-21k | 88.59 | 15.36 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-base_3rdparty_in21k_20220124-13b83eec.pth) |

-| ConvNeXt-L\* | ImageNet-21k | 197.77 | 34.37 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-large_3rdparty_in21k_20220124-41b5a79f.pth) |

-| ConvNeXt-XL\* | ImageNet-21k | 350.20 | 60.93 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-xlarge_3rdparty_in21k_20220124-f909bad7.pth) |

+| Model | Training Data | Params(M) | Flops(G) | Download |

+| :-----------: | :-----------: | :-------: | :------: | :-----------------------------------------------------------------------------------------------------------------------------------: |

+| ConvNeXt-T\* | ImageNet-1k | 28.59 | 4.46 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-tiny_3rdparty_32xb128-noema_in1k_20220222-2908964a.pth) |

+| ConvNeXt-S\* | ImageNet-1k | 50.22 | 8.69 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-small_3rdparty_32xb128-noema_in1k_20220222-fa001ca5.pth) |

+| ConvNeXt-B\* | ImageNet-1k | 88.59 | 15.36 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-base_3rdparty_32xb128-noema_in1k_20220222-dba4f95f.pth) |

+| ConvNeXt-B\* | ImageNet-21k | 88.59 | 15.36 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-base_3rdparty_in21k_20220124-13b83eec.pth) |

+| ConvNeXt-L\* | ImageNet-21k | 197.77 | 34.37 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-large_3rdparty_in21k_20220124-41b5a79f.pth) |

+| ConvNeXt-XL\* | ImageNet-21k | 350.20 | 60.93 | [model](https://download.openmmlab.com/mmclassification/v0/convnext/convnext-xlarge_3rdparty_in21k_20220124-f909bad7.pth) |

-*Models with \* are converted from the [official repo](https://github.com/facebookresearch/ConvNeXt).*

+*Models with * are converted from the [official repo](https://github.com/facebookresearch/ConvNeXt).*

## Citation

diff --git a/configs/cspnet/README.md b/configs/cspnet/README.md

index c2507e92205..10eb9d0d505 100644

--- a/configs/cspnet/README.md

+++ b/configs/cspnet/README.md

@@ -1,14 +1,17 @@

# CSPNet

> [CSPNet: A New Backbone that can Enhance Learning Capability of CNN](https://arxiv.org/abs/1911.11929)

+

## Abstract

+

Neural networks have enabled state-of-the-art approaches to achieve incredible results on computer vision tasks such as object detection. However, such success greatly relies on costly computation resources, which hinders people with cheap devices from appreciating the advanced technology. In this paper, we propose Cross Stage Partial Network (CSPNet) to mitigate the problem that previous works require heavy inference computations from the network architecture perspective. We attribute the problem to the duplicate gradient information within network optimization. The proposed networks respect the variability of the gradients by integrating feature maps from the beginning and the end of a network stage, which, in our experiments, reduces computations by 20% with equivalent or even superior accuracy on the ImageNet dataset, and significantly outperforms state-of-the-art approaches in terms of AP50 on the MS COCO object detection dataset. The CSPNet is easy to implement and general enough to cope with architectures based on ResNet, ResNeXt, and DenseNet. Source code is at this https URL.

+

@@ -17,14 +20,13 @@ Neural networks have enabled state-of-the-art approaches to achieve incredible r

### ImageNet-1k

-| Model | Pretrain | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

-|:---------------:|:------------:|:---------:|:--------:|:---------:|:---------:|:------:|:--------:|

-| CSPDarkNet50\* | From scratch | 27.64 | 5.04 | 80.05 | 95.07 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/cspnet/cspdarknet50_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/cspnet/cspdarknet50_3rdparty_8xb32_in1k_20220329-bd275287.pth) |

-| CSPResNet50\* | From scratch | 21.62 | 3.48 | 79.55 | 94.68 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/cspnet/cspresnet50_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/cspnet/cspresnet50_3rdparty_8xb32_in1k_20220329-dd6dddfb.pth) |

-| CSPResNeXt50\* | From scratch | 20.57 | 3.11 | 79.96 | 94.96 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/cspnet/cspresnext50_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/cspnet/cspresnext50_3rdparty_8xb32_in1k_20220329-2cc84d21.pth) |

-

+| Model | Pretrain | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

+| :------------: | :----------: | :-------: | :------: | :-------: | :-------: | :------------------------------------------------------------------: | :---------------------------------------------------------------------: |

+| CSPDarkNet50\* | From scratch | 27.64 | 5.04 | 80.05 | 95.07 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/cspnet/cspdarknet50_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/cspnet/cspdarknet50_3rdparty_8xb32_in1k_20220329-bd275287.pth) |

+| CSPResNet50\* | From scratch | 21.62 | 3.48 | 79.55 | 94.68 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/cspnet/cspresnet50_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/cspnet/cspresnet50_3rdparty_8xb32_in1k_20220329-dd6dddfb.pth) |

+| CSPResNeXt50\* | From scratch | 20.57 | 3.11 | 79.96 | 94.96 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/cspnet/cspresnext50_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/cspnet/cspresnext50_3rdparty_8xb32_in1k_20220329-2cc84d21.pth) |

-*Models with \* are converted from the [timm repo](https://github.com/rwightman/pytorch-image-models). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

+*Models with * are converted from the [timm repo](https://github.com/rwightman/pytorch-image-models). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

## Citation

diff --git a/configs/deit/README.md b/configs/deit/README.md

index aa3b1544b86..e3103658a2a 100644

--- a/configs/deit/README.md

+++ b/configs/deit/README.md

@@ -1,6 +1,7 @@

# DeiT

> [Training data-efficient image transformers & distillation through attention](https://arxiv.org/abs/2012.12877)

+

## Abstract

@@ -17,19 +18,19 @@ Recently, neural networks purely based on attention were shown to address image

The teacher of the distilled version DeiT is RegNetY-16GF.

-| Model | Pretrain | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

-|:---------------------:|:------------:|:---------:|:--------:|:---------:|:---------:|:------:|:--------:|

-| DeiT-tiny | From scratch | 5.72 | 1.08 | 74.50 | 92.24 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/deit/deit-tiny_pt-4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-tiny_pt-4xb256_in1k_20220218-13b382a0.pth) | [log](https://download.openmmlab.com/mmclassification/v0/deit/deit-tiny_pt-4xb256_in1k_20220218-13b382a0.log.json) |

-| DeiT-tiny distilled\* | From scratch | 5.72 | 1.08 | 74.51 | 91.90 | [config](https://github.com/open-mmlab/mmclassification/tree/master/configs/deit/deit-tiny-distilled_pt-4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-tiny-distilled_3rdparty_pt-4xb256_in1k_20211216-c429839a.pth) |

-| DeiT-small | From scratch | 22.05 | 4.24 | 80.69 | 95.06 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/deit/deit-small_pt-4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-small_pt-4xb256_in1k_20220218-9425b9bb.pth) | [log](https://download.openmmlab.com/mmclassification/v0/deit/deit-small_pt-4xb256_in1k_20220218-9425b9bb.log.json) |

-| DeiT-small distilled\*| From scratch | 22.05 | 4.24 | 81.17 | 95.40 | [config](https://github.com/open-mmlab/mmclassification/tree/master/configs/deit/deit-small-distilled_pt-4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-small-distilled_3rdparty_pt-4xb256_in1k_20211216-4de1d725.pth) |

-| DeiT-base | From scratch | 86.57 | 16.86 | 81.76 | 95.81 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/deit/deit-base_pt-16xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-base_pt-16xb64_in1k_20220216-db63c16c.pth) | [log](https://download.openmmlab.com/mmclassification/v0/deit/deit-base_pt-16xb64_in1k_20220216-db63c16c.log.json) |

-| DeiT-base\* | From scratch | 86.57 | 16.86 | 81.79 | 95.59 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/deit/deit-base_pt-16xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-base_3rdparty_pt-16xb64_in1k_20211124-6f40c188.pth) |

-| DeiT-base distilled\* | From scratch | 86.57 | 16.86 | 83.33 | 96.49 | [config](https://github.com/open-mmlab/mmclassification/tree/master/configs/deit/deit-base-distilled_pt-16xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-base-distilled_3rdparty_pt-16xb64_in1k_20211216-42891296.pth) |

-| DeiT-base 384px\* | ImageNet-1k | 86.86 | 49.37 | 83.04 | 96.31 | [config](https://github.com/open-mmlab/mmclassification/tree/master/configs/deit/deit-base_ft-16xb32_in1k-384px.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-base_3rdparty_ft-16xb32_in1k-384px_20211124-822d02f2.pth) |

-| DeiT-base distilled 384px\* | ImageNet-1k | 86.86 | 49.37 | 85.55 | 97.35 | [config](https://github.com/open-mmlab/mmclassification/tree/master/configs/deit/deit-base-distilled_ft-16xb32_in1k-384px.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-base-distilled_3rdparty_ft-16xb32_in1k-384px_20211216-e48d6000.pth) |

-

-*Models with \* are converted from the [official repo](https://github.com/facebookresearch/deit). The config files of these models are only for validation. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

+| Model | Pretrain | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

+| :-------------------------: | :----------: | :-------: | :------: | :-------: | :-------: | :------------------------------------------------------------: | :--------------------------------------------------------------: |

+| DeiT-tiny | From scratch | 5.72 | 1.08 | 74.50 | 92.24 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/deit/deit-tiny_pt-4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-tiny_pt-4xb256_in1k_20220218-13b382a0.pth) \| [log](https://download.openmmlab.com/mmclassification/v0/deit/deit-tiny_pt-4xb256_in1k_20220218-13b382a0.log.json) |

+| DeiT-tiny distilled\* | From scratch | 5.72 | 1.08 | 74.51 | 91.90 | [config](https://github.com/open-mmlab/mmclassification/tree/master/configs/deit/deit-tiny-distilled_pt-4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-tiny-distilled_3rdparty_pt-4xb256_in1k_20211216-c429839a.pth) |

+| DeiT-small | From scratch | 22.05 | 4.24 | 80.69 | 95.06 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/deit/deit-small_pt-4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-small_pt-4xb256_in1k_20220218-9425b9bb.pth) \| [log](https://download.openmmlab.com/mmclassification/v0/deit/deit-small_pt-4xb256_in1k_20220218-9425b9bb.log.json) |

+| DeiT-small distilled\* | From scratch | 22.05 | 4.24 | 81.17 | 95.40 | [config](https://github.com/open-mmlab/mmclassification/tree/master/configs/deit/deit-small-distilled_pt-4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-small-distilled_3rdparty_pt-4xb256_in1k_20211216-4de1d725.pth) |

+| DeiT-base | From scratch | 86.57 | 16.86 | 81.76 | 95.81 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/deit/deit-base_pt-16xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-base_pt-16xb64_in1k_20220216-db63c16c.pth) \| [log](https://download.openmmlab.com/mmclassification/v0/deit/deit-base_pt-16xb64_in1k_20220216-db63c16c.log.json) |

+| DeiT-base\* | From scratch | 86.57 | 16.86 | 81.79 | 95.59 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/deit/deit-base_pt-16xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-base_3rdparty_pt-16xb64_in1k_20211124-6f40c188.pth) |

+| DeiT-base distilled\* | From scratch | 86.57 | 16.86 | 83.33 | 96.49 | [config](https://github.com/open-mmlab/mmclassification/tree/master/configs/deit/deit-base-distilled_pt-16xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-base-distilled_3rdparty_pt-16xb64_in1k_20211216-42891296.pth) |

+| DeiT-base 384px\* | ImageNet-1k | 86.86 | 49.37 | 83.04 | 96.31 | [config](https://github.com/open-mmlab/mmclassification/tree/master/configs/deit/deit-base_ft-16xb32_in1k-384px.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-base_3rdparty_ft-16xb32_in1k-384px_20211124-822d02f2.pth) |

+| DeiT-base distilled 384px\* | ImageNet-1k | 86.86 | 49.37 | 85.55 | 97.35 | [config](https://github.com/open-mmlab/mmclassification/tree/master/configs/deit/deit-base-distilled_ft-16xb32_in1k-384px.py) | [model](https://download.openmmlab.com/mmclassification/v0/deit/deit-base-distilled_3rdparty_ft-16xb32_in1k-384px_20211216-e48d6000.pth) |

+

+*Models with * are converted from the [official repo](https://github.com/facebookresearch/deit). The config files of these models are only for validation. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

```{warning}

MMClassification doesn't support training the distilled version DeiT.

diff --git a/configs/densenet/README.md b/configs/densenet/README.md

index 77dfa2987d9..f07f25c9fdb 100644

--- a/configs/densenet/README.md

+++ b/configs/densenet/README.md

@@ -1,6 +1,7 @@

# DenseNet

> [Densely Connected Convolutional Networks](https://arxiv.org/abs/1608.06993)

+

## Abstract

@@ -15,15 +16,14 @@ Recent work has shown that convolutional networks can be substantially deeper, m

### ImageNet-1k

-| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

-|:---------------:|:---------:|:--------:|:---------:|:---------:|:------:|:--------:|

-| DenseNet121\* | 7.98 | 2.88 | 74.96 | 92.21 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/densenet/densenet121_4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/densenet/densenet121_4xb256_in1k_20220426-07450f99.pth) |

-| DenseNet169\* | 14.15 | 3.42 | 76.08 | 93.11 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/densenet/densenet169_4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/densenet/densenet169_4xb256_in1k_20220426-a2889902.pth) |

-| DenseNet201\* | 20.01 | 4.37 | 77.32 | 93.64 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/densenet/densenet201_4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/densenet/densenet201_4xb256_in1k_20220426-05cae4ef.pth) |

-| DenseNet161\* | 28.68 | 7.82 | 77.61 | 93.83 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/densenet/densenet161_4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/densenet/densenet161_4xb256_in1k_20220426-ee6a80a9.pth) |

-

-*Models with \* are converted from [pytorch](https://pytorch.org/vision/stable/models.html), guided by [original repo](https://github.com/liuzhuang13/DenseNet). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

+| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

+| :-----------: | :-------: | :------: | :-------: | :-------: | :-------------------------------------------------------------------------: | :---------------------------------------------------------------------------: |

+| DenseNet121\* | 7.98 | 2.88 | 74.96 | 92.21 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/densenet/densenet121_4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/densenet/densenet121_4xb256_in1k_20220426-07450f99.pth) |

+| DenseNet169\* | 14.15 | 3.42 | 76.08 | 93.11 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/densenet/densenet169_4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/densenet/densenet169_4xb256_in1k_20220426-a2889902.pth) |

+| DenseNet201\* | 20.01 | 4.37 | 77.32 | 93.64 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/densenet/densenet201_4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/densenet/densenet201_4xb256_in1k_20220426-05cae4ef.pth) |

+| DenseNet161\* | 28.68 | 7.82 | 77.61 | 93.83 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/densenet/densenet161_4xb256_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/densenet/densenet161_4xb256_in1k_20220426-ee6a80a9.pth) |

+*Models with * are converted from [pytorch](https://pytorch.org/vision/stable/models.html), guided by [original repo](https://github.com/liuzhuang13/DenseNet). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

## Citation

diff --git a/configs/efficientnet/README.md b/configs/efficientnet/README.md

index 846ff564e2b..832f5c6b2f9 100644

--- a/configs/efficientnet/README.md

+++ b/configs/efficientnet/README.md

@@ -1,6 +1,7 @@

# EfficientNet

> [Rethinking Model Scaling for Convolutional Neural Networks](https://arxiv.org/abs/1905.11946v5)

+

## Abstract

@@ -19,33 +20,33 @@ In the result table, AA means trained with AutoAugment pre-processing, more deta

Note: In MMClassification, we support training with AutoAugment, don't support AdvProp by now.

-| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

-|:---------------------:|:---------:|:--------:|:---------:|:---------:|:------:|:--------:|

-| EfficientNet-B0\* | 5.29 | 0.02 | 76.74 | 93.17 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b0_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b0_3rdparty_8xb32_in1k_20220119-a7e2a0b1.pth) |

-| EfficientNet-B0 (AA)\* | 5.29 | 0.02 | 77.26 | 93.41 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b0_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b0_3rdparty_8xb32-aa_in1k_20220119-8d939117.pth) |

-| EfficientNet-B0 (AA + AdvProp)\* | 5.29 | 0.02 | 77.53 | 93.61 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b0_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b0_3rdparty_8xb32-aa-advprop_in1k_20220119-26434485.pth) |

-| EfficientNet-B1\* | 7.79 | 0.03 | 78.68 | 94.28 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b1_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b1_3rdparty_8xb32_in1k_20220119-002556d9.pth) |

-| EfficientNet-B1 (AA)\* | 7.79 | 0.03 | 79.20 | 94.42 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b1_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b1_3rdparty_8xb32-aa_in1k_20220119-619d8ae3.pth) |

-| EfficientNet-B1 (AA + AdvProp)\* | 7.79 | 0.03 | 79.52 | 94.43 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b1_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b1_3rdparty_8xb32-aa-advprop_in1k_20220119-5715267d.pth) |

-| EfficientNet-B2\* | 9.11 | 0.03 | 79.64 | 94.80 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b2_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b2_3rdparty_8xb32_in1k_20220119-ea374a30.pth) |

-| EfficientNet-B2 (AA)\* | 9.11 | 0.03 | 80.21 | 94.96 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b2_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b2_3rdparty_8xb32-aa_in1k_20220119-dd61e80b.pth) |

-| EfficientNet-B2 (AA + AdvProp)\* | 9.11 | 0.03 | 80.45 | 95.07 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b2_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b2_3rdparty_8xb32-aa-advprop_in1k_20220119-1655338a.pth) |

-| EfficientNet-B3\* | 12.23 | 0.06 | 81.01 | 95.34 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b3_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b3_3rdparty_8xb32_in1k_20220119-4b4d7487.pth) |

-| EfficientNet-B3 (AA)\* | 12.23 | 0.06 | 81.58 | 95.67 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b3_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b3_3rdparty_8xb32-aa_in1k_20220119-5b4887a0.pth) |

-| EfficientNet-B3 (AA + AdvProp)\* | 12.23 | 0.06 | 81.81 | 95.69 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b3_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b3_3rdparty_8xb32-aa-advprop_in1k_20220119-53b41118.pth) |

-| EfficientNet-B4\* | 19.34 | 0.12 | 82.57 | 96.09 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b4_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b4_3rdparty_8xb32_in1k_20220119-81fd4077.pth) |

-| EfficientNet-B4 (AA)\* | 19.34 | 0.12 | 82.95 | 96.26 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b4_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b4_3rdparty_8xb32-aa_in1k_20220119-45b8bd2b.pth) |

-| EfficientNet-B4 (AA + AdvProp)\* | 19.34 | 0.12 | 83.25 | 96.44 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b4_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b4_3rdparty_8xb32-aa-advprop_in1k_20220119-38c2238c.pth) |

-| EfficientNet-B5\* | 30.39 | 0.24 | 83.18 | 96.47 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b5_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b5_3rdparty_8xb32_in1k_20220119-e9814430.pth) |

-| EfficientNet-B5 (AA)\* | 30.39 | 0.24 | 83.82 | 96.76 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b5_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b5_3rdparty_8xb32-aa_in1k_20220119-2cab8b78.pth) |

-| EfficientNet-B5 (AA + AdvProp)\* | 30.39 | 0.24 | 84.21 | 96.98 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b5_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b5_3rdparty_8xb32-aa-advprop_in1k_20220119-f57a895a.pth) |

-| EfficientNet-B6 (AA)\* | 43.04 | 0.41 | 84.05 | 96.82 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b6_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b6_3rdparty_8xb32-aa_in1k_20220119-45b03310.pth) |

-| EfficientNet-B6 (AA + AdvProp)\* | 43.04 | 0.41 | 84.74 | 97.14 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b6_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b6_3rdparty_8xb32-aa-advprop_in1k_20220119-bfe3485e.pth) |

-| EfficientNet-B7 (AA)\* | 66.35 | 0.72 | 84.38 | 96.88 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b7_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b7_3rdparty_8xb32-aa_in1k_20220119-bf03951c.pth) |

-| EfficientNet-B7 (AA + AdvProp)\* | 66.35 | 0.72 | 85.14 | 97.23 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b7_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b7_3rdparty_8xb32-aa-advprop_in1k_20220119-c6dbff10.pth) |

-| EfficientNet-B8 (AA + AdvProp)\* | 87.41 | 1.09 | 85.38 | 97.28 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b8_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b8_3rdparty_8xb32-aa-advprop_in1k_20220119-297ce1b7.pth) |

-

-*Models with \* are converted from the [official repo](https://github.com/tensorflow/tpu/tree/master/models/official/efficientnet). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

+| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

+| :------------------------------: | :-------: | :------: | :-------: | :-------: | :---------------------------------------------------------------: | :------------------------------------------------------------------: |

+| EfficientNet-B0\* | 5.29 | 0.02 | 76.74 | 93.17 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b0_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b0_3rdparty_8xb32_in1k_20220119-a7e2a0b1.pth) |

+| EfficientNet-B0 (AA)\* | 5.29 | 0.02 | 77.26 | 93.41 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b0_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b0_3rdparty_8xb32-aa_in1k_20220119-8d939117.pth) |

+| EfficientNet-B0 (AA + AdvProp)\* | 5.29 | 0.02 | 77.53 | 93.61 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b0_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b0_3rdparty_8xb32-aa-advprop_in1k_20220119-26434485.pth) |

+| EfficientNet-B1\* | 7.79 | 0.03 | 78.68 | 94.28 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b1_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b1_3rdparty_8xb32_in1k_20220119-002556d9.pth) |

+| EfficientNet-B1 (AA)\* | 7.79 | 0.03 | 79.20 | 94.42 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b1_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b1_3rdparty_8xb32-aa_in1k_20220119-619d8ae3.pth) |

+| EfficientNet-B1 (AA + AdvProp)\* | 7.79 | 0.03 | 79.52 | 94.43 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b1_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b1_3rdparty_8xb32-aa-advprop_in1k_20220119-5715267d.pth) |

+| EfficientNet-B2\* | 9.11 | 0.03 | 79.64 | 94.80 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b2_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b2_3rdparty_8xb32_in1k_20220119-ea374a30.pth) |

+| EfficientNet-B2 (AA)\* | 9.11 | 0.03 | 80.21 | 94.96 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b2_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b2_3rdparty_8xb32-aa_in1k_20220119-dd61e80b.pth) |

+| EfficientNet-B2 (AA + AdvProp)\* | 9.11 | 0.03 | 80.45 | 95.07 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b2_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b2_3rdparty_8xb32-aa-advprop_in1k_20220119-1655338a.pth) |

+| EfficientNet-B3\* | 12.23 | 0.06 | 81.01 | 95.34 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b3_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b3_3rdparty_8xb32_in1k_20220119-4b4d7487.pth) |

+| EfficientNet-B3 (AA)\* | 12.23 | 0.06 | 81.58 | 95.67 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b3_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b3_3rdparty_8xb32-aa_in1k_20220119-5b4887a0.pth) |

+| EfficientNet-B3 (AA + AdvProp)\* | 12.23 | 0.06 | 81.81 | 95.69 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b3_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b3_3rdparty_8xb32-aa-advprop_in1k_20220119-53b41118.pth) |

+| EfficientNet-B4\* | 19.34 | 0.12 | 82.57 | 96.09 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b4_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b4_3rdparty_8xb32_in1k_20220119-81fd4077.pth) |

+| EfficientNet-B4 (AA)\* | 19.34 | 0.12 | 82.95 | 96.26 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b4_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b4_3rdparty_8xb32-aa_in1k_20220119-45b8bd2b.pth) |

+| EfficientNet-B4 (AA + AdvProp)\* | 19.34 | 0.12 | 83.25 | 96.44 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b4_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b4_3rdparty_8xb32-aa-advprop_in1k_20220119-38c2238c.pth) |

+| EfficientNet-B5\* | 30.39 | 0.24 | 83.18 | 96.47 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b5_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b5_3rdparty_8xb32_in1k_20220119-e9814430.pth) |

+| EfficientNet-B5 (AA)\* | 30.39 | 0.24 | 83.82 | 96.76 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b5_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b5_3rdparty_8xb32-aa_in1k_20220119-2cab8b78.pth) |

+| EfficientNet-B5 (AA + AdvProp)\* | 30.39 | 0.24 | 84.21 | 96.98 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b5_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b5_3rdparty_8xb32-aa-advprop_in1k_20220119-f57a895a.pth) |

+| EfficientNet-B6 (AA)\* | 43.04 | 0.41 | 84.05 | 96.82 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b6_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b6_3rdparty_8xb32-aa_in1k_20220119-45b03310.pth) |

+| EfficientNet-B6 (AA + AdvProp)\* | 43.04 | 0.41 | 84.74 | 97.14 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b6_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b6_3rdparty_8xb32-aa-advprop_in1k_20220119-bfe3485e.pth) |

+| EfficientNet-B7 (AA)\* | 66.35 | 0.72 | 84.38 | 96.88 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b7_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b7_3rdparty_8xb32-aa_in1k_20220119-bf03951c.pth) |

+| EfficientNet-B7 (AA + AdvProp)\* | 66.35 | 0.72 | 85.14 | 97.23 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b7_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b7_3rdparty_8xb32-aa-advprop_in1k_20220119-c6dbff10.pth) |

+| EfficientNet-B8 (AA + AdvProp)\* | 87.41 | 1.09 | 85.38 | 97.28 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/efficientnet/efficientnet-b8_8xb32-01norm_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/efficientnet/efficientnet-b8_3rdparty_8xb32-aa-advprop_in1k_20220119-297ce1b7.pth) |

+

+*Models with * are converted from the [official repo](https://github.com/tensorflow/tpu/tree/master/models/official/efficientnet). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

## Citation

diff --git a/configs/hrnet/README.md b/configs/hrnet/README.md

index e3144cc4a23..0a30ccd16d4 100644

--- a/configs/hrnet/README.md

+++ b/configs/hrnet/README.md

@@ -1,6 +1,7 @@

# HRNet

> [Deep High-Resolution Representation Learning for Visual Recognition](https://arxiv.org/abs/1908.07919v2)

+

## Abstract

@@ -15,19 +16,19 @@ High-resolution representations are essential for position-sensitive vision prob

## ImageNet-1k

-| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

-|:---------------------:|:---------:|:--------:|:---------:|:---------:|:------:|:--------:|

-| HRNet-W18\* | 21.30 | 4.33 | 76.75 | 93.44 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w18_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w18_3rdparty_8xb32_in1k_20220120-0c10b180.pth) |

-| HRNet-W30\* | 37.71 | 8.17 | 78.19 | 94.22 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w30_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w30_3rdparty_8xb32_in1k_20220120-8aa3832f.pth) |

-| HRNet-W32\* | 41.23 | 8.99 | 78.44 | 94.19 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w32_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w32_3rdparty_8xb32_in1k_20220120-c394f1ab.pth) |

-| HRNet-W40\* | 57.55 | 12.77 | 78.94 | 94.47 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w40_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w40_3rdparty_8xb32_in1k_20220120-9a2dbfc5.pth) |

-| HRNet-W44\* | 67.06 | 14.96 | 78.88 | 94.37 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w44_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w44_3rdparty_8xb32_in1k_20220120-35d07f73.pth) |

-| HRNet-W48\* | 77.47 | 17.36 | 79.32 | 94.52 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w48_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w48_3rdparty_8xb32_in1k_20220120-e555ef50.pth) |

-| HRNet-W64\* | 128.06 | 29.00 | 79.46 | 94.65 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w64_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w64_3rdparty_8xb32_in1k_20220120-19126642.pth) |

-| HRNet-W18 (ssld)\* | 21.30 | 4.33 | 81.06 | 95.70 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w18_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w18_3rdparty_8xb32-ssld_in1k_20220120-455f69ea.pth) |

-| HRNet-W48 (ssld)\* | 77.47 | 17.36 | 83.63 | 96.79 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w48_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w48_3rdparty_8xb32-ssld_in1k_20220120-d0459c38.pth) |

-

-*Models with \* are converted from the [official repo](https://github.com/HRNet/HRNet-Image-Classification). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

+| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

+| :----------------: | :-------: | :------: | :-------: | :-------: | :----------------------------------------------------------------------: | :-------------------------------------------------------------------------: |

+| HRNet-W18\* | 21.30 | 4.33 | 76.75 | 93.44 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w18_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w18_3rdparty_8xb32_in1k_20220120-0c10b180.pth) |

+| HRNet-W30\* | 37.71 | 8.17 | 78.19 | 94.22 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w30_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w30_3rdparty_8xb32_in1k_20220120-8aa3832f.pth) |

+| HRNet-W32\* | 41.23 | 8.99 | 78.44 | 94.19 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w32_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w32_3rdparty_8xb32_in1k_20220120-c394f1ab.pth) |

+| HRNet-W40\* | 57.55 | 12.77 | 78.94 | 94.47 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w40_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w40_3rdparty_8xb32_in1k_20220120-9a2dbfc5.pth) |

+| HRNet-W44\* | 67.06 | 14.96 | 78.88 | 94.37 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w44_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w44_3rdparty_8xb32_in1k_20220120-35d07f73.pth) |

+| HRNet-W48\* | 77.47 | 17.36 | 79.32 | 94.52 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w48_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w48_3rdparty_8xb32_in1k_20220120-e555ef50.pth) |

+| HRNet-W64\* | 128.06 | 29.00 | 79.46 | 94.65 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w64_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w64_3rdparty_8xb32_in1k_20220120-19126642.pth) |

+| HRNet-W18 (ssld)\* | 21.30 | 4.33 | 81.06 | 95.70 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w18_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w18_3rdparty_8xb32-ssld_in1k_20220120-455f69ea.pth) |

+| HRNet-W48 (ssld)\* | 77.47 | 17.36 | 83.63 | 96.79 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/hrnet/hrnet-w48_4xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/hrnet/hrnet-w48_3rdparty_8xb32-ssld_in1k_20220120-d0459c38.pth) |

+

+*Models with * are converted from the [official repo](https://github.com/HRNet/HRNet-Image-Classification). The config files of these models are only for inference. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

## Citation

diff --git a/configs/lenet/README.md b/configs/lenet/README.md

index 241bedab173..2cd68eac42e 100644

--- a/configs/lenet/README.md

+++ b/configs/lenet/README.md

@@ -1,6 +1,7 @@

# LeNet

> [Backpropagation Applied to Handwritten Zip Code Recognition](https://ieeexplore.ieee.org/document/6795724)

+

## Abstract

diff --git a/configs/mlp_mixer/README.md b/configs/mlp_mixer/README.md

index dc8866e8447..5ec98871b6d 100644

--- a/configs/mlp_mixer/README.md

+++ b/configs/mlp_mixer/README.md

@@ -1,6 +1,7 @@

# Mlp-Mixer

> [MLP-Mixer: An all-MLP Architecture for Vision](https://arxiv.org/abs/2105.01601)

+

## Abstract

@@ -15,12 +16,12 @@ Convolutional Neural Networks (CNNs) are the go-to model for computer vision. Re

### ImageNet-1k

-| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

-|:--------------:|:---------:|:--------:|:---------:|:---------:|:------:|:--------:|

-| Mixer-B/16\* | 59.88 | 12.61 | 76.68 | 92.25 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/mlp_mixer/mlp-mixer-base-p16_64xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/mlp-mixer/mixer-base-p16_3rdparty_64xb64_in1k_20211124-1377e3e0.pth) |

-| Mixer-L/16\* | 208.2 | 44.57 | 72.34 | 88.02 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/mlp_mixer/mlp-mixer-large-p16_64xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/mlp-mixer/mixer-large-p16_3rdparty_64xb64_in1k_20211124-5a2519d2.pth) |

+| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

+| :----------: | :-------: | :------: | :-------: | :-------: | :-------------------------------------------------------------------------: | :----------------------------------------------------------------------------: |

+| Mixer-B/16\* | 59.88 | 12.61 | 76.68 | 92.25 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/mlp_mixer/mlp-mixer-base-p16_64xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/mlp-mixer/mixer-base-p16_3rdparty_64xb64_in1k_20211124-1377e3e0.pth) |

+| Mixer-L/16\* | 208.2 | 44.57 | 72.34 | 88.02 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/mlp_mixer/mlp-mixer-large-p16_64xb64_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/mlp-mixer/mixer-large-p16_3rdparty_64xb64_in1k_20211124-5a2519d2.pth) |

-*Models with \* are converted from [timm](https://github.com/rwightman/pytorch-image-models/blob/master/timm/models/mlp_mixer.py). The config files of these models are only for validation. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

+*Models with * are converted from [timm](https://github.com/rwightman/pytorch-image-models/blob/master/timm/models/mlp_mixer.py). The config files of these models are only for validation. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

## Citation

diff --git a/configs/mobilenet_v2/README.md b/configs/mobilenet_v2/README.md

index 9a0cd8a6549..675c8dd4d43 100644

--- a/configs/mobilenet_v2/README.md

+++ b/configs/mobilenet_v2/README.md

@@ -1,6 +1,7 @@

# MobileNet V2

> [MobileNetV2: Inverted Residuals and Linear Bottlenecks](https://arxiv.org/abs/1801.04381)

+

## Abstract

@@ -17,9 +18,9 @@ The MobileNetV2 architecture is based on an inverted residual structure where th

### ImageNet-1k

-| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

-|:---------------------:|:---------:|:--------:|:---------:|:---------:|:---------:|:--------:|

-| MobileNet V2 | 3.5 | 0.319 | 71.86 | 90.42 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/mobilenet_v2/mobilenet-v2_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/mobilenet_v2/mobilenet_v2_batch256_imagenet_20200708-3b2dc3af.pth) | [log](https://download.openmmlab.com/mmclassification/v0/mobilenet_v2/mobilenet_v2_batch256_imagenet_20200708-3b2dc3af.log.json) |

+| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

+| :----------: | :-------: | :------: | :-------: | :-------: | :-------------------------------------------------------------------------: | :----------------------------------------------------------------------------: |

+| MobileNet V2 | 3.5 | 0.319 | 71.86 | 90.42 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/mobilenet_v2/mobilenet-v2_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/mobilenet_v2/mobilenet_v2_batch256_imagenet_20200708-3b2dc3af.pth) \| [log](https://download.openmmlab.com/mmclassification/v0/mobilenet_v2/mobilenet_v2_batch256_imagenet_20200708-3b2dc3af.log.json) |

## Citation

diff --git a/configs/mobilenet_v3/README.md b/configs/mobilenet_v3/README.md

index 36392b91c3b..737c4d32ec0 100644

--- a/configs/mobilenet_v3/README.md

+++ b/configs/mobilenet_v3/README.md

@@ -1,11 +1,12 @@

# MobileNet V3

> [Searching for MobileNetV3](https://arxiv.org/abs/1905.02244)

+

## Abstract

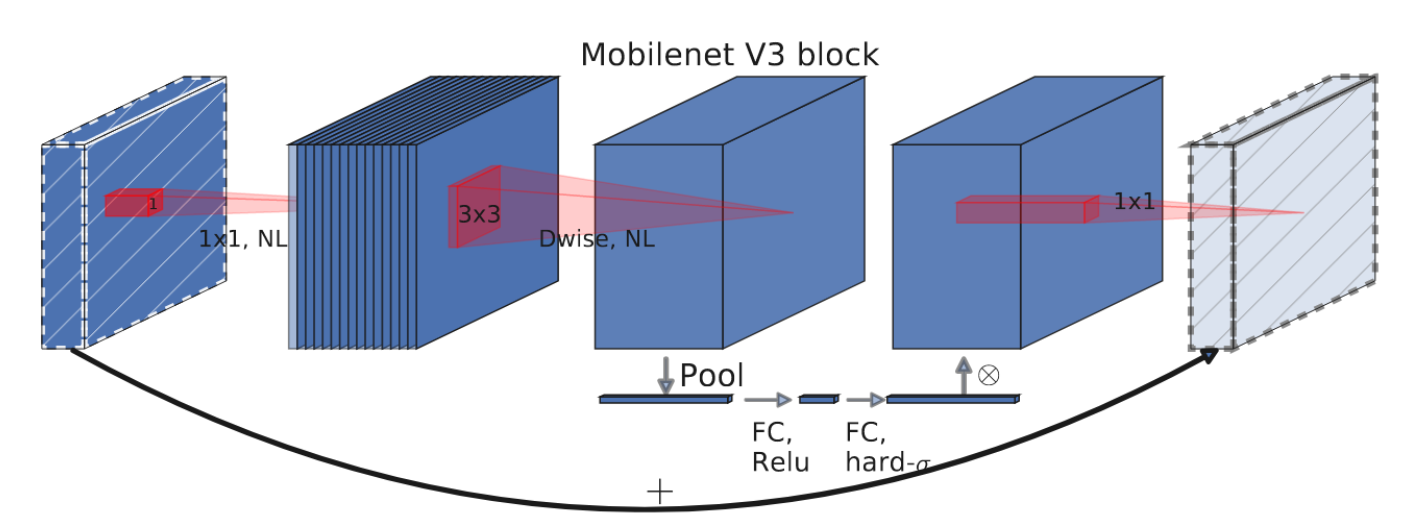

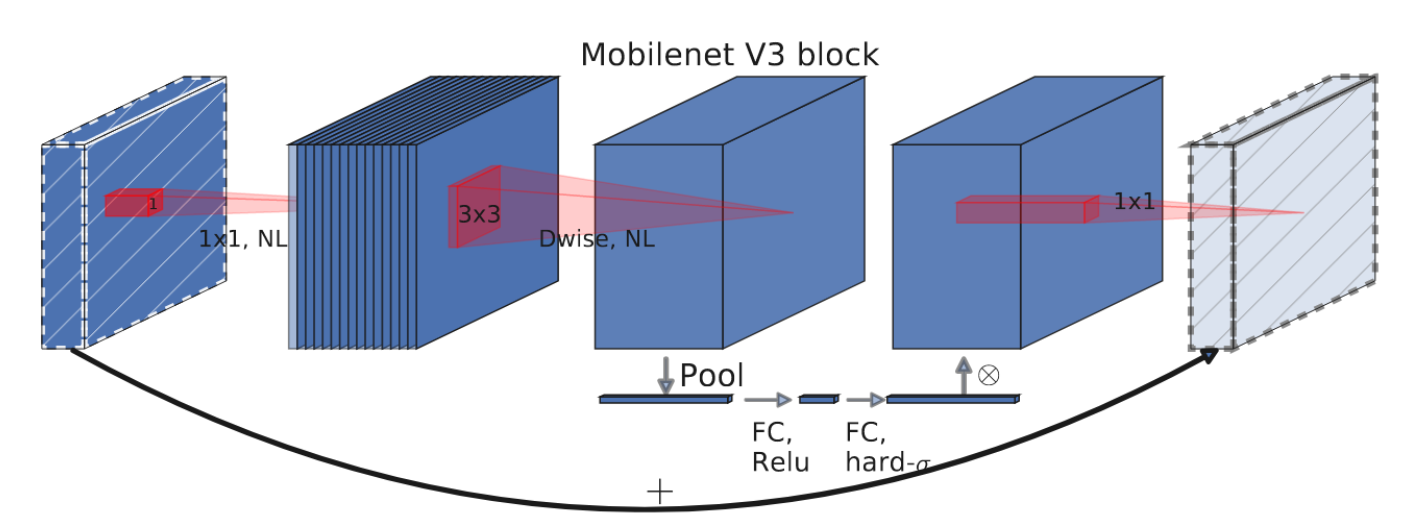

-We present the next generation of MobileNets based on a combination of complementary search techniques as well as a novel architecture design. MobileNetV3 is tuned to mobile phone CPUs through a combination of hardware-aware network architecture search (NAS) complemented by the NetAdapt algorithm and then subsequently improved through novel architecture advances. This paper starts the exploration of how automated search algorithms and network design can work together to harness complementary approaches improving the overall state of the art. Through this process we create two new MobileNet models for release: MobileNetV3-Large and MobileNetV3-Small which are targeted for high and low resource use cases. These models are then adapted and applied to the tasks of object detection and semantic segmentation. For the task of semantic segmentation (or any dense pixel prediction), we propose a new efficient segmentation decoder Lite Reduced Atrous Spatial Pyramid Pooling (LR-ASPP). We achieve new state of the art results for mobile classification, detection and segmentation. MobileNetV3-Large is 3.2\% more accurate on ImageNet classification while reducing latency by 15\% compared to MobileNetV2. MobileNetV3-Small is 4.6\% more accurate while reducing latency by 5\% compared to MobileNetV2. MobileNetV3-Large detection is 25\% faster at roughly the same accuracy as MobileNetV2 on COCO detection. MobileNetV3-Large LR-ASPP is 30\% faster than MobileNetV2 R-ASPP at similar accuracy for Cityscapes segmentation.

+We present the next generation of MobileNets based on a combination of complementary search techniques as well as a novel architecture design. MobileNetV3 is tuned to mobile phone CPUs through a combination of hardware-aware network architecture search (NAS) complemented by the NetAdapt algorithm and then subsequently improved through novel architecture advances. This paper starts the exploration of how automated search algorithms and network design can work together to harness complementary approaches improving the overall state of the art. Through this process we create two new MobileNet models for release: MobileNetV3-Large and MobileNetV3-Small which are targeted for high and low resource use cases. These models are then adapted and applied to the tasks of object detection and semantic segmentation. For the task of semantic segmentation (or any dense pixel prediction), we propose a new efficient segmentation decoder Lite Reduced Atrous Spatial Pyramid Pooling (LR-ASPP). We achieve new state of the art results for mobile classification, detection and segmentation. MobileNetV3-Large is 3.2% more accurate on ImageNet classification while reducing latency by 15% compared to MobileNetV2. MobileNetV3-Small is 4.6% more accurate while reducing latency by 5% compared to MobileNetV2. MobileNetV3-Large detection is 25% faster at roughly the same accuracy as MobileNetV2 on COCO detection. MobileNetV3-Large LR-ASPP is 30% faster than MobileNetV2 R-ASPP at similar accuracy for Cityscapes segmentation.

@@ -15,12 +16,12 @@ We present the next generation of MobileNets based on a combination of complemen

### ImageNet-1k

-| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

-|:---------------------:|:---------:|:--------:|:---------:|:---------:|:------:|:--------:|

-| MobileNetV3-Small\* | 2.54 | 0.06 | 67.66 | 87.41 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/mobilenet_v3/mobilenet-v3-small_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/mobilenet_v3/convert/mobilenet_v3_small-8427ecf0.pth) |

-| MobileNetV3-Large\* | 5.48 | 0.23 | 74.04 | 91.34 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/mobilenet_v3/mobilenet-v3-large_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/mobilenet_v3/convert/mobilenet_v3_large-3ea3c186.pth) |

+| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

+| :-----------------: | :-------: | :------: | :-------: | :-------: | :----------------------------------------------------------------------: | :------------------------------------------------------------------------: |

+| MobileNetV3-Small\* | 2.54 | 0.06 | 67.66 | 87.41 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/mobilenet_v3/mobilenet-v3-small_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/mobilenet_v3/convert/mobilenet_v3_small-8427ecf0.pth) |

+| MobileNetV3-Large\* | 5.48 | 0.23 | 74.04 | 91.34 | [config](https://github.com/open-mmlab/mmclassification/blob/master/configs/mobilenet_v3/mobilenet-v3-large_8xb32_in1k.py) | [model](https://download.openmmlab.com/mmclassification/v0/mobilenet_v3/convert/mobilenet_v3_large-3ea3c186.pth) |

-*Models with \* are converted from [torchvision](https://pytorch.org/vision/stable/_modules/torchvision/models/mobilenetv3.html). The config files of these models are only for validation. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

+*Models with * are converted from [torchvision](https://pytorch.org/vision/stable/_modules/torchvision/models/mobilenetv3.html). The config files of these models are only for validation. We don't ensure these config files' training accuracy and welcome you to contribute your reproduction results.*

## Citation

diff --git a/configs/poolformer/README.md b/configs/poolformer/README.md

index ed1a0664060..cc557e107df 100644

--- a/configs/poolformer/README.md

+++ b/configs/poolformer/README.md

@@ -1,6 +1,7 @@

# PoolFormer

> [MetaFormer is Actually What You Need for Vision](https://arxiv.org/abs/2111.11418)

+

## Abstract

@@ -15,15 +16,15 @@ Transformers have shown great potential in computer vision tasks. A common belie

### ImageNet-1k

-| Model | Params(M) | Flops(G) | Top-1 (%) | Top-5 (%) | Config | Download |

-|:---------------------:|:---------:|:--------:|:---------:|:---------:|:------:|:--------:|