The ongoing consolidation in AI is incredible. Thread: When I started ~a decade ago, vision, speech, natural language, reinforcement learning, etc. were completely separate. You couldn't read papers across areas - the approaches were completely different, often not even ML based. In the 2010s all of these areas started to transition 1) to machine learning and specifically 2) to neural nets. The architectures were diverse but at least the papers started to read more similarly, all of them utilizing large datasets and optimizing neural nets.

But as of approx. the last two years, even the neural net architectures across all areas are starting to look identical - a Transformer (definable in ~200 lines of PyTorch https://github.com/karpathy/minGPT/blob/master/mingpt/model.py), with very minor differences. Either as a strong baseline or (often) state of the art. You can feed it sequences of words. Or sequences of image patches. Or sequences of speech pieces. Or sequences of (state, action, reward) in reinforcement learning. You can throw arbitrary other tokens into the conditioning set - an extremely simple/flexible modeling framework. Even within areas (like vision), there used to be some differences in how you do classification, segmentation, detection, generation, but all of these are also being converted to the same framework. E.g. for detection, take a sequence of patches, output a sequence of bounding boxes. The distinguishing features now mostly include 1) the data, 2) the Input/Output spec that maps your problem into and out of a sequence of vectors, and sometimes 3) the type of positional encoder and the problem-specific structured sparsity pattern in the attention mask.
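
To make the "Input/Output spec" idea concrete, here is a minimal sketch (not taken from the thread or from minGPT) of turning an image into a sequence of patch vectors, plus a causal mask as one simple example of a structured attention-mask pattern. The function names `image_to_patch_sequence` and `causal_mask` are hypothetical, and plain Python lists stand in for tensors.

```python
def image_to_patch_sequence(image, patch):
    """Split an H x W image (a list of rows) into a row-major sequence of
    flattened patch x patch blocks. Each block becomes one token vector."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be divisible by the patch size")
    sequence = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            # Flatten one block into a single vector (assumed token layout).
            token = [image[top + dy][left + dx]
                     for dy in range(patch)
                     for dx in range(patch)]
            sequence.append(token)
    return sequence


def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i).
    This is only one illustrative sparsity pattern among many."""
    return [[j <= i for j in range(n)] for i in range(n)]


# A toy 4 x 4 "image" whose pixel values are just their row-major index.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
tokens = image_to_patch_sequence(image, 2)
mask = causal_mask(len(tokens))
```

The same model could then consume `tokens` whether they came from words, patches, or (state, action, reward) triples; only this mapping step and the mask would change.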

So even though I'm technically in vision, papers, people and ideas across all of AI are suddenly extremely relevant. Everyone is working with essentially the same model, so most improvements and ideas can "copy paste" rapidly across all of AI. As many others have noticed and pointed out, the neocortex has a highly uniform architecture too across all of its input modalities. Perhaps nature has stumbled upon a very similar powerful architecture and replicated it in a similar fashion, varying only some of the details. This consolidation in architecture will in turn focus and concentrate software, hardware, and infrastructure, further speeding up progress across AI. Maybe this should have been a blog post. Anyway, exciting times.

reference: https://twitter.com/karpathy/status/1468370605229547522?s=20

-

Full playlist here: https://youtube.com/playlist?list=PL9_jI1bdZmz0hIrNCMQW1YmZysAiIYSSS

Slides: https://brickisland.net/DDGSpring2021/2021/04/20/lecture-18-the-laplace-beltrami-operator/

Course webpage with notes, coding assignments, etc.: http://geometry.cs.cmu.edu/ddg

reference: https://twitter.com/keenanisalive/status/1466046196858953728?s=20

-

Check out the SIGGRAPH Frontiers event "A Conversation With..." this Fri/Sat!

A series of chats focused on Computer Graphics and Interactive Techniques.

Registration link: https://bit.ly/sa2021cw

reference: https://twitter.com/jbhuang0604/status/1466065916572151810?s=20

-

How to backpropagate through an algorithm? Seems crazy, but this paper shows it's actually possible for a large class of algorithms, such as k-subset, ILP, and many graph algorithms. Watch my (amateur) attempt at an explanation here: https://www.youtube.com/watch?v=W2UT8NjUqrk&ab_channel=YannicKilcher

-

Diffusion Autoencoders: Toward a Meaningful and Decodable Representation abs: https://arxiv.org/abs/2111.15640 project page: https://diff-ae.github.io

can encode any image into a two-part latent code: the first part is semantically meaningful and linear, the second part captures stochastic details

reference: https://twitter.com/ak92501/status/1465899503928549386?s=20

-

Excited to share two papers appearing at #SIGGRAPHAsia2021, on "Repulsive Curves" and "Repulsive Surfaces". Tons of graphics algorithms find nice distributions of points by minimizing a "repulsive" energy. But what if you need to nicely distribute curves or surfaces?

We take a deep dive into this question, building fast algorithms for optimizing the recently developed "tangent-point energy" from surface theory. Talk video: https://youtube.com/watch?v=dtYGiCpzzbA Repulsive Curves: http://cs.cmu.edu/~kmcrane/Projects/RepulsiveCurves/index.html Repulsive Surfaces: http://cs.cmu.edu/~kmcrane/Projects/RepulsiveSurfaces/index.html

The basic problem? Given a collection of points, curves, or surfaces in space, find a well-distributed arrangement that avoids (self-)intersection. To make things interesting, you also usually have some constraints—like: the geometry is contained inside a bunny!

Why would you want to do this? Well, point repulsion is already used in every major area of computer graphics: from image stippling to mesh generation to density estimation to fluid simulation! But surprisingly little work has been done on repulsive curves & surfaces…

And yet there are all sorts of things you can do with higher-dimensional repulsion—from better graph layouts, to generative modeling, to robotic path planning, to intersection-free modeling & illustration, to artificial tissue design, to unraveling mathematical mysteries! Repulsion goes far beyond (literally) traditional collision-aware design & modeling: rather than slamming on the brakes right before the moment of impact, repulsive optimization is all about finding a harmonious global balance of forces, long before collisions occur. Imagine, for instance, playing a game of pool with charged particles rather than rigid bodies. In contrast to collision, where you can aggressively prune away distant barrier functions, the whole point of repulsive optimization is to get long-range forces into equilibrium. Beyond just dealing with O(n²) interactions, repulsive energies lead to some rich & interesting challenges. For one thing, repulsive energies for points don't nicely generalize to curves & surfaces. For another, you have to deal w/ optimization of "fractional derivatives."
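
For intuition about the point case and its O(n²) interactions, here is a minimal sketch of point repulsion by plain gradient descent. It uses a simple inverse-power energy chosen for illustration; it is not the tangent-point energy from the papers, and it has none of their acceleration. The names `repulsive_energy` and `descent_step` and the default `step` and `power` values are assumptions.

```python
import math


def repulsive_energy(points, power=1.0):
    """Sum of 1 / distance**power over all unordered pairs: O(n^2) terms."""
    energy = 0.0
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            energy += 1.0 / math.dist(points[i], points[j]) ** power
    return energy


def descent_step(points, step=0.01, power=1.0):
    """One gradient-descent step; every point is pushed away from all others."""
    moved = []
    for i, p in enumerate(points):
        grad = [0.0] * len(p)
        for j, q in enumerate(points):
            if i == j:
                continue
            d = math.dist(p, q)
            # Gradient of d**(-power) w.r.t. p is -power * (p - q) / d**(power + 2).
            for k in range(len(p)):
                grad[k] += -power * (p[k] - q[k]) / d ** (power + 2)
        moved.append([p[k] - step * grad[k] for k in range(len(p))])
    return moved


points = [[0.0, 0.0], [1.0, 0.0], [0.5, 0.1]]
before = repulsive_energy(points)
after = repulsive_energy(descent_step(points))
```

Without constraints the points simply drift apart forever, which is why the thread stresses constraints such as containing the geometry inside a bunny.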

…Rather than regurgitate everything here on Twitter, I'll point again to this talk: https://youtube.com/watch?v=dtYGiCpzzbA Since I know it's easy to get lost in the math & switch off the video, I gave each section a level of difficulty. Don't feel bad about skipping to the fun stuff! You might also be interested in checking out this longer Twitter thread, which also goes deeper into the details for curves: https://twitter.com/keenanisalive/status/1422318272800829440 But the generalization to repulsive surfaces lets us do some really beautiful things like never before. To end with just one cool example, consider this pair of handcuffs in a "linked" and "unlinked" configuration. Do you think it's possible to unlink the handcuffs, without breaking them apart or letting them pass through each other?

If you said "yes," you were right (hey, you had a 50% chance!) But can you show me how? There are lots of beautiful drawings of this counter-intuitive transition—but unless you have a good geometric imagination, they can be pretty hard to follow.

However, we can use repulsive optimization to make this remarkable topological phenomenon come to life. Just minimize tangent-point energy starting in both linked and unlinked configurations, then join the two movies together (the 2nd one playing backwards). Voilà!

The paper/videos have lots of other examples of new & different things you can do with repulsive shape optimization. But the real limitation is our own creativity! I'd love to hear what ideas this sparks for you, and what problems, challenges, & creations it leads to…

reference: https://twitter.com/keenanisalive/status/1468011750608056324?s=20

-

“How I make machines see the 3D world” with Daniel Cremers (TEDxTUM)

reference: https://twitter.com/CSProfKGD/status/1467252802921668608?s=20

-

Excited to share our new @NVIDIAAI #NeurIPS2021 work DIB-R++. We jointly predict 3D shape, texture, lighting as well as material from monocular photographs!

reference: https://twitter.com/ChenWenzheng/status/1468414252868382720?s=20

-

Upcoming IJCV survey paper on Intrinsic Images! Although the Lambertian assumption is still the basis for many methods, we show how deep learning methods are getting better at estimating physically-correct components of the image formation process.

http://elenagarces.es/projects/SurveyIntrinsicImages

We provide a complete taxonomy of single-image inverse appearance reconstruction methods, explicitly disentangling intrinsic decomposition and inverse rendering methods.

We include an in-depth categorization and description of the different learning strategies used by deep learning-based methods, paying special attention to their assumptions and constraints. We show that current quantitative metrics for intrinsic decomposition lack well-established benchmarks to ease comparisons: smaller error values don't correlate with a more physically-accurate decomposition; similar error values don't necessarily imply similar decomposition. To sum up, and in light of the recent advances in neural rendering and differentiable rendering, we believe that intrinsic decomposition would greatly benefit from a physically-based (i.e., inverse rendering) perspective. Project strongly led by Elena Garces (@ele_g2), in collaboration with Carlos Rodriguez-Pardo (@Crp94) and Jorge Lopez-Moreno.

reference: https://twitter.com/dancasas/status/1469618646926381056?s=20

-

InvGAN: Invertible GANs

abs: https://arxiv.org/abs/2112.04598

Beyond the computational advantage of model-based inversion, the mechanism can reconstruct images that are larger than the training images by tiling, with no additional merging needed.

- Michael Black: Learning to invert a GAN is useful to efficiently embed a real image in the latent space. But this can also reveal limitations of the GAN in capturing the distribution of real images. Work with @partha_ghosh0 and Sonny Hu @AmazonScience.

reference: https://twitter.com/ak92501/status/1469148220454539267?s=20

-

Introducing our #NeurIPS2021 paper: "SAPE"! An effective policy for incorporating "positional encodings" in coordinate-based #neuralnetworks.

Project page: https://amirhertz.github.io/sape/ Paper: https://arxiv.org/abs/2104.09125
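
For readers new to the term, here is a minimal sketch of the common fixed-frequency sine/cosine positional encoding often used in coordinate-based networks. It is background only and not SAPE's policy, which the tweet does not describe. The function name `positional_encoding` and the power-of-two frequency schedule are assumptions.

```python
import math


def positional_encoding(x, num_frequencies):
    """Map a scalar coordinate x to [sin(2^k * pi * x), cos(2^k * pi * x)]
    for k = 0 .. num_frequencies - 1 (an assumed, commonly used schedule)."""
    features = []
    for k in range(num_frequencies):
        angle = (2.0 ** k) * math.pi * x
        features.append(math.sin(angle))
        features.append(math.cos(angle))
    return features


# A 2D coordinate is encoded per axis and concatenated before entering the MLP.
encoded = positional_encoding(0.25, 4) + positional_encoding(0.75, 4)
```

Higher frequencies let the network fit fine detail, and choosing how many to use, and where, is the kind of decision an encoding policy has to make.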

-

Check out our #3DV2021 paper on Event-based Structured Light! We combine an #eventcamera with a laser-point projector to allow accurate depth estimation in highly dynamic scenes! Paper, Code, Data: http://rpg.ifi.uzh.ch/esl.html Kudos to @ManasiMuglikar @guillermogb @Prophesee_ai

-

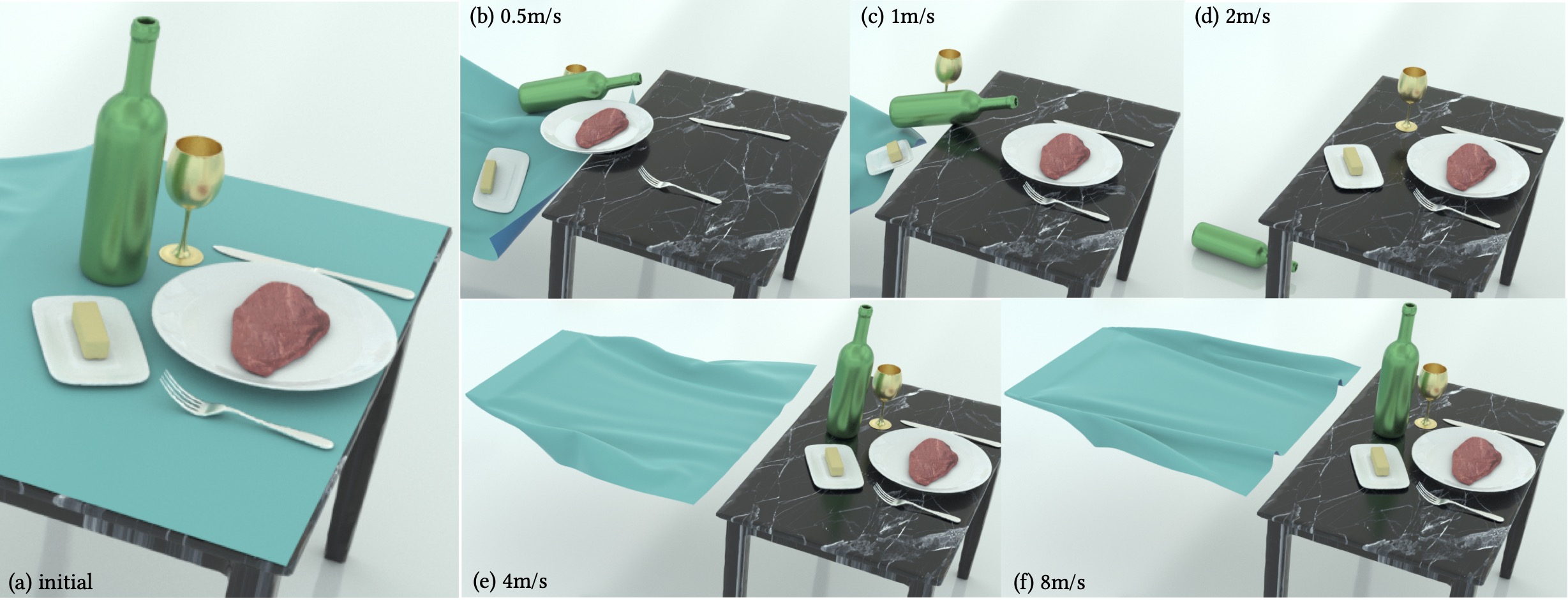

Simulating The Famous Table Cloth Pull! Full video (ours): https://youtu.be/KZhoU_3k0Nk Source paper: https://ipc-sim.github.io/C-IPC/ #gamedev #twominutepapers #whatatimetobealive #holdontoyourpapers

-

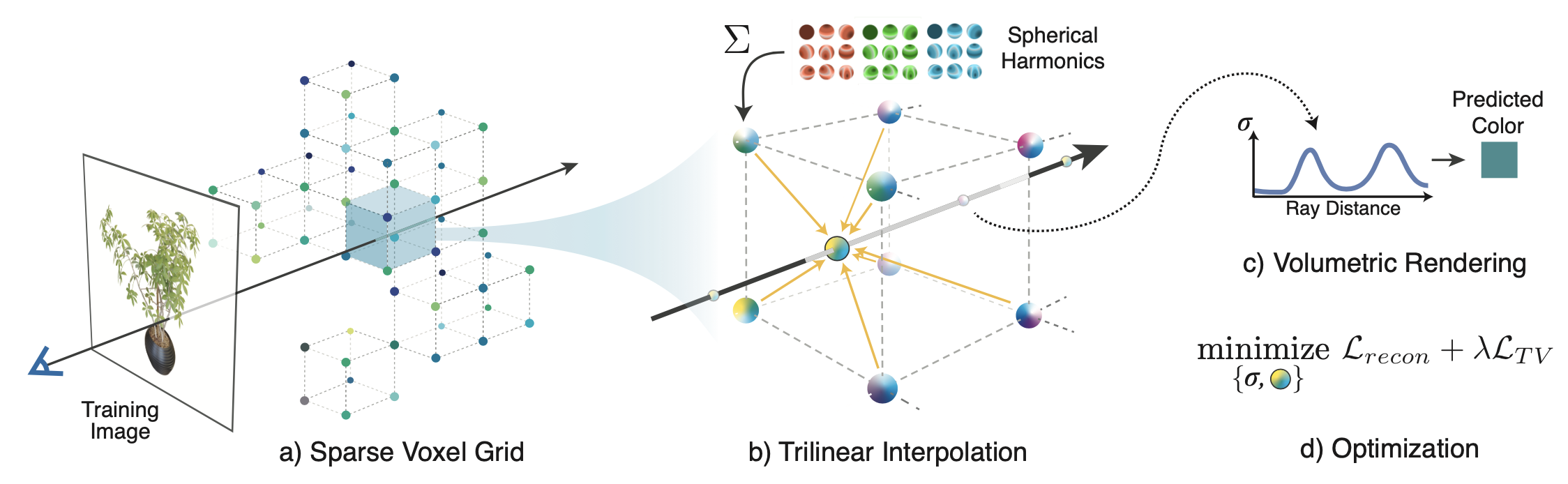

abs: https://arxiv.org/abs/2112.05131 project page: https://alexyu.net/plenoxels/

propose a view-dependent sparse voxel model, Plenoxel, that can optimize to the same fidelity as NeRFs without any neural networks

-

Super excited to share our #immersiveanalytics paper "Labeling Out-of-View Objects in Immersive Analytics to Support Situated Visual Searching" with my amazing co-authors @yalong_yang, Johanna Beyer, @hpfister.

The paper will be published in @ieee_tvcg! https://arxiv.org/abs/2112.03354