
WebSocket performance issue with varying loads #711

Comments

|

@haraldsteinlechner thanks for taking the time to file this bug report; I'll get to it as soon as I get back to civilization (in a couple of days) as I'm currently traveling with limited internet access. I think we will find the source of contention in the buffer manager. |

|

just a quick update on problem 1: we investigated further and rewrote suave internals in order to use the new i started a discussion here https://gitter.im/dotnet/coreclr although i'm not sure this is the right place. |

|

I have been playing with your POC for a few days now, and here is what I have found so far. With regard to Problem 2

I do not see exactly this. Answering 32 clients takes about 4.5 seconds, but the time then stays pretty linear as the number of clients increases. The reason for this does not seem to reside within Suave; as far as I can see the time is spent waiting for As to Problem 1, I have managed to get rid of the long round trip in my tree, and I am now figuring out which one of my changes did it. I'll get back to you on this. |

|

cool. hope you can find out problem 1 :) |

|

OK, the source of problem 1, the stuttering, is in the actual POC. Changing the following line in the definition of observer. by makes the periodic long round-trips go away (except for the first one). That makes sense, because Thread.Sleep actually blocks a thread. Does that work for you? |
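
To illustrate the blocking-sleep point above, here is a minimal sketch in Python rather than F#. It is an analogy only, not the POC code: `heartbeat`, `blocking_observer` and `cooperative_observer` are hypothetical names, and an asyncio event loop stands in for the thread that should be running other continuations. The heartbeat ticks every 10 ms and records the worst gap between ticks while an observer pauses either with a blocking sleep or with an awaitable one.

```python
import asyncio
import time


async def heartbeat(ticks, interval, gaps):
    """Tick periodically and record the time between consecutive ticks."""
    last = time.perf_counter()
    for _ in range(ticks):
        await asyncio.sleep(interval)
        now = time.perf_counter()
        gaps.append(now - last)
        last = now


async def blocking_observer(pause):
    """Hypothetical observer that pauses with a blocking sleep."""
    await asyncio.sleep(0.05)
    time.sleep(pause)  # holds the event loop thread; nothing else can run


async def cooperative_observer(pause):
    """Hypothetical observer that yields while it pauses."""
    await asyncio.sleep(0.05)
    await asyncio.sleep(pause)  # other coroutines keep running meanwhile


async def worst_gap(observer, pause=0.3):
    """Run the heartbeat next to the observer and return the longest gap."""
    gaps = []
    await asyncio.gather(heartbeat(20, 0.01, gaps), observer(pause))
    return max(gaps)


def run(observer):
    return asyncio.run(worst_gap(observer))


if __name__ == "__main__":
    print("blocking sleep, worst gap:    %.3f s" % run(blocking_observer))
    print("cooperative sleep, worst gap: %.3f s" % run(cooperative_observer))
```

With the blocking observer the worst gap is at least as long as the pause; with the cooperative one it should stay close to the tick interval.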

|

Hey, thanks for the update, but I couldn't find the code you're referring to. The only call to Thread.Sleep I could find is in |

|

@krauthaufen i think the reference is for this one: And indeed you're right, this observer thread (hidden behind task) increases the likelihood of the stalls. I tried so much, but if i recall correctly it still happened in long test runs overnight.

Your analysis and our investigations show that we kind of have a deeper problem here. Just to give you some more info:

In case of a deeper problem (which i suspect) i'm sorry we bothered you in this issue. What do you think? @stefanmaierhofer @krauthaufen @aszabo314 @ThomasOrtner EDIT: quick update, there is definitely a bug in |

|

Yeah you are right that we may be hitting a deeper problem. Following the pattern here I rewrote But it still does not solve the problem. |

|

Hi, we figured out that SwitchToNewThread does not actually create a new thread in the netstandard build of fsharp.core. Nonetheless, Tasks are not scheduled in a fair way, and therefore these delays cannot be completely avoided. As an example, we simply ran a Parallel.For loop which computes something while requesting something from suave. That way we were able to essentially block it; requests took up to a minute to complete. Shouldn't suave use a real lightweight thread library instead of TPL? Cheers |

|

some news: the accept performance is most likely the same problem. In this case suave's client tasks steal thread pool threads from the system, making it stall for new clients. This stems from the fact that scheduling is not fair here. Thus existing ping pong connections block their pool threads, and we need to wait until the accept continuation can be executed

Clearly, both parts of the program do not compose. Please note that the low level implementation using Also, with real threads or proper lightweight threads the expected performance would be: So in conclusion, if a thread pool thread, for whatever reason, e.g.

is blocked, continuations may be blocked for an arbitrary amount of time (typically a multiple of 500ms, being thread injection interval). |
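
The starvation effect described in this comment can be sketched with a fixed-size pool. This is a Python analogy under stated assumptions, not the .NET thread pool: `measure_queue_delay` is a hypothetical helper, and Python's `ThreadPoolExecutor` never injects extra threads, so the queued task waits for the full blocking time instead of a thread injection interval.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def measure_queue_delay(pool_size, n_blockers, block_seconds):
    """Return how long a trivial task waits before a pool worker runs it.

    n_blockers workers are first occupied by blocking sleeps; the trivial
    task stands in for a continuation that needs a free pool thread.
    """
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        blockers = [pool.submit(time.sleep, block_seconds)
                    for _ in range(n_blockers)]
        submitted = time.perf_counter()
        # The task only records the moment at which a worker picks it up.
        quick = pool.submit(time.perf_counter)
        started = quick.result()
        for b in blockers:
            b.result()
    return started - submitted


if __name__ == "__main__":
    # Every worker is blocked: the quick task waits for the blocking time.
    print("all workers blocked: %.3f s" % measure_queue_delay(2, 2, 0.3))
    # One worker is still free: the quick task runs almost immediately.
    print("one worker free:     %.3f s" % measure_queue_delay(2, 1, 0.3))
```

The two parts of the program only compose when at least one worker stays free, which is the same observation as above in a much smaller setting.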

I encountered two (most likely independent) performance problems with suave websockets.

A setup which demonstrates the problem can be achieved as such:

lurkers).

In this setup, the WebSocket example has been modified in order to show the problem in a concise manner.

For demostration, use the test repo, start the modified websocket example and open

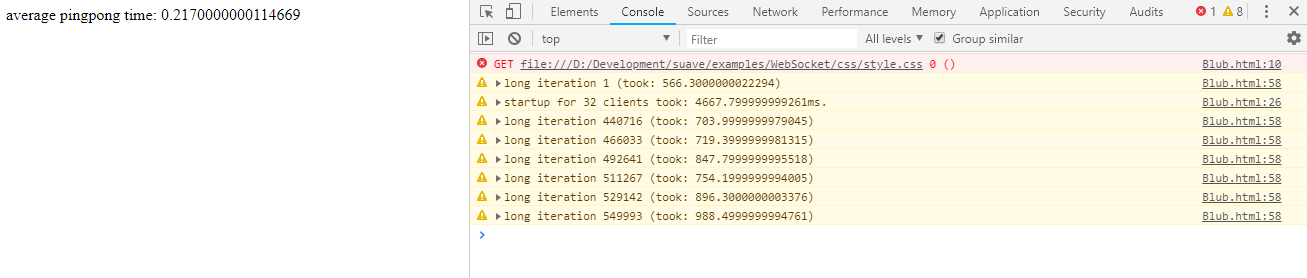

Blub.htmlavailable in the Websocket directory in a web browser.In your chrome you will see:

Problem 1:

As you will see, the ping pong typically takes 0.21ms; however, the application suddenly starts printing long iteration warnings, showing round-trip times of more than 500ms.

In the output log you will see stuff as such:

On a machine with more CPUs, or when setting a higher MinThreads in the thread pool, this problem goes away. We will shortly submit an issue to coreclr @krauthaufen

The problem is simply the way async operations work on sockets in combination with varying workloads as simulated by the two kinds of sockets.

Problem 2:

Opening many WebSocket connections, starting from approximately 10, takes forever.

In the screenshot you can see the connection time for 32 clients (as shown in Blub.html), which is about 5 to 20 seconds.

This is most likely due to problems in concurrent buffer management.
